Descriptive Statistics: Reporting the Answers to the 5 Basic Questions of Who, What, Why, When, Where, and a Sixth, So What?

Affiliation.

- 1 From the Department of Surgery and Perioperative Care, Dell Medical School at the University of Texas at Austin, Austin, Texas.

- PMID: 28891910

- DOI: 10.1213/ANE.0000000000002471

Descriptive statistics are specific methods basically used to calculate, describe, and summarize collected research data in a logical, meaningful, and efficient way. Descriptive statistics are reported numerically in the manuscript text and/or in its tables, or graphically in its figures. This basic statistical tutorial discusses a series of fundamental concepts about descriptive statistics and their reporting. The mean, median, and mode are 3 measures of the center or central tendency of a set of data. In addition to a measure of its central tendency (mean, median, or mode), another important characteristic of a research data set is its variability or dispersion (ie, spread). In simplest terms, variability is how much the individual recorded scores or observed values differ from one another. The range, standard deviation, and interquartile range are 3 measures of variability or dispersion. The standard deviation is typically reported for a mean, and the interquartile range for a median. Testing for statistical significance, along with calculating the observed treatment effect (or the strength of the association between an exposure and an outcome), and generating a corresponding confidence interval are 3 tools commonly used by researchers (and their collaborating biostatistician or epidemiologist) to validly make inferences and more generalized conclusions from their collected data and descriptive statistics. A number of journals, including Anesthesia & Analgesia, strongly encourage or require the reporting of pertinent confidence intervals. A confidence interval can be calculated for virtually any variable or outcome measure in an experimental, quasi-experimental, or observational research study design. Generally speaking, in a clinical trial, the confidence interval is the range of values within which the true treatment effect in the population likely resides. 
In an observational study, the confidence interval is the range of values within which the true strength of the association between the exposure and the outcome (eg, the risk ratio or odds ratio) in the population likely resides. There are many possible ways to graphically display or illustrate different types of data. While there is often latitude as to the choice of format, ultimately, the simplest and most comprehensible format is preferred. Common examples include a histogram, bar chart, line chart or line graph, pie chart, scatterplot, and box-and-whisker plot. Valid and reliable descriptive statistics can answer basic yet important questions about a research data set, namely: "Who, What, Why, When, Where, How, How Much?"
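To make the confidence-interval idea concrete, here is a minimal Python sketch that is not taken from the article. It computes an approximate 95% confidence interval for a mean using the normal approximation (z = 1.96). The `scores` values and the function name `mean_confidence_interval` are hypothetical, chosen only for illustration. For small samples a t-based interval, which is somewhat wider, would usually be preferred.

```python
import math
import statistics


def mean_confidence_interval(values, z=1.96):
    """Approximate CI for a mean via the normal approximation (assumption)."""
    n = len(values)
    mean = statistics.mean(values)
    # Standard error of the mean: sample SD divided by sqrt(n).
    sem = statistics.stdev(values) / math.sqrt(n)
    return mean - z * sem, mean + z * sem


# Hypothetical outcome scores, invented for this sketch.
scores = [3, 5, 4, 6, 2, 5, 4, 7, 3, 5]
low, high = mean_confidence_interval(scores)
print(f"mean = {statistics.mean(scores):.2f}, 95% CI ~ ({low:.2f}, {high:.2f})")
```

The interval is centred on the sample mean and narrows as the sample size grows, since the standard error shrinks with the square root of n.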


Descriptive Statistics | Definitions, Types, Examples

Published on July 9, 2020 by Pritha Bhandari. Revised on June 21, 2023.

Descriptive statistics summarize and organize characteristics of a data set. A data set is a collection of responses or observations from a sample or entire population.

In quantitative research, after collecting data, the first step of statistical analysis is to describe characteristics of the responses, such as the average of one variable (e.g., age), or the relation between two variables (e.g., age and creativity).

The next step is inferential statistics, which help you decide whether your data confirms or refutes your hypothesis and whether it is generalizable to a larger population.


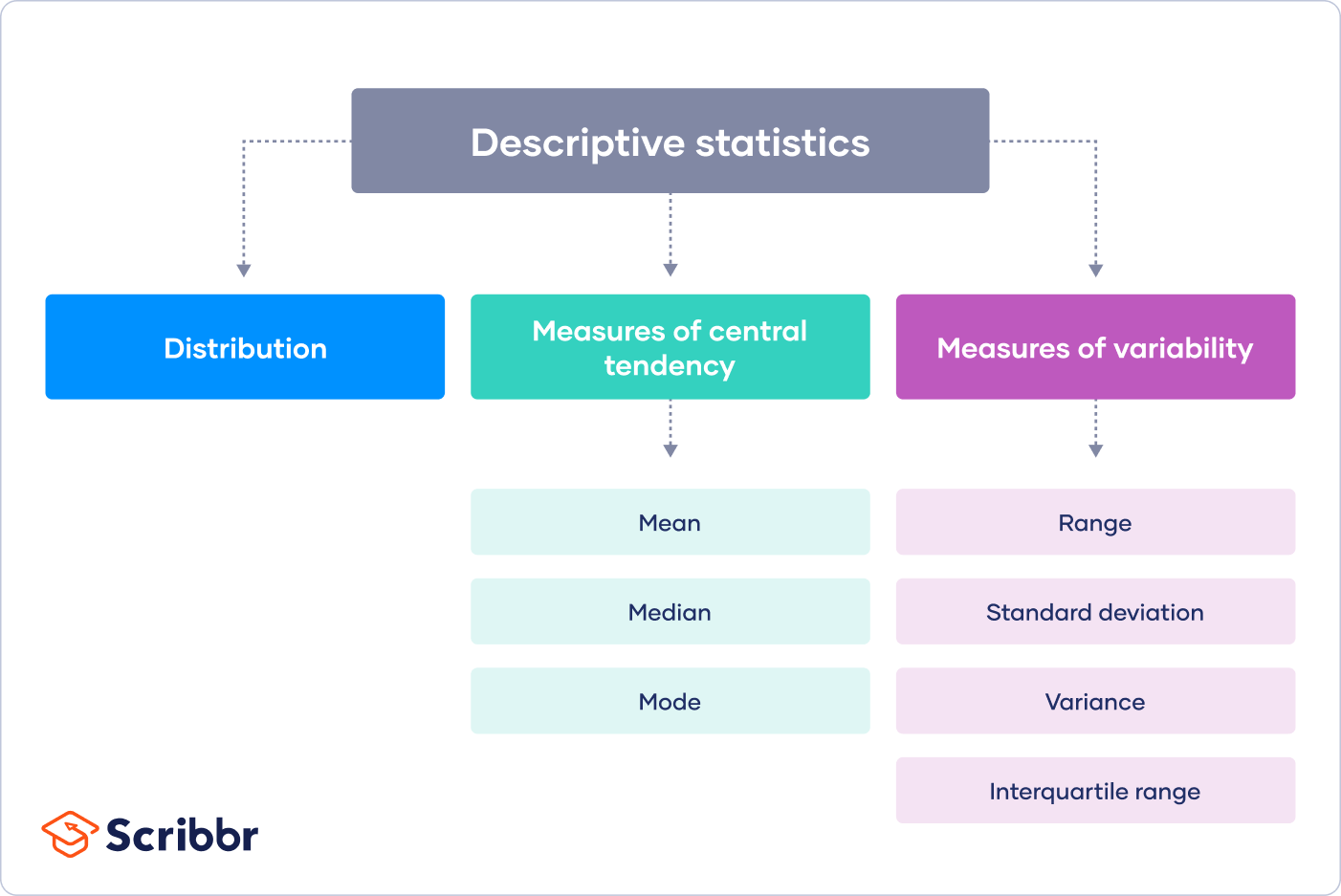

There are 3 main types of descriptive statistics:

- The distribution concerns the frequency of each value.

- The central tendency concerns the averages of the values.

- The variability or dispersion concerns how spread out the values are.

You can apply these to assess only one variable at a time, in univariate analysis, or to compare two or more, in bivariate and multivariate analysis.

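The examples in this article draw on a survey that asks participants how many times in the past year they did each of the following:

- Go to a library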

- Watch a movie at a theater

- Visit a national park


A data set is made up of a distribution of values, or scores. In tables or graphs, you can summarize the frequency of every possible value of a variable in numbers or percentages. This is called a frequency distribution.

- Simple frequency distribution table

- Grouped frequency distribution table

| Gender | Number |
|---|---|
| Male | 182 |
| Female | 235 |
| Other | 27 |

From this table, you can see that more women than men or people with another gender identity took part in the study. In a grouped frequency distribution, you can group numerical response values and add up the number of responses for each group. You can also convert each of these numbers to percentages.

| Library visits in the past year | Percent |
|---|---|
| 0–4 | 6% |
| 5–8 | 20% |
| 9–12 | 42% |
| 13–16 | 24% |
| 17+ | 8% |
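Both kinds of frequency table can be sketched in a few lines of Python. The gender counts below come from the simple frequency table above, and the bin edges follow the grouped table. The `responses` list reuses the six survey values from the article's worked examples. The helper name `group_label` is an assumption made for this illustration.

```python
from collections import Counter

# Simple frequency distribution: counts from the gender table.
gender_counts = {"Male": 182, "Female": 235, "Other": 27}
total = sum(gender_counts.values())
gender_percent = {g: round(100 * n / total, 1) for g, n in gender_counts.items()}

# Grouped frequency distribution: (label, lowest value, highest value or None).
BINS = [("0-4", 0, 4), ("5-8", 5, 8), ("9-12", 9, 12), ("13-16", 13, 16), ("17+", 17, None)]


def group_label(value):
    """Return the label of the bin that contains `value`."""
    for label, low, high in BINS:
        if value >= low and (high is None or value <= high):
            return label
    raise ValueError(f"value {value} is below the lowest bin")


# The six library-visit responses used in the article's worked examples.
responses = [15, 3, 12, 0, 24, 3]
grouped = Counter(group_label(v) for v in responses)

print(gender_percent)
print({label: grouped[label] for label, _, _ in BINS})
```

Converting counts to percentages makes groups of different sizes comparable, which is why the grouped table above reports percentages rather than raw counts.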

Measures of central tendency estimate the center, or average, of a data set. The mean, median and mode are 3 ways of finding the average.

Here we will demonstrate how to calculate the mean, median, and mode using the first 6 responses of our survey.

The mean, or M, is the most commonly used method for finding the average.

To find the mean, simply add up all response values and divide the sum by the total number of responses. The total number of responses or observations is called N.

| Data set | 15, 3, 12, 0, 24, 3 |
|---|---|
| Sum of all values | 15 + 3 + 12 + 0 + 24 + 3 = 57 |
| Total number of responses | N = 6 |
| Mean | Divide the sum of values by N to find M: 57/6 = 9.5 |

The median is the value that’s exactly in the middle of a data set.

To find the median, order each response value from the smallest to the biggest. Then, the median is the number in the middle. If there are two numbers in the middle, find their mean.

| Ordered data set | 0, 3, 3, 12, 15, 24 |
|---|---|
| Middle numbers | 3, 12 |
| Median | Find the mean of the two middle numbers: (3 + 12)/2 = 7.5 |

The mode is simply the most popular or most frequent response value. A data set can have no mode, one mode, or more than one mode.

To find the mode, order your data set from lowest to highest and find the response that occurs most frequently.

| Ordered data set | 0, 3, 3, 12, 15, 24 |
|---|---|
| Mode | Find the most frequently occurring response: 3 |
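The three measures of central tendency can be checked with Python's standard `statistics` module. This is a minimal sketch that uses the six survey responses from the tables above. The variable names are chosen for illustration only.

```python
import statistics

# First six survey responses (library visits in the past year).
responses = [15, 3, 12, 0, 24, 3]

n = len(responses)                        # N, the number of responses
mean = sum(responses) / n                 # sum of values divided by N
median = statistics.median(responses)     # mean of the two middle values when N is even
modes = statistics.multimode(responses)   # list of every most-frequent value

print(f"N = {n}, mean = {mean}, median = {median}, mode(s) = {modes}")
```

`statistics.multimode` returns a list, which matches the point that a data set can have more than one mode. When every value occurs only once it returns all of them, so that case is best read as "no mode".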

Measures of variability give you a sense of how spread out the response values are. The range, standard deviation and variance each reflect different aspects of spread.

The range gives you an idea of how far apart the most extreme response scores are. To find the range, simply subtract the lowest value from the highest value. For the six responses above, the range is 24 – 0 = 24.

Standard deviation

The standard deviation (s or SD) is the average amount of variability in your dataset. It tells you, on average, how far each score lies from the mean. The larger the standard deviation, the more variable the data set is.

There are six steps for finding the standard deviation:

1. List each score and find their mean.

2. Subtract the mean from each score to get the deviation from the mean.

3. Square each of these deviations.

4. Add up all of the squared deviations.

5. Divide the sum of the squared deviations by N – 1.

6. Find the square root of the number you found.

| Raw data | Deviation from mean | Squared deviation |
|---|---|---|
| 15 | 15 – 9.5 = 5.5 | 30.25 |
| 3 | 3 – 9.5 = -6.5 | 42.25 |
| 12 | 12 – 9.5 = 2.5 | 6.25 |
| 0 | 0 – 9.5 = -9.5 | 90.25 |
| 24 | 24 – 9.5 = 14.5 | 210.25 |
| 3 | 3 – 9.5 = -6.5 | 42.25 |
| M = 9.5 | Sum = 0 | Sum of squares = 421.5 |

Step 5: 421.5/5 = 84.3

Step 6: √84.3 = 9.18
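The six steps can be traced one line at a time in Python. This is a minimal sketch using the same six responses. The variable names are illustrative, and the final line cross-checks the result against the standard library's `statistics.stdev`, which also divides by N – 1.

```python
import math
import statistics

responses = [15, 3, 12, 0, 24, 3]

mean = sum(responses) / len(responses)             # Step 1: find the mean
deviations = [x - mean for x in responses]         # Step 2: deviation from the mean
squared = [d ** 2 for d in deviations]             # Step 3: square each deviation
sum_of_squares = sum(squared)                      # Step 4: add up the squares
variance = sum_of_squares / (len(responses) - 1)   # Step 5: divide by N - 1
std_dev = math.sqrt(variance)                      # Step 6: take the square root

value_range = max(responses) - min(responses)      # range: highest minus lowest

print(f"sum of squares = {sum_of_squares}, variance = {variance:.1f}, SD = {std_dev:.2f}")
print(f"range = {value_range}, stdev check = {statistics.stdev(responses):.2f}")
```

Step 5 already yields the variance, so squaring the standard deviation and stopping after Step 5 are two routes to the same number.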

The variance is the average of squared deviations from the mean. Variance reflects the degree of spread in the data set. The more spread the data, the larger the variance is in relation to the mean.

To find the variance, simply square the standard deviation. The symbol for variance is s².


Univariate descriptive statistics focus on only one variable at a time. It’s important to examine data from each variable separately using multiple measures of distribution, central tendency and spread. Programs like SPSS and Excel can be used to easily calculate these.

| Visits to the library | |
|---|---|
| N | 6 |
| Mean | 9.5 |
| Median | 7.5 |
| Mode | 3 |
| Standard deviation | 9.18 |
| Variance | 84.3 |
| Range | 24 |

If you were to consider only the mean as a measure of central tendency, your impression of the “middle” of the data set could be skewed by outliers. The median and mode are much less affected by extreme values.

Likewise, because the range is sensitive to outliers, you should also consider the standard deviation and variance to get easily comparable measures of spread.

If you’ve collected data on more than one variable, you can use bivariate or multivariate descriptive statistics to explore whether there are relationships between them.

In bivariate analysis, you simultaneously study the frequency and variability of two variables to see if they vary together. You can also compare the central tendency of the two variables before performing further statistical tests.

Multivariate analysis is the same as bivariate analysis but with more than two variables.

Contingency table

In a contingency table, each cell represents the intersection of two variables. Usually, an independent variable (e.g., gender) appears along the vertical axis and a dependent one appears along the horizontal axis (e.g., activities). You read “across” the table to see how the independent and dependent variables relate to each other.

Number of visits to the library in the past year

| Group | 0–4 | 5–8 | 9–12 | 13–16 | 17+ |
|---|---|---|---|---|---|
| Children | 32 | 68 | 37 | 23 | 22 |
| Adults | 36 | 48 | 43 | 83 | 25 |

Interpreting a contingency table is easier when the raw data is converted to percentages. Percentages make each row comparable to the other by making it seem as if each group had only 100 observations or participants. When creating a percentage-based contingency table, you add the N for each independent variable on the end.

Visits to the library in the past year (percentages)

| Group | 0–4 | 5–8 | 9–12 | 13–16 | 17+ | N |
|---|---|---|---|---|---|---|
| Children | 18% | 37% | 20% | 13% | 12% | 182 |
| Adults | 15% | 20% | 18% | 35% | 11% | 235 |

From this table, it is clearer that similar proportions of children and adults go to the library 17 or more times a year. Additionally, children most commonly went to the library between 5 and 8 times, while for adults, this number was between 13 and 16.
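The conversion from raw counts to row percentages can be reproduced with a short Python sketch. The counts are the ones in the contingency table above. The function name `row_percentages` is an assumption made for this illustration.

```python
visit_bins = ["0-4", "5-8", "9-12", "13-16", "17+"]

# Raw counts per group, in the same order as visit_bins.
counts = {
    "Children": [32, 68, 37, 23, 22],
    "Adults": [36, 48, 43, 83, 25],
}


def row_percentages(row):
    """Return each cell as a whole-number percentage of its row total, plus N."""
    total = sum(row)
    return [round(100 * c / total) for c in row], total


table = {group: row_percentages(row) for group, row in counts.items()}

for group, (percents, n) in table.items():
    cells = ", ".join(f"{b}: {p}%" for b, p in zip(visit_bins, percents))
    print(f"{group} (N = {n}): {cells}")
```

Because each cell is rounded separately, a row of percentages can sum to 99 or 101 rather than exactly 100, as happens for the adults here.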

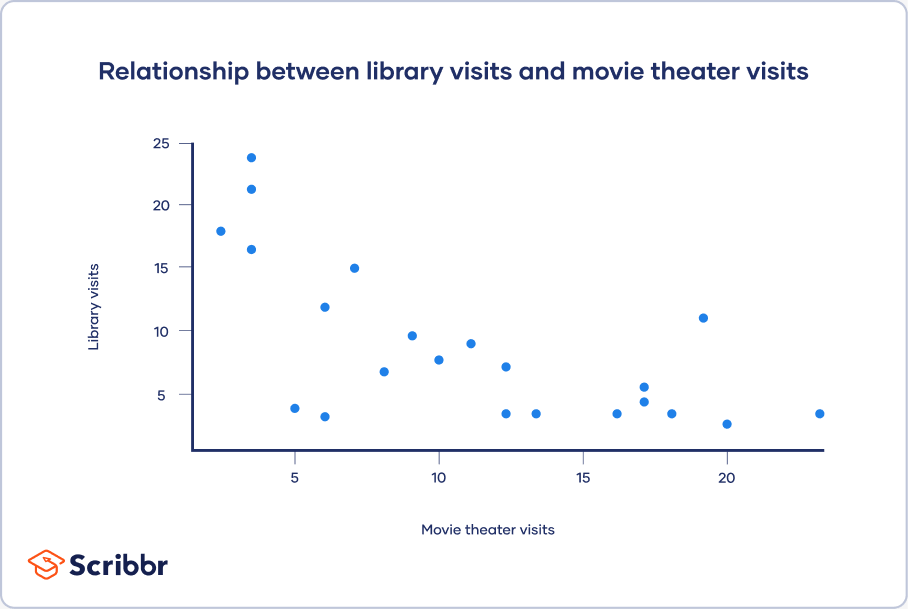

Scatter plots

A scatter plot is a chart that shows you the relationship between two or three variables. It’s a visual representation of the strength of a relationship.

In a scatter plot, you plot one variable along the x-axis and another one along the y-axis. Each data point is represented by a point in the chart.

From your scatter plot, you see that as the number of movies seen at movie theaters increases, the number of visits to the library decreases. Based on your visual assessment of a possible linear relationship, you perform further tests of correlation and regression.
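As a follow-up to the visual check, a correlation coefficient puts a number on the linear relationship. The sketch below computes Pearson's r from its definition. The `movies` and `library_visits` values are hypothetical, invented to mimic the negative pattern described above, and are not the survey's actual data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation: co-variation divided by the product of the spreads."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    co_variation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return co_variation / math.sqrt(ss_x * ss_y)


# Hypothetical paired observations, one pair per participant.
movies = [0, 2, 4, 6, 8, 10]
library_visits = [24, 15, 12, 9, 3, 3]

r = pearson_r(movies, library_visits)
print(f"r = {r:.2f}")
```

A value near -1 indicates a strong negative linear relationship and a value near 0 little or no linear relationship. A formal significance test would still be a separate step.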


Descriptive statistics summarize the characteristics of a data set. Inferential statistics allow you to test a hypothesis or assess whether your data is generalizable to the broader population.

The 3 main types of descriptive statistics concern the frequency distribution, central tendency, and variability of a dataset.

- Distribution refers to the frequencies of different responses.

- Measures of central tendency give you the average for each response.

- Measures of variability show you the spread or dispersion of your dataset.

- Univariate statistics summarize only one variable at a time.

- Bivariate statistics compare two variables.

- Multivariate statistics compare more than two variables.


Bhandari, P. (2023, June 21). Descriptive Statistics | Definitions, Types, Examples. Scribbr. Retrieved August 21, 2024, from https://www.scribbr.com/statistics/descriptive-statistics/


Quant Analysis 101: Descriptive Statistics

Everything You Need To Get Started (With Examples)

By: Derek Jansen (MBA) | Reviewers: Kerryn Warren (PhD) | October 2023

If you’re new to quantitative data analysis, one of the first terms you’re likely to hear being thrown around is descriptive statistics. In this post, we’ll unpack the basics of descriptive statistics, using straightforward language and loads of examples. So grab a cup of coffee and let’s crunch some numbers!

What are descriptive statistics?

At the simplest level, descriptive statistics summarise and describe relatively basic but essential features of a quantitative dataset – for example, a set of survey responses. They provide a snapshot of the characteristics of your dataset and allow you to better understand, roughly, how the data are “shaped” (more on this later). For example, a descriptive statistic could include the proportion of males and females within a sample or the percentages of different age groups within a population.

Another common descriptive statistic is the humble average (which in statistics-talk is called the mean). For example, if you undertook a survey and asked people to rate their satisfaction with a particular product on a scale of 1 to 10, you could then calculate the average rating. This is a very basic statistic, but as you can see, it gives you some idea of how this data point is shaped.

What about inferential statistics?

Now, you may have also heard the term inferential statistics being thrown around, and you’re probably wondering how that’s different from descriptive statistics. Simply put, descriptive statistics describe and summarise the sample itself, while inferential statistics use the data from a sample to make inferences or predictions about a population.

Put another way, descriptive statistics help you understand your dataset, while inferential statistics help you make broader statements about the population, based on what you observe within the sample. If you’re keen to learn more, we cover inferential stats in another post.

Why do descriptive statistics matter?

While descriptive statistics are relatively simple from a mathematical perspective, they play a very important role in any research project. All too often, students skim over the descriptives and run ahead to the seemingly more exciting inferential statistics, but this can be a costly mistake.

The reason for this is that descriptive statistics help you, as the researcher, comprehend the key characteristics of your sample without getting lost in vast amounts of raw data. In doing so, they provide a foundation for your quantitative analysis. Additionally, they enable you to quickly identify potential issues within your dataset – for example, suspicious outliers, missing responses and so on. Just as importantly, descriptive statistics inform the decision-making process when it comes to choosing which inferential statistics you’ll run, as each inferential test has specific requirements regarding the shape of the data.

Long story short, it’s essential that you take the time to dig into your descriptive statistics before looking at more “advanced” inferentials. It’s also worth noting that, depending on your research aims and questions, descriptive stats may be all that you need in any case. So, don’t discount the descriptives!

The “Big 7” descriptive statistics

With the what and why out of the way, let’s take a look at the most common descriptive statistics. Beyond the counts, proportions and percentages we mentioned earlier, we have what we call the “Big 7” descriptives. These can be divided into two categories – measures of central tendency and measures of dispersion.

Measures of central tendency

True to the name, measures of central tendency describe the centre or “middle section” of a dataset. In other words, they provide some indication of what a “typical” data point looks like within a given dataset. The three most common measures are:

- The mean, which is the mathematical average of a set of numbers – in other words, the sum of all numbers divided by the count of all numbers.

- The median, which is the middlemost number in a set of numbers, when those numbers are ordered from lowest to highest.

- The mode, which is the most frequently occurring number in a set of numbers (in any order). Naturally, a dataset can have one mode, no mode (no number occurs more than once) or multiple modes.

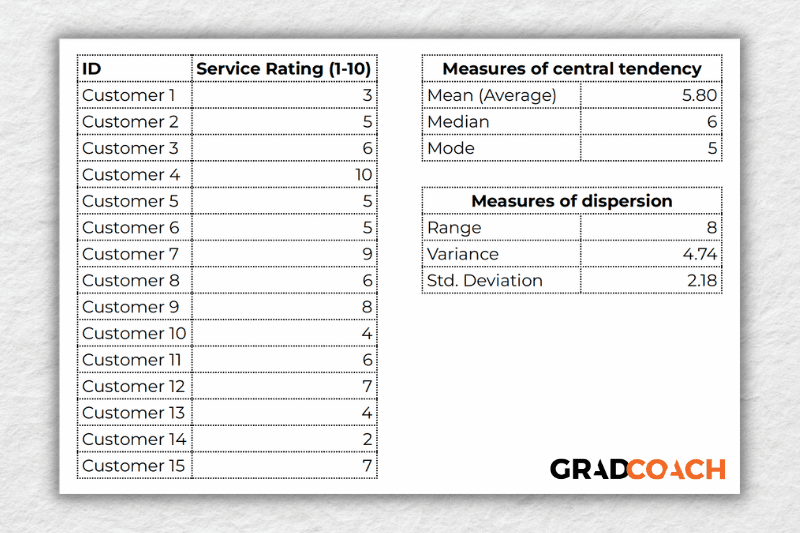

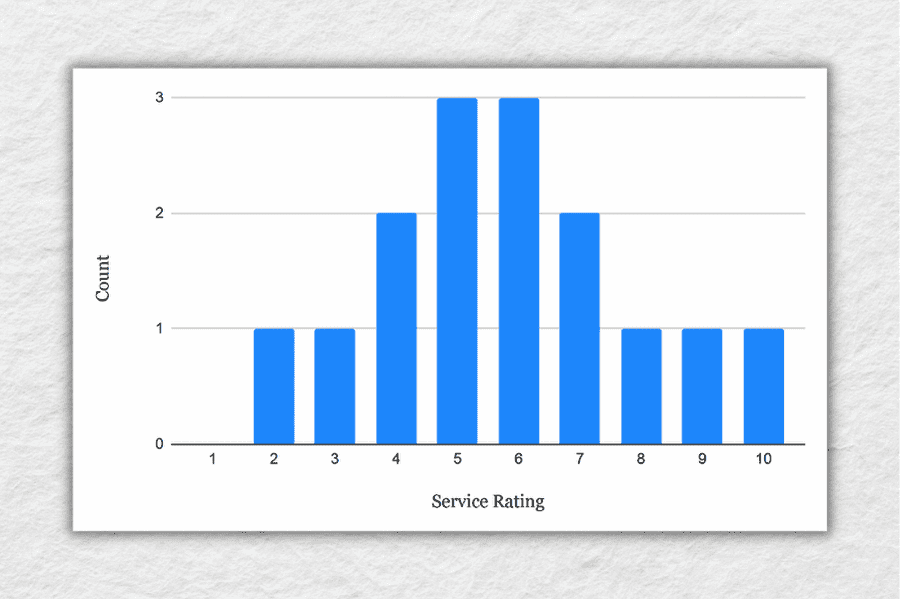

To make this a little more tangible, let’s look at a sample dataset, along with the corresponding mean, median and mode. This dataset reflects the service ratings (on a scale of 1 – 10) from 15 customers.

As you can see, the mean of 5.8 is the average rating across all 15 customers. Meanwhile, 6 is the median. In other words, if you were to list all the responses in order from low to high, Customer 8 would be in the middle (with their service rating being 6). Lastly, the number 5 is the most frequent rating (appearing 3 times), making it the mode.

Together, these three descriptive statistics give us a quick overview of how these customers feel about the service levels at this business. In other words, most customers feel rather lukewarm and there’s certainly room for improvement. From a more statistical perspective, this also means that the data tend to cluster around the 5-6 mark, since the mean and the median are fairly close to each other.

To take this a step further, let’s look at the frequency distribution of the responses. In other words, let’s count how many times each rating was received, and then plot these counts onto a bar chart.

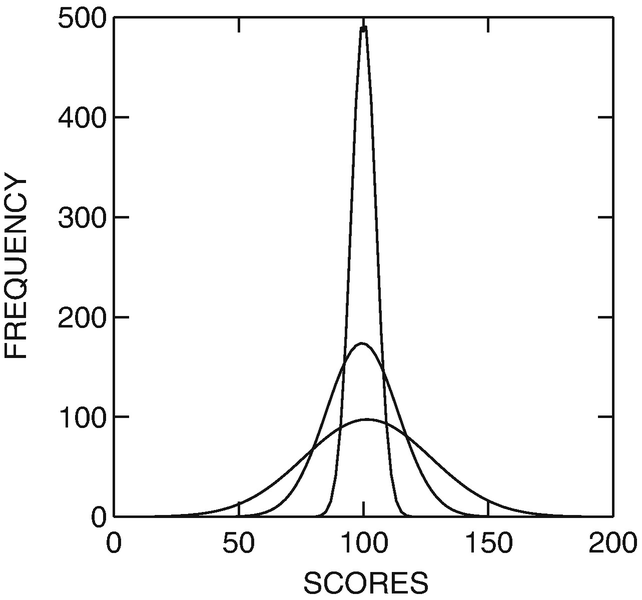

As you can see, the responses tend to cluster toward the centre of the chart, creating something of a bell-shaped curve. In statistical terms, this is called a normal distribution.

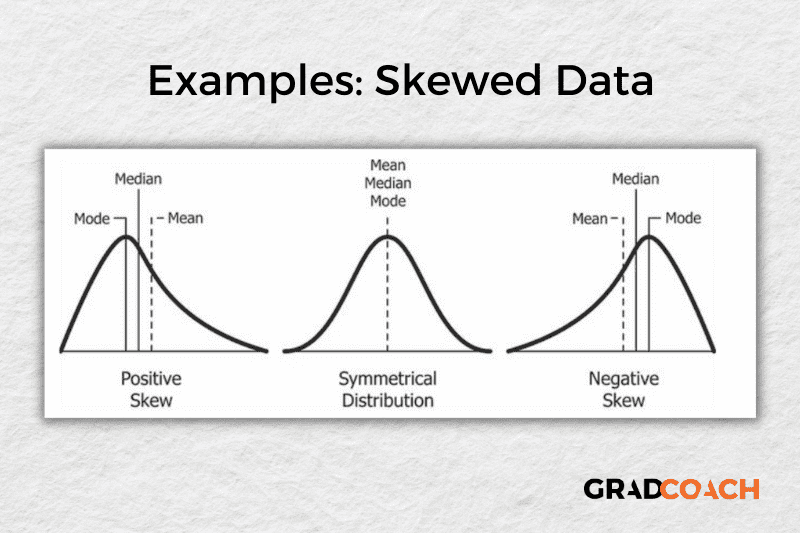

As you delve into quantitative data analysis, you’ll find that normal distributions are very common, but they’re certainly not the only type of distribution. In some cases, the data can lean toward the left or the right of the chart (i.e., toward the low end or high end). This lean is reflected by a measure called skewness, and it’s important to pay attention to this when you’re analysing your data, as this will have an impact on what types of inferential statistics you can use on your dataset.
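For readers who want to see how skewness is computed, here is a minimal Python sketch. It uses the simple moment-based coefficient (the third central moment divided by the second central moment raised to the power 1.5). That formula is an assumption on our part, since spreadsheet and statistics packages often apply a small-sample adjustment and so report slightly different values. The three rating lists are hypothetical.

```python
def skewness(values):
    """Moment-based skewness: m3 / m2**1.5 (no small-sample adjustment)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n   # second central moment
    m3 = sum((x - mean) ** 3 for x in values) / n   # third central moment
    if m2 == 0:
        raise ValueError("skewness is undefined when all values are equal")
    return m3 / m2 ** 1.5


# Hypothetical ratings, invented for this sketch.
symmetric = [2, 4, 5, 5, 5, 6, 8]      # evenly balanced around 5
left_tail = [2, 7, 8, 8, 9, 9, 10]     # bulk at the high end, one low straggler
right_tail = [0, 1, 1, 2, 2, 3, 8]     # bulk at the low end, one high straggler

for name, data in [("symmetric", symmetric), ("left_tail", left_tail), ("right_tail", right_tail)]:
    print(f"{name}: skewness = {skewness(data):.2f}")
```

The sign follows the tail: when most values sit at the high end with a tail toward low values, skewness is negative, and the reverse pattern gives a positive value.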

Measures of dispersion

While the measures of central tendency provide insight into how “centred” the dataset is, it’s also important to understand how dispersed that dataset is. In other words, you need to know to what extent the data cluster toward the centre – specifically, the mean. In some cases, the majority of the data points will sit very close to the centre, while in other cases, they’ll be scattered all over the place. Enter the measures of dispersion, of which there are three:

- Range, which measures the difference between the largest and smallest number in the dataset. In other words, it indicates how spread out the dataset really is.

- Variance, which measures how much each number in a dataset varies from the mean (average). More technically, it calculates the average of the squared differences between each number and the mean. A higher variance indicates that the data points are more spread out, while a lower variance suggests that the data points are closer to the mean.

- Standard deviation, which is the square root of the variance. It serves the same purposes as the variance, but is a bit easier to interpret as it presents a figure that is in the same unit as the original data. You’ll typically present this statistic alongside the means when describing the data in your research.

Again, let’s look at our sample dataset to make this all a little more tangible.

As you can see, the range of 8 reflects the difference between the highest rating (10) and the lowest rating (2). The standard deviation of 2.18 tells us that on average, results within the dataset are 2.18 away from the mean (of 5.8), reflecting a relatively dispersed set of data.

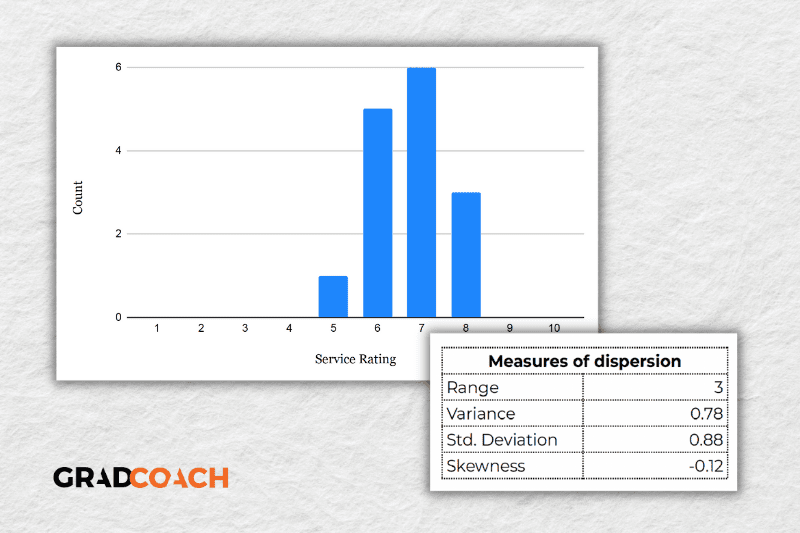

For the sake of comparison, let’s look at another much more tightly grouped (less dispersed) dataset.

As you can see, all the ratings lie between 5 and 8 in this dataset, resulting in a much smaller range, variance and standard deviation. You might also notice that the data are clustered toward the right side of the graph – in other words, the data are skewed. If we calculate the skewness for this dataset, we get a result of -0.12; the negative value indicates a longer tail toward the low end, with the bulk of the ratings sitting toward the right.

In summary, range, variance and standard deviation all provide an indication of how dispersed the data are. These measures are important because they help you interpret the measures of central tendency within context. In other words, if your measures of dispersion are all fairly high numbers, you need to interpret your measures of central tendency with some caution, as the results are not particularly centred. Conversely, if the data are all tightly grouped around the mean (i.e., low dispersion), the mean becomes a much more “meaningful” statistic.

Key Takeaways

We’ve covered quite a bit of ground in this post. Here are the key takeaways:

- Descriptive statistics, although relatively simple, are a critically important part of any quantitative data analysis.

- Measures of central tendency include the mean (average), median and mode.

- Skewness indicates whether a dataset leans to one side or another.

- Measures of dispersion include the range, variance and standard deviation.


Describing the participants in a study


R. M. Pickering, Describing the participants in a study, Age and Ageing, Volume 46, Issue 4, July 2017, Pages 576–581, https://doi.org/10.1093/ageing/afx054


This paper reviews the use of descriptive statistics to describe the participants included in a study. It discusses the practicalities of incorporating statistics in papers for publication in Age and Ageing, concisely and in ways that are easy for readers to understand and interpret.

Most papers reporting analysis of clinical data will at some point use statistics to describe the socio-demographic characteristics and medical history of the study participants. An important reason for doing this is to give the reader some idea of the extent to which study findings can be generalised to their own local situation. The production of descriptive statistics is a straightforward matter, most statistical packages producing all the statistics one could possibly desire, and a choice has to be made over which ones to present. These then have to be included in a paper in a manner that is easy for readers to assimilate. There may be constraints on the amount of space available, and it is in any case a good idea to make statistical display as concise as possible. This article reviews the statistics that might be used to describe a sample of older people, and gives tips on how best to do this in a paper for publication in Age and Ageing. It builds on a previously published paper [1].

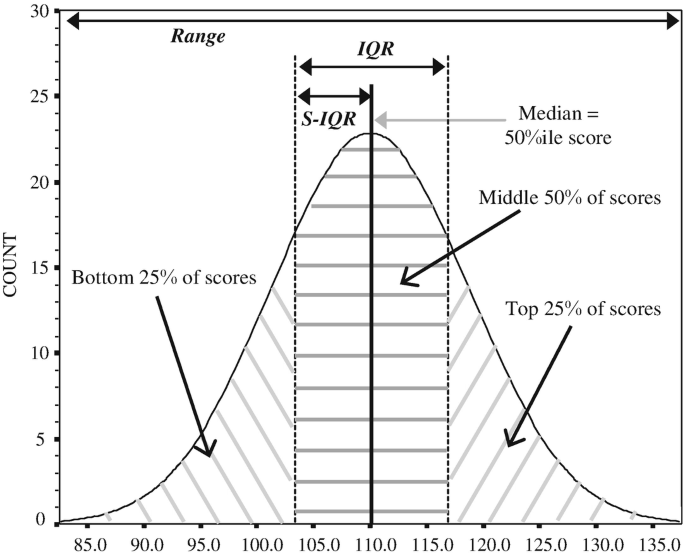

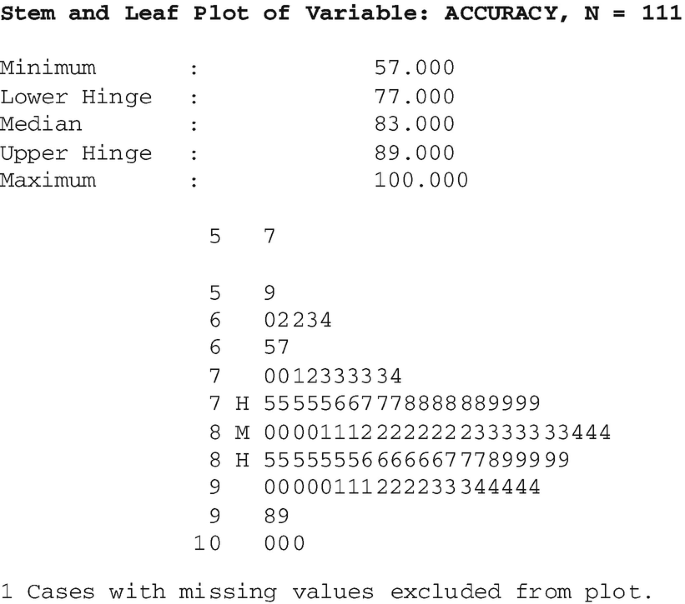

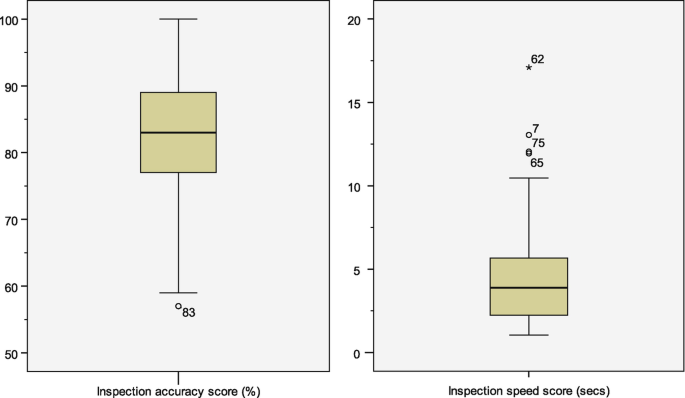

The values observed in a group of subjects, when measurements of a quantitative characteristic are made, are called the distribution of values. Graphical displays can be used to show the detail of the distribution in a variety of ways, but they take up a considerable amount of space. A precis of two key features of the distribution, its centre and its spread, is usually presented using descriptive statistics. The centre of a distribution can be described by its mean or median, and the spread by its standard deviation (SD), range, or inter-quartile range (IQR). Definitions and properties of these statistics are given in statistical textbooks [2].

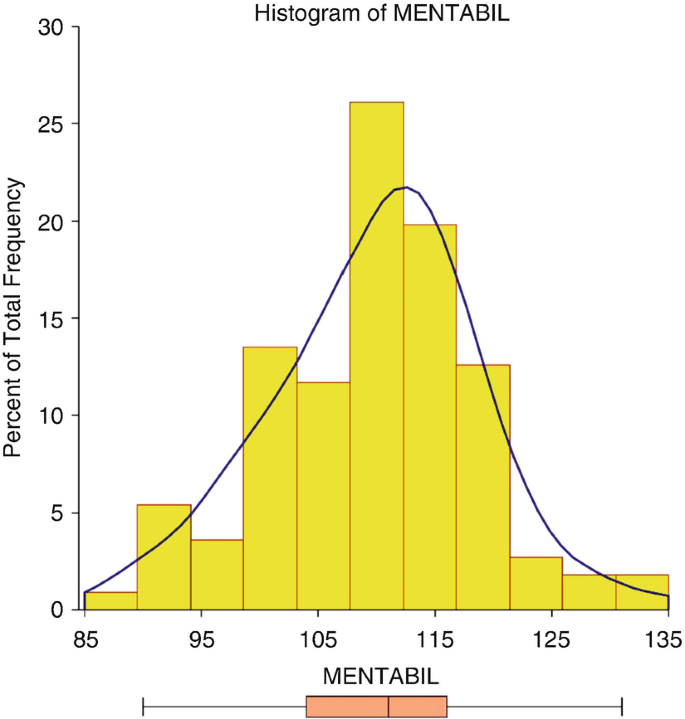

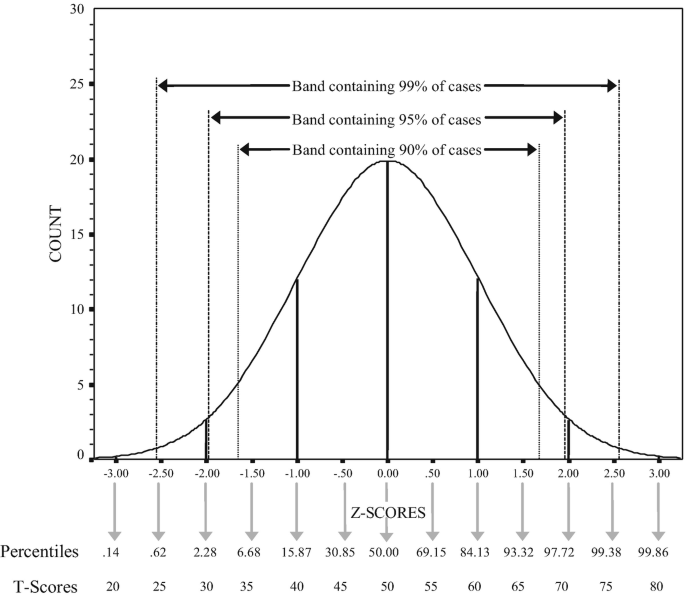

Figure 1 a shows an idealised symmetric distribution for a quantitative variable. The mean might be used here to describe where the centre of the distribution lies and the SD to give an idea of how spread out values are around the centre. SDs are particularly appropriate where a symmetric distribution approximately follows the bell-shaped pattern shown in Figure 1 a which is called the normal distribution. For such a distribution the large majority, 95%, of values observed in a sample will fall between the values two SDs above and below the mean, called the normal range. Presentation of the mean and SD invites the reader to calculate the normal range and think of it as covering most of the distribution of values. Another reason for presenting the SD is that it is required in calculations of sample size for approximately normally distributed outcomes, and can be used by readers in planning future studies. A graphical display of approximately normally distributed real data (age at admission amongst 373 study participants) is shown in Figure 1 c: with relatively small sample size a smooth distribution such as that shown in Figure 1 a cannot be achieved. The mean (82.9) and SD (6.8) of the age distribution lead to the normal range 69.3–96.5 years, which can be seen in Figure 1 c to cover most of the ages in the sample: 14 subjects fall below 69.3 and 7 fall above 96.5, so that the range actually covers 352 (94.4%) of the 373 participants, close to the anticipated 95%. For familiar measurements, such as age, there is additional value in presenting the range, the minimum and maximum values attained. Knowing that the study included people aged between 65 and 101 years is immediately meaningful, whereas the value of the SD is more difficult to interpret.
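The normal range arithmetic described above can be reproduced in a few lines. The following is a minimal sketch that uses only the summary figures quoted in the text; the function name `normal_range` is an illustrative assumption, not something taken from the paper.

```python
def normal_range(mean, sd):
    """Return the (lower, upper) limits of mean ± 2 SD."""
    return mean - 2 * sd, mean + 2 * sd


# Age at admission: mean and SD as quoted in the text.
lower, upper = normal_range(82.9, 6.8)
print(f"normal range: {lower:.1f} to {upper:.1f} years")

# Coverage check using the counts quoted in the text:
# 14 subjects below the lower limit and 7 above the upper limit, out of 373.
n_total, n_below, n_above = 373, 14, 7
n_inside = n_total - n_below - n_above
coverage = 100 * n_inside / n_total
print(f"{n_inside} of {n_total} inside the normal range ({coverage:.1f}%)")
```

The same function can be applied to any mean and SD pair reported in a table, which is essentially the calculation a reader is invited to do.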

Figure 1. Idealised and real data distributions. (a) Symmetrical distribution. (b) Skewed distribution. (c) Dotplot (each dot representing one value) of an approximately symmetrical distribution indicating the normal range: age in years at admission ( n = 373). (d) Dotplot (each dot representing one value) of a skewed distribution with outliers emphasised and indicating mean and median: hours in A&E ( n = 348).

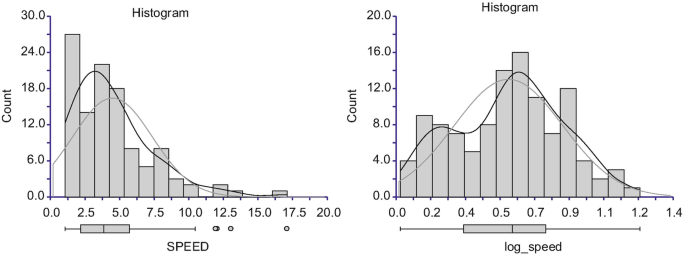

When a distribution is skewed (Figure 1 b) just one or two extreme values, ‘outliers’, in one of the tails of the distribution (to the right in Figure 1 b) pull the mean away from the obvious central value. An alternative statistic describing central location is the median, defined as the point with 50% of the sample falling above it and 50% below. Figure 1 d shows the distribution of real data (hours in A&E amongst 348 study participants) following a skewed distribution. A few excessively long A&E stays pull the mean to the higher value of 4.9 h compared to the median of 4.4 h: the effect would be greater with a higher proportion of subjects having long stays. The median is often recommended as the preferred statistic to describe the centre of a skewed distribution, but the mean can be helpful. If the attribute being described takes only a limited number of values, the medians of two groups can take the same value in spite of substantial differences in the tails. In these circumstances, the mean can be sensitive to an overall shift in distribution while the median is not. When a comparison of cost based on length of stay is to be made, presenting means of the skewed distributions facilitates calculation of cost savings per subject by applying unit cost to the difference in means. Figure 1 b suggests that the value with highest frequency might be a useful descriptor of the centre of a distribution. In practice, this can prove awkward: depending on the precision of measurement there may be no value occurring more than once.
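To illustrate how an outlier pulls the mean but not the median, here is a small sketch using Python's standard `statistics` module. The data values are hypothetical, chosen only to resemble short hospital stays; they are not the study data.

```python
from statistics import mean, median, multimode

# Hypothetical hours in A&E (illustrative values, not the study data).
base = [3.1, 3.5, 3.9, 4.4, 4.9, 5.4, 6.0]

# Replace the longest stay with one extreme value (an outlier).
with_outlier = base[:-1] + [40.3]

print("mean without / with outlier:  ", round(mean(base), 2), round(mean(with_outlier), 2))
print("median without / with outlier:", median(base), median(with_outlier))

# With precisely measured data every value may occur only once, so the
# "most frequent value" is not informative: multimode returns all of them.
modes = multimode(base)
print("number of tied modes:", len(modes))
```

The median is unchanged because only the ordering of values matters to it, whereas the mean responds to the size of the extreme value.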

It is clear from Figure 1 b that no single number can adequately describe the spread of a skewed distribution because spread is greater in one direction than the other. The range (from 1.7 to 40.3 h in A&E in our skewed example) could be used. Another possibility is the IQR (from 3.5 to 5.4 h in A&E) covering the central 50% of the distribution. The SD may be presented even though a distribution is skewed, and could be useful to readers for approximate power calculations, but the normal range derived from the mean and SD will be misleading. With mean(SD) = 4.9(3.2), the lower limit of the normal range of hours in A&E is the impossible negative value of –1.5 h, while the upper limit of 11.3 h lies well below the extreme values exhibited in Figure 1 d.
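A short sketch of these spread measures follows. The mean and SD are the values quoted in the text; the list of hours is hypothetical and serves only to show how a range and IQR might be computed with `statistics.quantiles` (whose default method is one of several quartile conventions, so other software may give slightly different quartiles).

```python
from statistics import quantiles

# Normal range from the quoted summary statistics, mean (SD) = 4.9 (3.2).
mean_hours, sd_hours = 4.9, 3.2
lower = mean_hours - 2 * sd_hours
upper = mean_hours + 2 * sd_hours
print(f"mean ± 2 SD: {lower:.1f} to {upper:.1f} h")  # lower limit is negative

# Hypothetical skewed sample (illustrative values, not the study data).
hours = [1.7, 2.9, 3.5, 3.8, 4.1, 4.4, 4.7, 5.0, 5.4, 6.2, 9.8, 40.3]

# Range: minimum and maximum observed values.
print("range:", min(hours), "to", max(hours))

# IQR: lower and upper quartiles, covering the central 50% of values.
q1, q2, q3 = quantiles(hours, n=4)
print("IQR:", round(q1, 2), "to", round(q3, 2))
```

Unlike mean ± 2 SD, the range and IQR limits are always values that could actually occur, so they cannot produce an impossible negative duration.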

Descriptive statistics may be presented in text, for example [ 3 ]:

Participants’ ages ranged from 50 to 87 years ( M = 66.1, SD = 7.8) with 56% identified as female, 64% married or partnered, 23% reported being retired or not working, 55% had post-secondary and higher education, and <20% reported living alone. Over 60% of the participants identified as NZ European. The mean of net personal annual income was $34,615. The participants reported the diagnosis of an average of 2.63 (±2.07) chronic health conditions, with 50% reported having three or more chronic health conditions.

There are perhaps too many attributes (age, gender, marital status, employment status, educational level, living arrangements, nationality, personal income and number of chronic conditions) being described in the excerpt above: it would be easier to assimilate this information from a table.

Table 1. Characteristics of subjects at admission and their operations before (1998/99) and after (2000/01) implementation of a care pathway [ 4 ]. Figures are number (% of non-missing values) unless otherwise stated.

| Characteristic | 1998/99 (n = 395) | 2000/01 (n = 373) |
|---|---|---|
| Age on admission (years) | | |
| Mean (SD) | 83 (7) | 83 (7) |
| Minimum–maximum | 65–101 | 65–101 |
| Gender | | |
| Male | 90 (23%) | 90 (24%) |
| Female | 305 (77%) | 283 (76%) |
| Admission domicile | | |
| Own home | 219 (55%) | 202 (54%) |
| Sheltered accommodation | 47 (12%) | 58 (16%) |
| Residential care | 90 (23%) | 83 (22%) |
| Nursing home | 18 (5%) | 15 (4%) |
| Other ward SUHT | 7 (2%) | 2 (1%) |
| Other trust | 14 (4%) | 13 (4%) |
| Ambulation score | | |
| Bed/chair bound | 8 (2%) | 5 (1%) |
| Presence 1+ | 12 (3%) | 7 (2%) |
| 1 person | 25 (6%) | 20 (5%) |
| Unable 50 m | 145 (37%) | 138 (38%) |
| Able 50 m | 200 (51%) | 197 (54%) |
| | (n = 390) | (n = 367) |
| Time in A&E (h) | | |
| Mean (SD) | 4.9 (3.2) | 5.6 (2.4) |
| Minimum–maximum | 1.7–40.3 | 0–21.4 |
| | (n = 348) | (n = 328) |
| History of dementia | 79 (20%) | 85 (23%) |
| | (n = 395) | (n = 371) |
| Confused on admission | 124 (32%) | 125 (34%) |
| | (n = 394) | (n = 371) |
| Type of fracture | | |
| Intra-capsular | 192 (54%) | 173 (52%) |
| Extra-capsular | 165 (46%) | 161 (48%) |
| | (n = 357) | (n = 334) |
| Operation more than 48 h after ward admission | 183 (52%) | 205 (64%) |
| | (n = 354) | (n = 323) |
| Reason for delayed operation | | |
| Medical | 61 (35%) | 74 (43%) |
| Organisational | 66 (38%) | 72 (42%) |
| Both | 45 (26%) | 27 (16%) |
| | (n = 172) | (n = 173) |
| Type of operation | | |
| Thompson's hemiarthroplasty | 101 (27%) | 87 (24%) |
| Austin-Moore hemiarthroplasty | 69 (19%) | 18 (5%) |
| Dynamic screw | 162 (43%) | 165 (46%) |
| Asnis screws | 38 (11%) | 38 (11%) |
| Bipolar hemiarthroplasty | 3 (1%) | 48 (14%) |
| | (n = 373) | (n = 356) |
| Grade of surgeon | | |
| Consultant | 46 (12%) | 110 (32%) |
| SPR | 318 (86%) | 220 (63%) |
| SHO | 6 (2%) | 18 (5%) |
| | (n = 355) | (n = 348) |
| Grade of anaesthetist | | |
| Consultant | 120 (34%) | 175 (55%) |
| SPR | 99 (28%) | 52 (16%) |
| SHO | 133 (38%) | 81 (29%) |
| | (n = 352) | (n = 318) |

The distributions of the two quantitative variables in Table 1 are described by mean (SD) and range. The statistics being presented should be stated in the context of the table, here in the left hand column, and could differ across variables. If the same statistics are presented for all the variables in a table they can be indicated in the column headings or title. From the mean (SD) and range in each phase, we can see that the age distribution is reasonably symmetrical because the mean falls close to the centre of the range, and the mean ± 2 SD approach the limits of the range. The distribution of hours in A&E is skewed to the right but has been summarised with the same statistics. We can see that the distribution is skewed because the mean is much closer to the minimum than the maximum, and, if the normal range is calculated, the upper limit does not approach the high values in either phase. For these reasons, the normal range should not be interpreted as covering 95% of values. These conclusions from descriptive statistics alone can be verified in Figure 1 c and d.
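The reasoning in this paragraph, judging symmetry from the mean, SD and range alone, can be sketched as a small calculation. The function `describe_shape` and its output keys are hypothetical names introduced for illustration; the input values are those given in Table 1 for the 1998/99 phase.

```python
def describe_shape(mean, sd, minimum, maximum):
    """Locate the mean and mean ± 2 SD relative to the observed range."""
    lower, upper = mean - 2 * sd, mean + 2 * sd
    return {
        "normal_range": (round(lower, 1), round(upper, 1)),
        # 0.5 means the mean sits exactly in the middle of the range.
        "mean_position": round((mean - minimum) / (maximum - minimum), 2),
        # True when mean - 2 SD falls below the smallest observed value.
        "lower_below_minimum": lower < minimum,
        # Gap between mean + 2 SD and the largest observed value.
        "upper_short_of_maximum_by": round(maximum - upper, 1),
    }


age = describe_shape(83, 7, 65, 101)          # age on admission (years)
a_and_e = describe_shape(4.9, 3.2, 1.7, 40.3)  # time in A&E (h)

for name, result in (("age", age), ("A&E hours", a_and_e)):
    print(name, result)
```

For age the mean sits in the middle of the range and mean ± 2 SD comes close to both extremes, whereas for time in A&E the mean lies near the minimum and the upper limit falls far short of the maximum, which is the pattern the text describes for a right-skewed distribution.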

A choice arises when describing the distribution of an ordinal variable indicating ordered response categories, such as ambulation score in Table 1 . If the variable takes many distinct values, it can be treated as a quantitative variable and described in terms of centre and spread: ordinal variables often extend from the minimum to maximum possible values and in this case stating the range is not helpful. The meaning of the extremes should be stated in the context of the table to aid interpretation of results. Ordinal variables taking only a few distinct values are better treated as categorical variables and number (%) presented for each category. With only five categories the latter approach was adopted for ambulation score. Display as a categorical variable can be facilitated by combining infrequently occurring adjacent values.

In the original study, 3,182 of 5,719 admissions were screened and 2,286 were eligible. Six hundred and ten patients were not available on the hospital units when the RA [Research Assistant] arrived to complete the CAM [Confusion Assessment Method]; 1,582 patients assented to complete the CAM and 94 patients did not assent; the CAM was not completed for 728 patients because an informant was not available to confirm an acute change and fluctuation in mental status prior to admission or enrolment. The CAM was completed for 854 patients; 375 had delirium; 278 were enrolled. Of the 278 enrolled patients, 172 were discharged before the follow-up assessment, 73 were still hospitalised, 8 withdrew from the study and 27 died. Of the 172 discharged patients, delirium recovery status was determined for 152, 16 withdrew from the study after discharge and 4 died.

The authors start with the 5,719 admissions and report the numbers lost at successive stages, to arrive at the analysis sample of 152. It may be easier to assimilate the detail of the process from tabular or graphical presentation. The CONSORT guidelines [ 6 ] concerning the reporting of Randomised Controlled Trials (RCTs) recommend that progress of participants through a trial be presented as a flow chart, and an example is shown in Figure 2 . These charts are unequivocally helpful and are now presented in studies other than RCTs.
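As a rough illustration of why a tabular layout helps, the sketch below lays out some of the stages from the quoted passage as rows and checks the running totals. Only counts stated in the excerpt are used; the stage labels are paraphrased and the row structure is an assumption made for this example.

```python
# Each row: (reason for loss, number lost). Counts are taken from the excerpt.
eligible = 2286
losses_before_cam = [
    ("not available when the RA arrived", 610),
    ("did not assent", 94),
    ("no informant available", 728),
]

remaining = eligible
print(f"{'eligible':40s} {remaining:>6d}")
for reason, lost in losses_before_cam:
    remaining -= lost
    print(f"  minus {reason:32s} {remaining:>6d}")
cam_completed = remaining

# Later stage: discharged patients with recovery status determined.
discharged = 172
status_determined = discharged - 16 - 4  # withdrew after discharge, died
print(f"{'recovery status determined':40s} {status_determined:>6d}")
```

Printing the running total at each step makes it easy to confirm that the reported stage counts (854 with a completed CAM and 152 in the analysis sample) follow from the stated losses.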

![research paper with descriptive statistics Recruitment and attrition rates in an RCT of WiiActive exercises in community dwelling older adults [7].](https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/ageing/46/4/10.1093_ageing_afx054/5/m_afx054f02.jpeg?Expires=1727033620&Signature=aK4i~-fab4oGu4-JNk0zAb9UigzhvJzNKb96qaYpmIMq387xgnTQqBFkoMxSNJAkAEhA8uZh~90rWDfijnw-2wiEJBm7xbg4Lr0Cpa7kHicAe727GVdR~xbxFj3pvsSHxr40gDUBVUchhUKJgLse0k8J0MIkRmj8CpWAjRfFl3dn02XFB-7zSGVsS9Zm4pmmofX182Olz3jjVHFJXkCx5fGVaJWpcvkW80TXopDOCO6qSSI0eOI88nsO7mcnQBeyncNUOZZF91yHGIOPahni2Xmf0eIEMVxe94YMDZJw3GgfxbpxNHfUCigv3~0GApp4CCZTkhB1cHmWuF7nm8IixQ__&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA)

Figure 2. Recruitment and attrition rates in an RCT of WiiActive exercises in community dwelling older adults [ 7 ].

In addition to loss of participants at each time point as shown in a flow chart, information on specific variables may be missing even though a participant was available at the study point in question. Taking Table 1 as an example, there were 395 and 373 admissions during the 1998/99 and 2000/01 phases, respectively, as stated in the column headings, but the number of participants providing information varies considerably across the characteristics in the table. The reader should be able to establish how many cases contribute to each result, and to this end wherever the number available is lower than the total for the phase, it is stated below the descriptive statistics. For example, ambulation score was only available for 390 of the 395 participants in the 1998/99 phase. The percentages presented for ambulation score were calculated amongst cases where information was available, and this was done for all percentages in the table as indicated in the title. Alternatively, missing values in a categorical variable may be treated as a category in their own right. Where there is a large amount of missing information, this may be the best way of handling the situation, with percentages calculated from the total sample size as denominator. Stating the numbers available allows the reader to check this point. Only participants whose operation was delayed by more than 48 h could give a ‘reason for delayed operation’ in the table, and from the stated numbers (for example, 172 reasons for 183 delayed operations in 1998/99) the reader can see that a reason was not given for all delayed cases.
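The two ways of handling missing values can be compared directly. This is a minimal sketch using the 1998/99 ambulation score counts from Table 1; the variable names and the label "Missing" are assumptions made for illustration.

```python
# Ambulation score counts for the 1998/99 phase, as given in Table 1.
counts = {
    "Bed/chair bound": 8,
    "Presence 1+": 12,
    "1 person": 25,
    "Unable 50 m": 145,
    "Able 50 m": 200,
}
n_total = 395                    # admissions in the phase
n_valid = sum(counts.values())   # cases with a recorded score
n_missing = n_total - n_valid

# Option 1: percentages among non-missing values (the approach used in Table 1).
valid_pct = {k: round(100 * v / n_valid) for k, v in counts.items()}

# Option 2: treat missing as its own category, with the total as denominator.
with_missing = dict(counts, Missing=n_missing)
total_pct = {k: round(100 * v / n_total, 1) for k, v in with_missing.items()}

print("n available:", n_valid, "of", n_total)
print("% of non-missing:", valid_pct)
print("% of total, missing as a category:", total_pct)
```

With only 5 missing values the two sets of percentages barely differ, which is consistent with the text's suggestion that the second option matters mainly when a large amount of information is missing.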

In reports of RCTs, a table describing baseline characteristics in each trial arm demonstrates whether or not randomisation was successful in producing similar groups, as well as addressing the generalisability issue. If there are differences at baseline, comparison of outcome may be confounded. Statistical tests of significance should not be used to decide whether any differences need to be taken into account [ 8 , 9 ]. If the allocation was properly randomised, we know that any differences at baseline must be due to chance. The question facing the researcher is whether or not the magnitude of a difference at baseline is sufficient to confound comparison of outcome, and this depends on the strength of the relationship between the potential confounder and the outcome, as well as on the size of the baseline difference. A statistical test for baseline differences does not address this question; furthermore, there may be insufficient numbers available to detect quite large baseline differences. Statistics describing baseline characteristics are used to judge whether any differences are large enough to be important. If they are, additional analyses of outcome controlled for characteristics that differ at baseline may be performed. On the other hand, in non-randomised studies, groups are likely to differ, and statistical significance tests can be used to evaluate the evidence that the selection process of patients to each intervention results in different groups. In this situation a primary analysis controlled for many predictors of outcome would probably have been planned, and should be carried out irrespective of any differences, or lack of them, between study groups.

Describing the main features of the distribution of important characteristics of the participants included in a study is the first step in most papers reporting statistical analysis. It is important in establishing the generalisability of research findings, and in the context of comparative studies, flags the need for controlled analysis. Usually space constraints limit the presentation of many descriptive statistics, and in any case, too many statistics can confuse rather than enhance insight. The attrition of subjects during a study should also be described, so that study subjects can be related to the patient base from which they were drawn.

Descriptive statistics are used to describe the participants in a study so that readers can assess the generalisability of study findings to their own clinical practice.

They need to be appropriate to the variable or participant characteristic they aim to describe, and presented in a fashion that is easy for readers to understand.

When many patient characteristics are being described, the detail of the statistics used and number of participants contributing to analysis are best incorporated in tabular presentation.

The author would like to thank Dr Helen Roberts for kindly granting permission to use data from the care pathway study [ 4 ] to produce Figure 1 c and d.

None declared.

1. Pickering RM. Describing the subjects in a study. Palliat Med 2001; 15: 69–75.

2. Altman DG. Practical Statistics for Medical Research. London: Chapman & Hall, 1991.

3. Yeung P, Breheny M. Using the capability approach to understand the determinants of subjective well-being among community-dwelling older people in New Zealand. Age Ageing 2016; 45: 292–8.

4. Roberts HC, Pickering RM, Onslow E et al. The effectiveness of implementing a care pathway for femoral neck fracture in older people: a prospective controlled before and after study. Age Ageing 2004; 33: 178–84.

5. Cole MG, McCusker JM, Bailey R et al. Partial and no recovery from delirium after hospital discharge predict increased adverse events. Age Ageing 2017; 46: 90–5.

6. Schulz KF, Altman DG, Moher D, for the CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel-group randomised trials. BMJ 2010; 340: 698–702.

7. Kwok BC, Pua YH. Effects of WiiActive exercises on fear of falling and functional outcomes in community-dwelling older adults: a randomised control trial. Age Ageing 2016; 45: 621–28.

8. Assmann SF, Pocock SJ, Enos LE, Kasten LE. Subgroup analysis and other (mis)uses of baseline data in clinical trials. Lancet 2000; 355: 1064–9.

9. Altman DG. Comparability of randomized groups. Statistician 1985; 34: 125–36.

- Online ISSN 1468-2834

- Copyright © 2024 British Geriatrics Society

Descriptive Statistics for Summarising Data

- First Online: 15 May 2020

- Ray W. Cooksey

This chapter discusses and illustrates descriptive statistics . The purpose of the procedures and fundamental concepts reviewed in this chapter is quite straightforward: to facilitate the description and summarisation of data. By ‘describe’ we generally mean either the use of some pictorial or graphical representation of the data (e.g. a histogram, box plot, radar plot, stem-and-leaf display, icon plot or line graph) or the computation of an index or number designed to summarise a specific characteristic of a variable or measurement (e.g., frequency counts, measures of central tendency, variability, standard scores). Along the way, we explore the fundamental concepts of probability and the normal distribution. We seldom interpret individual data points or observations primarily because it is too difficult for the human brain to extract or identify the essential nature, patterns, or trends evident in the data, particularly if the sample is large. Rather we utilise procedures and measures which provide a general depiction of how the data are behaving. These statistical procedures are designed to identify or display specific patterns or trends in the data. What remains after their application is simply for us to interpret and tell the story.

- Descriptive statistics

- Multivariate graphs

- Frequencies

- Central tendency

- Variability

- Standard scores

- Exploratory data analysis

- Probability

- Normal distribution

The first broad category of statistics we discuss concerns descriptive statistics . The purpose of the procedures and fundamental concepts in this category is quite straightforward: to facilitate the description and summarisation of data. By ‘describe’ we generally mean either the use of some pictorial or graphical representation of the data or the computation of an index or number designed to summarise a specific characteristic of a variable or measurement.

We seldom interpret individual data points or observations primarily because it is too difficult for the human brain to extract or identify the essential nature, patterns, or trends evident in the data, particularly if the sample is large. Rather we utilise procedures and measures which provide a general depiction of how the data are behaving. These statistical procedures are designed to identify or display specific patterns or trends in the data. What remains after their application is simply for us to interpret and tell the story.

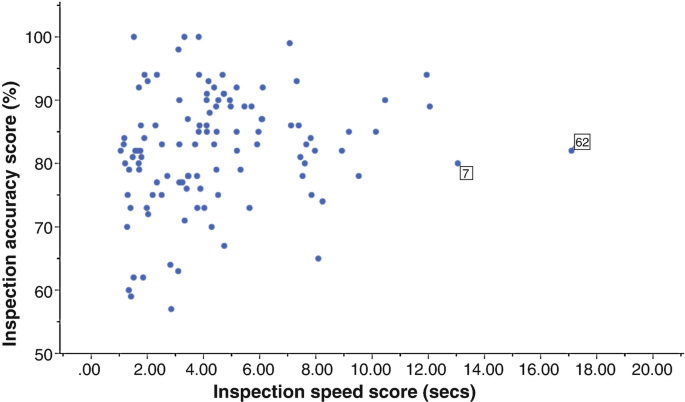

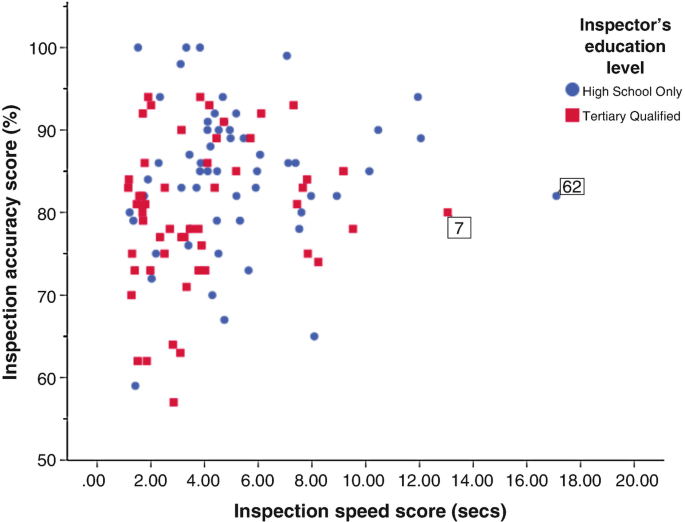

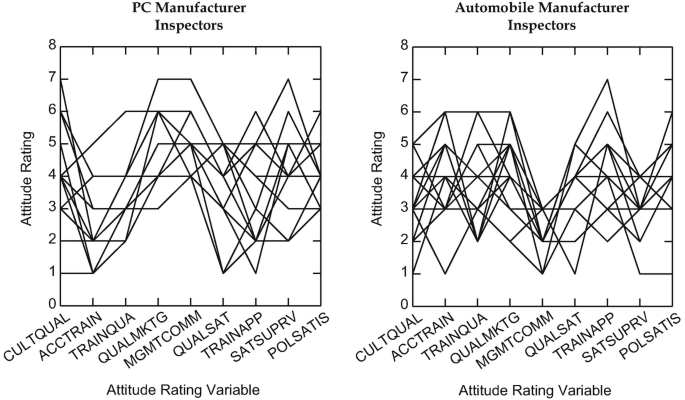

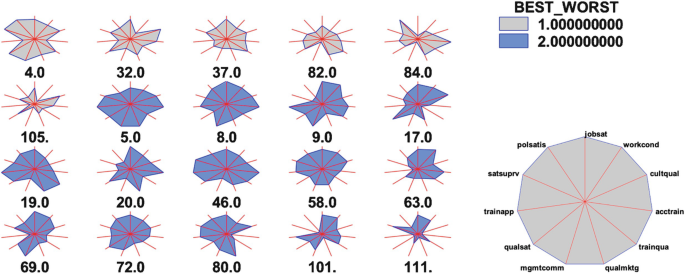

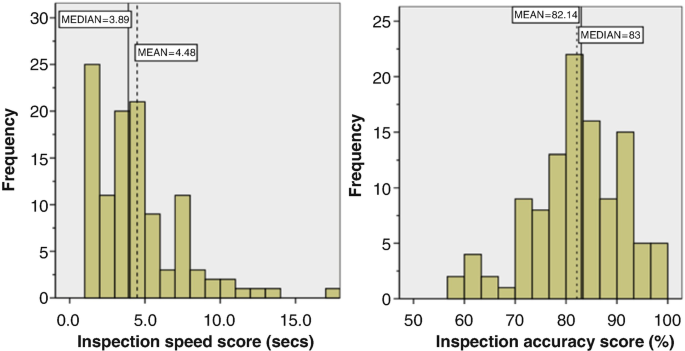

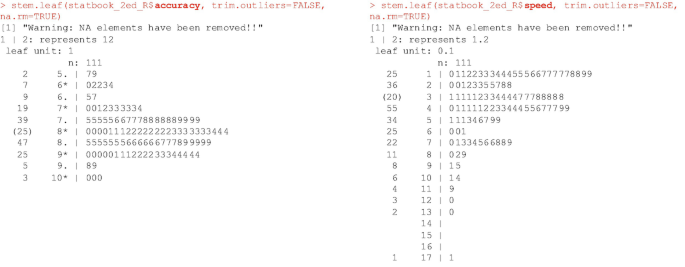

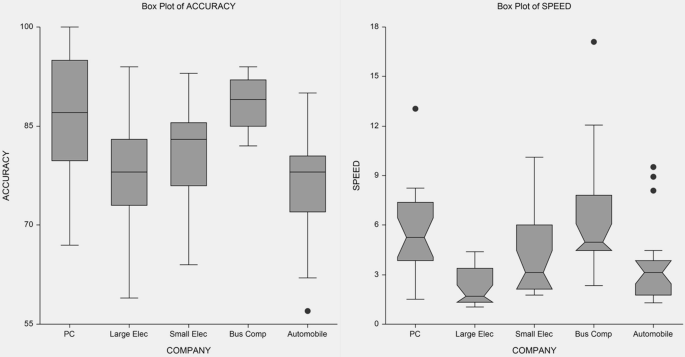

Reflect on the QCI research scenario and the associated data set discussed in Chap. 4 . Consider the following questions that Maree might wish to address with respect to decision accuracy and speed scores:

What was the typical level of accuracy and decision speed for inspectors in the sample? [see Procedure 5.4 – Assessing central tendency.]

What was the most common accuracy and speed score amongst the inspectors? [see Procedure 5.4 – Assessing central tendency.]

What was the range of accuracy and speed scores; the lowest and the highest scores? [see Procedure 5.5 – Assessing variability.]

How frequently were different levels of inspection accuracy and speed observed? What was the shape of the distribution of inspection accuracy and speed scores? [see Procedure 5.1 – Frequency tabulation, distributions & crosstabulation.]

What percentage of inspectors would have ‘failed’ to ‘make the cut’ assuming the industry standard for acceptable inspection accuracy and speed combined was set at 95%? [see Procedure 5.7 – Standard ( z ) scores.]

How variable were the inspectors in their accuracy and speed scores? Were all the accuracy and speed levels relatively close to each other in magnitude or were the scores widely spread out over the range of possible test outcomes? [see Procedure 5.5 – Assessing variability.]

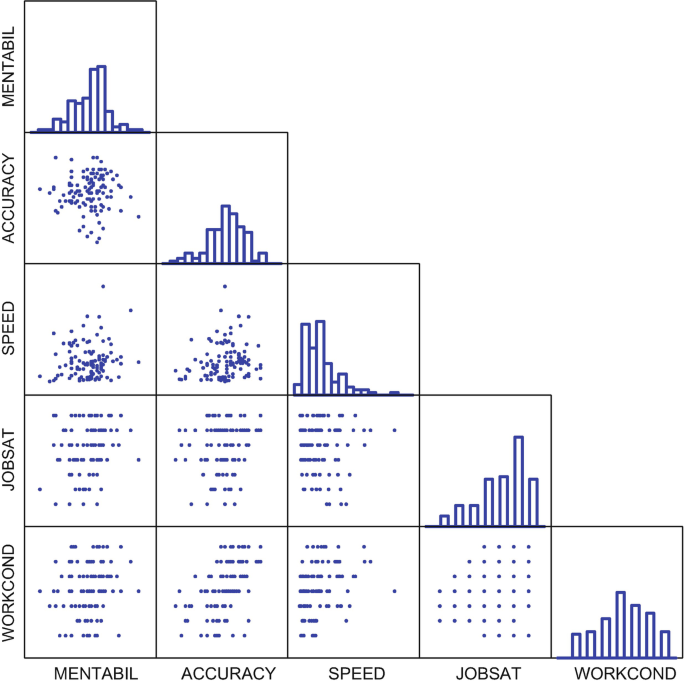

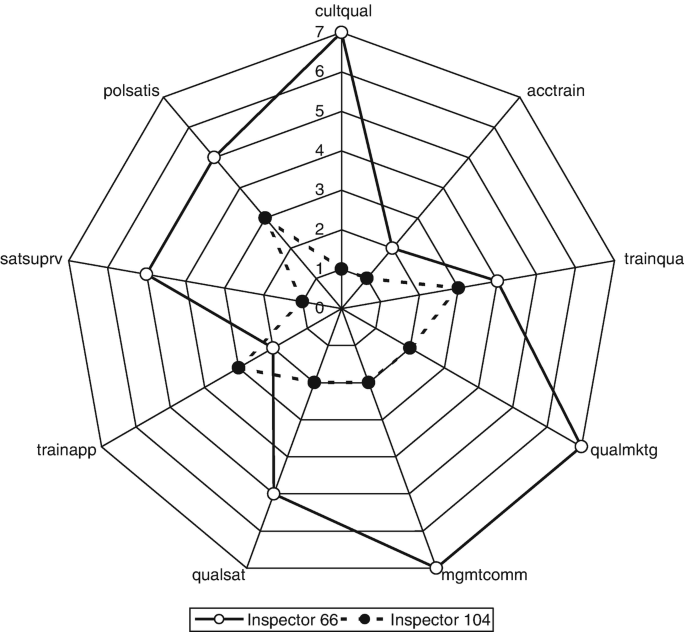

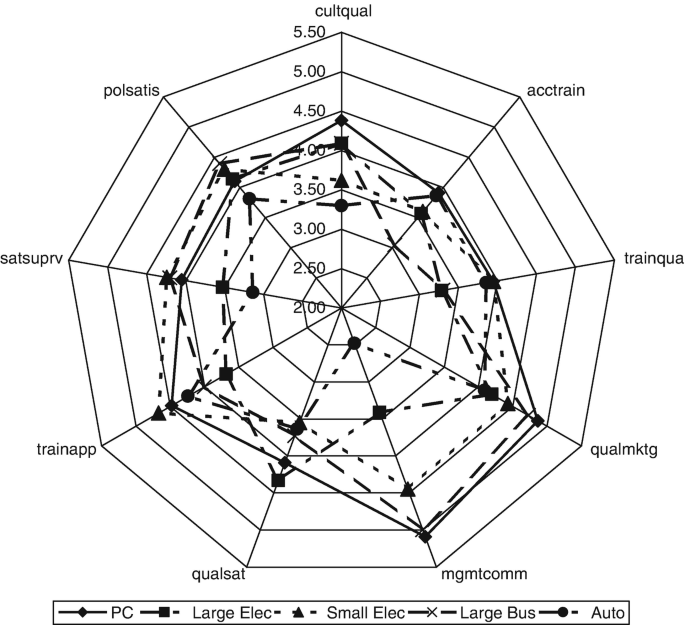

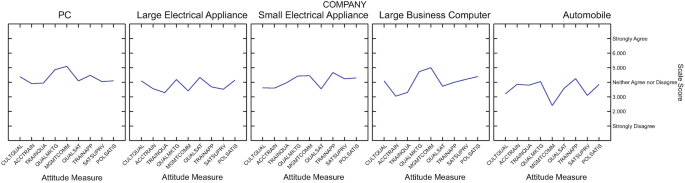

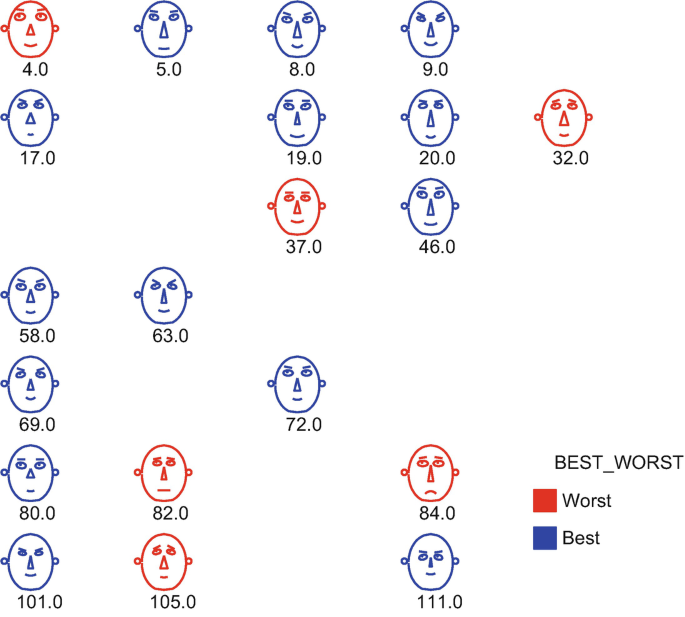

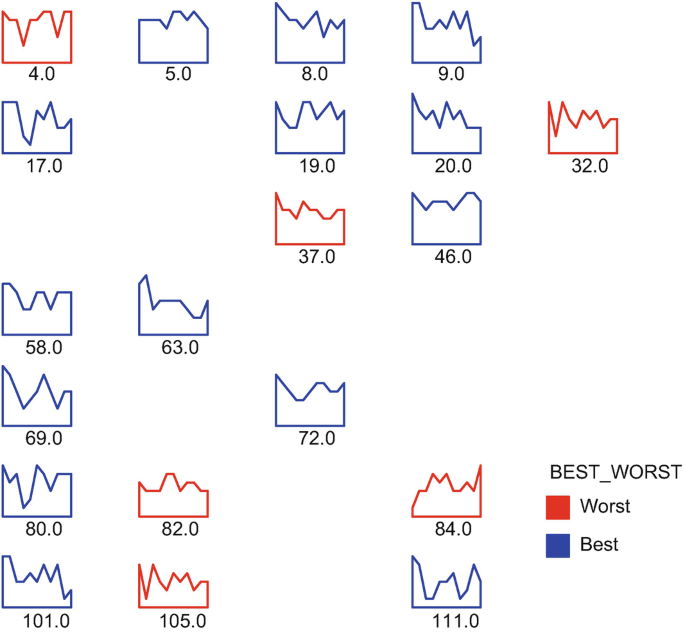

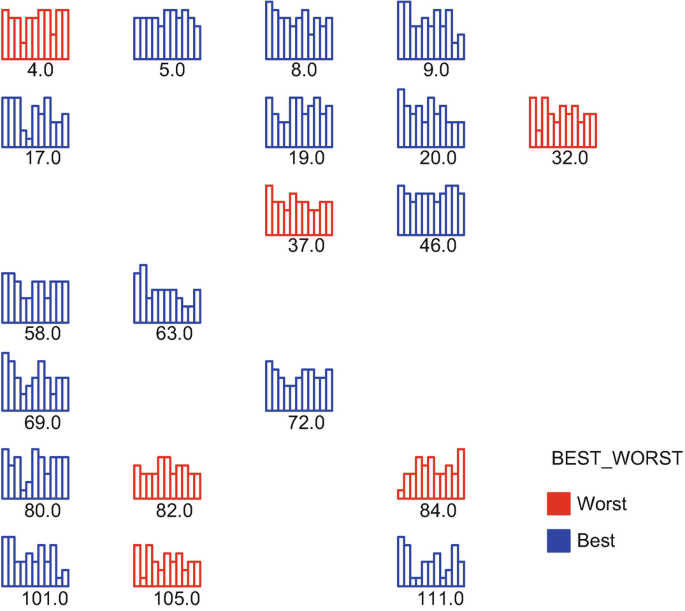

What patterns might be visually detected when looking at various QCI variables singly and together as a set? [see Procedure 5.2 – Graphical methods for displaying data, Procedure 5.3 – Multivariate graphs & displays, and Procedure 5.6 – Exploratory data analysis.]

This chapter includes discussions and illustrations of a number of procedures available for answering questions about data like those posed above. In addition, you will find discussions of two fundamental concepts, namely probability and the normal distribution; these concepts provide building blocks for Chaps. 6 and 7.

Procedure 5.1: Frequency Tabulation, Distributions & Crosstabulation

Univariate (crosstabulations are bivariate); descriptive.

To produce an efficient counting summary of a sample of data points for ease of interpretation.

Any level of measurement can be used for a variable summarised in frequency tabulations and crosstabulations.

Frequency Tabulation and Distributions

Frequency tabulation serves to provide a convenient counting summary for a set of data that facilitates interpretation of various aspects of those data. Basically, frequency tabulation occurs in two stages:

First, the scores in a set of data are rank ordered from the lowest value to the highest value.

Second, the number of times each specific score occurs in the sample is counted. This count records the frequency of occurrence for that specific data value.
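The two stages above can be sketched in a few lines of Python. The chapter itself uses SPSS; this is only an illustrative alternative, and the list of ratings is hypothetical rather than taken from the QCI data set.

```python
from collections import Counter

# Hypothetical 7-point job satisfaction ratings (not the QCI data).
scores = [5, 3, 7, 5, 6, 1, 5, 4, 6, 7]

# Stage 1: rank order the scores from lowest to highest.
ordered = sorted(scores)

# Stage 2: count how many times each specific score occurs.
freq = Counter(ordered)

print("Score  Frequency")
for value in sorted(freq):
    print(f"{value:>5}  {freq[value]:>9}")
```

Sorting is not strictly required for counting, but it mirrors the first stage described in the text and ensures the table is printed from the lowest score to the highest.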

Consider the overall job satisfaction variable, jobsat, from the QCI data scenario. Performing frequency tabulation across the 112 Quality Control Inspectors on this variable using the SPSS Frequencies procedure (Allen et al. 2019, ch. 3; George and Mallery 2019, ch. 6) produces the frequency tabulation shown in Table 5.1. Note that three of the inspectors in the sample did not provide a rating for jobsat, thereby producing three missing values (= 2.7% of the sample of 112) and leaving 109 inspectors with valid data for the analysis.

The display of frequency tabulation is often referred to as the frequency distribution for the sample of scores. For each value of a variable, the frequency of its occurrence in the sample of data is reported. It is possible to compute various percentages and percentile values from a frequency distribution.

Table 5.1 shows the ‘Percent’ or relative frequency of each score (the percentage of the 112 inspectors obtaining each score, including those inspectors who were missing scores, which SPSS labels as ‘System’ missing). Table 5.1 also shows the ‘Valid Percent’ which is computed only for those inspectors in the sample who gave a valid or non-missing response.

Finally, it is possible to add up the ‘Valid Percent’ values, starting at the low score end of the distribution, to form the cumulative distribution or ‘Cumulative Percent’ . A cumulative distribution is useful for finding percentiles which reflect what percentage of the sample scored at a specific value or below.
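The three percentage columns can be computed as follows. This is a sketch on hypothetical ratings in which `None` stands for a missing response; the column names follow the SPSS labels mentioned in the text, but the code is not SPSS output.

```python
from collections import Counter

# Hypothetical ratings; None marks a missing response (not the QCI data).
ratings = [1, 2, 2, 3, 3, 3, 4, 4, None, None]

valid = [r for r in ratings if r is not None]
freq = Counter(valid)

rows = []
cumulative = 0.0
for value in sorted(freq):
    percent = 100 * freq[value] / len(ratings)      # denominator includes missing
    valid_percent = 100 * freq[value] / len(valid)  # denominator excludes missing
    cumulative += valid_percent                     # running total from the low end
    rows.append((value, freq[value], percent, valid_percent, cumulative))

print("Value  Freq  Percent  Valid %  Cumulative %")
for value, count, percent, valid_percent, cum in rows:
    print(f"{value:>5}  {count:>4}  {percent:>7.1f}  {valid_percent:>7.1f}  {cum:>12.1f}")
```

Reading down the cumulative column gives percentiles directly: the row for a given score shows the percentage of valid cases scoring at that value or below.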

We can see in Table 5.1 that 4 of the 109 valid inspectors (a ‘Valid Percent’ of 3.7%) indicated the lowest possible level of job satisfaction, a value of 1 (Very Low), whereas 18 of the 109 valid inspectors (a ‘Valid Percent’ of 16.5%) indicated the highest possible level of job satisfaction, a value of 7 (Very High). The ‘Cumulative Percent’ number of 18.3 in the row for the job satisfaction score of 3 can be interpreted as “roughly 18% of the sample of inspectors reported a job satisfaction score of 3 or less”; that is, nearly a fifth of the sample expressed some degree of negative satisfaction with their job as a quality control inspector in their particular company.

If you have a large data set having many different scores for a particular variable, it may be more useful to tabulate frequencies on the basis of intervals of scores.

For the accuracy scores in the QCI database, you could count scores occurring in intervals such as ‘less than 75% accuracy’, ‘75% to less than 85% accuracy’, ‘85% to less than 95% accuracy’, and ‘95% accuracy or greater’, rather than counting the individual scores themselves. This would yield what is termed a ‘grouped’ frequency distribution since the data have been grouped into intervals or score classes. Producing such an analysis using SPSS would involve extra steps to create the new category or ‘grouping’ system for scores prior to conducting the frequency tabulation.
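A grouped frequency distribution with these four intervals might be produced as sketched below. The accuracy values are hypothetical, and the use of `bisect_right` to assign each score to an interval is one possible approach, not the SPSS procedure the chapter refers to.

```python
from bisect import bisect_right
from collections import Counter

# Interval boundaries and labels matching the four classes in the text.
edges = [75, 85, 95]
labels = ["< 75%", "75% to < 85%", "85% to < 95%", ">= 95%"]

# Hypothetical accuracy scores in percent (not the QCI data).
accuracy = [72, 75, 81, 84.9, 85, 90, 94, 95, 99, 100]

# bisect_right counts how many boundaries are <= the score, which is the
# index of the interval it belongs to (a score of exactly 75 lands in class 1).
grouped = Counter(labels[bisect_right(edges, score)] for score in accuracy)

for label in labels:
    print(f"{label:>14}: {grouped[label]}")
```

Because each lower boundary is inclusive and each upper boundary exclusive, every score falls into exactly one class.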

Crosstabulation

In a frequency crosstabulation, we count frequencies on the basis of two variables simultaneously rather than one; thus we have a bivariate situation.

For example, Maree might be interested in the number of male and female inspectors in the sample of 112 who obtained each jobsat score. Here there are two variables to consider: inspector’s gender and inspector’s jobsat score. Table 5.2 shows such a crosstabulation as compiled by the SPSS Crosstabs procedure (George and Mallery 2019, ch. 8). Note that inspectors who did not report a score for jobsat and/or gender have been omitted as missing values, leaving 106 valid inspectors for the analysis.

The crosstabulation shown in Table 5.2 gives a composite picture of the distribution of satisfaction levels for male inspectors and for female inspectors. If frequencies or ‘Counts’ are added across the gender categories, we obtain the numbers in the ‘Total’ column (the percentages or relative frequencies are also shown immediately below each count) for each discrete value of jobsat (note this column of statistics differs from that in Table 5.1 because the gender variable was missing for certain inspectors). By adding down each gender column, we obtain, in the bottom row labelled ‘Total’, the number of males and the number of females that comprised the sample of 106 valid inspectors.

The totals, either across the rows or down the columns of the crosstabulation, are termed the marginal distributions of the table. These marginal distributions are equivalent to frequency tabulations for each of the variables jobsat and gender. As with frequency tabulation, various percentage measures can be computed in a crosstabulation, including the percentage of the sample associated with a specific count within either a row (‘% within jobsat’) or a column (‘% within gender’). You can see in Table 5.2 that 18 inspectors indicated a job satisfaction level of 7 (Very High); of these 18 inspectors reported in the ‘Total’ column, 8 (44.4%) were male and 10 (55.6%) were female. The marginal distribution for gender in the ‘Total’ row shows that 57 inspectors (53.8% of the 106 valid inspectors) were male and 49 inspectors (46.2%) were female. Of the 57 male inspectors in the sample, 8 (14.0%) indicated a job satisfaction level of 7 (Very High). Furthermore, we could generate some additional interpretive information of value by adding the ‘% within gender’ values for job satisfaction levels of 5, 6 and 7 (i.e. differing degrees of positive job satisfaction). Here we would find that 68.4% (= 24.6% + 29.8% + 14.0%) of male inspectors indicated some degree of positive job satisfaction compared to 61.2% (= 10.2% + 30.6% + 20.4%) of female inspectors.

This helps to build a picture of the possible relationship between an inspector’s gender and their level of job satisfaction (a relationship that, as we will see later, can be quantified and tested using Procedure 6.2 and Procedure 7.1).

It should be noted that a crosstabulation table such as that shown in Table 5.2 is often referred to as a contingency table, about which more will be said later (see Procedure 7.1 and Procedure 7.18).
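The marginal totals and the two kinds of cell percentage discussed for Table 5.2 can be reproduced with a short Python sketch. The cell counts for jobsat scores of 5, 6 and 7 follow from the percentages quoted above; the counts for scores 1 to 4 are assumed values chosen only so that the column totals come to 57 and 49, and the function names are hypothetical.

```python
# Cell counts by jobsat score and gender. The counts for jobsat 5, 6 and 7 follow
# from the percentages quoted in the text; the counts for jobsat 1 to 4 are assumed.
cells = {
    1: {"male": 2, "female": 2},
    2: {"male": 3, "female": 3},
    3: {"male": 5, "female": 4},
    4: {"male": 8, "female": 10},
    5: {"male": 14, "female": 5},
    6: {"male": 17, "female": 15},
    7: {"male": 8, "female": 10},
}
genders = ["male", "female"]

# Marginal distributions: row totals (jobsat) and column totals (gender).
row_totals = {score: sum(row.values()) for score, row in cells.items()}
col_totals = {g: sum(row[g] for row in cells.values()) for g in genders}
n = sum(row_totals.values())


def pct_within_jobsat(score, gender):
    """Percentage of inspectors with this jobsat score who have this gender."""
    return 100 * cells[score][gender] / row_totals[score]


def pct_within_gender(score, gender):
    """Percentage of inspectors of this gender who gave this jobsat score."""
    return 100 * cells[score][gender] / col_totals[gender]


def pct_positive(gender):
    """Percentage of one gender reporting a jobsat score of 5, 6 or 7."""
    return 100 * sum(cells[s][gender] for s in (5, 6, 7)) / col_totals[gender]


print(f"valid n = {n}, gender totals = {col_totals}")
print(f"% within jobsat, score 7, male: {pct_within_jobsat(7, 'male'):.1f}")
print(f"% within gender, score 7, male: {pct_within_gender(7, 'male'):.1f}")
for g in genders:
    print(f"{g}: {pct_positive(g):.1f}% positive job satisfaction")
```

Note that `pct_positive` divides the summed counts by the column total rather than adding rounded percentages; for these data both routes give 68.4% and 61.2%.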

Frequency tabulation is useful for providing convenient data summaries which can aid in interpreting trends in a sample, particularly where the number of discrete values for a variable is relatively small. A cumulative percent distribution provides additional interpretive information about the relative positioning of specific scores within the overall distribution for the sample.

Crosstabulation permits the simultaneous examination of the distributions of values for two variables obtained from the same sample of observations. This examination can yield some useful information about the possible relationship between the two variables. More complex crosstabulations can also be done where the values of three or more variables are tracked in a single systematic summary. The use of frequency tabulation or crosstabulation in conjunction with various other statistical measures, such as measures of central tendency (see Procedure 5.4) and measures of variability (see Procedure 5.5), can provide a relatively complete descriptive summary of any data set.

Disadvantages

Frequency tabulations can get messy if interval or ratio-level measures are tabulated simply because of the large number of possible data values. Grouped frequency distributions really should be used in such cases. However, certain choices, such as the size of the score interval (group size), must be made, often arbitrarily, and such choices can affect the nature of the final frequency distribution.

Additionally, percentage measures have certain problems associated with them, most notably, the potential for their misinterpretation in small samples. One should be sure to know the sample size on which percentage measures are based in order to obtain an interpretive reference point for the actual percentage values.

For example

In a sample of 10 individuals, 20% represents only two individuals whereas in a sample of 300 individuals, 20% represents 60 individuals. If all that is reported is the 20%, then the mental inference drawn by readers is likely to be that a sizeable number of individuals had a score or scores of a particular value—but what is ‘sizeable’ depends upon the total number of observations on which the percentage is based.
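One simple safeguard is to report the underlying count and sample size alongside every percentage. The helper below is a minimal sketch of that habit; the function name `describe_percentage` is hypothetical.

```python
def describe_percentage(percent, n):
    """Report a percentage together with the count and sample size behind it."""
    count = round(n * percent / 100)
    return f"{percent:g}% ({count} of {n})"


print(describe_percentage(20, 10))   # 20% (2 of 10)
print(describe_percentage(20, 300))  # 20% (60 of 300)
```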

Where Is This Procedure Useful?

Frequency tabulation and crosstabulation are very commonly applied procedures used to summarise information from questionnaires, both in terms of tabulating various demographic characteristics (e.g. gender, age, education level, occupation) and in terms of actual responses to questions (e.g. numbers responding ‘yes’ or ‘no’ to a particular question). They can be particularly useful in helping to build up the data screening and demographic stories discussed in Chap. 4. Categorical data from observational studies can also be analysed with this technique (e.g. the number of times Suzy talks to Frank, to Billy, and to John in a study of children’s social interactions).

Certain types of experimental research designs may also be amenable to analysis by crosstabulation with a view to drawing inferences about distribution differences across the sets of categories for the two variables being tracked.

You could employ crosstabulation in conjunction with the tests described in Procedure 7.1 to see if two different styles of advertising campaign differentially affect the product purchasing patterns of male and female consumers.

In the QCI database, Maree could employ crosstabulation to help her answer the question “do different types of electronic manufacturing firms (company) differ in terms of their tendency to employ male versus female quality control inspectors (gender)?”

Software Procedures

Application | Procedures |
|---|---|
SPSS | or . and select the variable(s) you wish to analyse; for the procedure, hitting the ‘ ’ button will allow you to choose various types of statistics and percentages to show in each cell of the table. |
NCSS | or and select the variable(s) you wish to analyse. |
SYSTAT | or ➔ and select the variable(s) you wish to analyse and choose the optional statistics you wish to see. |
STATGRAPHICS | or and select the variable(s) you wish to analyse; hit ‘ ’ and when the ‘Tables and Graphs’ window opens, choose the Tables and Graphs you wish to see. |
Commander | or and select the variable(s) you wish to analyse and choose the optional statistics you wish to see. |

Procedure 5.2: Graphical Methods for Displaying Data

Univariate (scatterplots are bivariate); descriptive.

To visually summarise characteristics of a data sample for ease of interpretation.

Any level of measurement can be accommodated by these graphical methods. Scatterplots are generally used for interval or ratio-level data.

Graphical methods for displaying data include bar and pie charts, histograms and frequency polygons, line graphs and scatterplots. It is important to note that what is presented here is a small but representative sampling of the types of simple graphs one can produce to summarise and display trends in data. Generally speaking, SPSS offers the easiest facility for producing and editing graphs, but with a rather limited range of styles and types. SYSTAT, STATGRAPHICS and NCSS offer a much wider range of graphs (including graphs unique to each package), but with the drawback that it takes somewhat more effort to get the graphs in exactly the form you want.

Bar and Pie Charts

These two types of graphs are useful for summarising the frequency of occurrence of various values (or ranges of values) where the data are categorical (nominal or ordinal level of measurement).

A bar chart uses vertical and horizontal axes to summarise the data. The vertical axis is used to represent frequency (number) of occurrence or the relative frequency (percentage) of occurrence; the horizontal axis is used to indicate the data categories of interest.

A pie chart gives a simpler visual representation of category frequencies by cutting a circular plot into wedges or slices whose sizes are proportional to the relative frequency (percentage) of occurrence of specific data categories. Some pie charts can have one or more slices emphasised by ‘exploding’ them out from the rest of the pie.
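The proportionality behind both chart types can be made concrete with a small Python sketch. It converts category counts to relative frequencies, derives the angle of each pie wedge, and prints a rough text bar chart (drawn horizontally for convenience, with bar length standing in for bar height). The category labels and counts are invented for illustration and do not come from the QCI database.

```python
# Hypothetical category counts; the labels and numbers are assumed.
category_counts = {
    "Firm type A": 12,
    "Firm type B": 9,
    "Firm type C": 11,
    "Firm type D": 6,
    "Firm type E": 10,
}

total = sum(category_counts.values())

# Relative frequency (percentage) of each category.
percentages = {name: 100 * c / total for name, c in category_counts.items()}

# A pie wedge's angle is the same proportion of the full 360 degrees.
angles = {name: 360 * c / total for name, c in category_counts.items()}

for name in category_counts:
    bar = "#" * round(percentages[name])  # one '#' per percentage point
    print(f"{name:<12} {percentages[name]:5.1f}%  {angles[name]:5.1f} deg  {bar}")

# The smallest category is the natural candidate for an 'exploded' slice.
smallest = min(angles, key=angles.get)
print("smallest wedge:", smallest)
```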

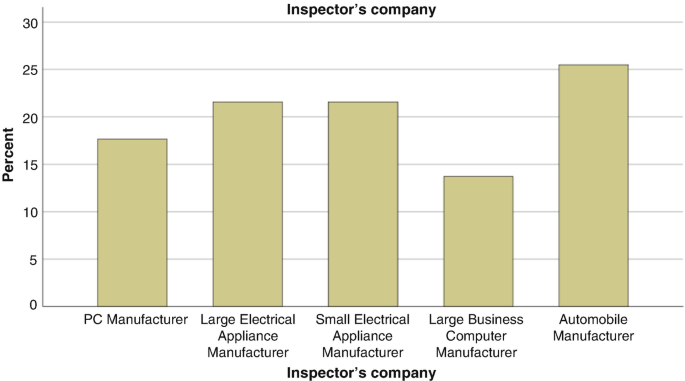

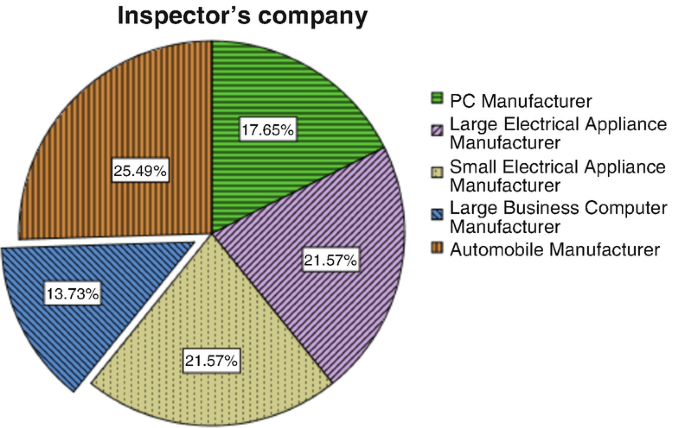

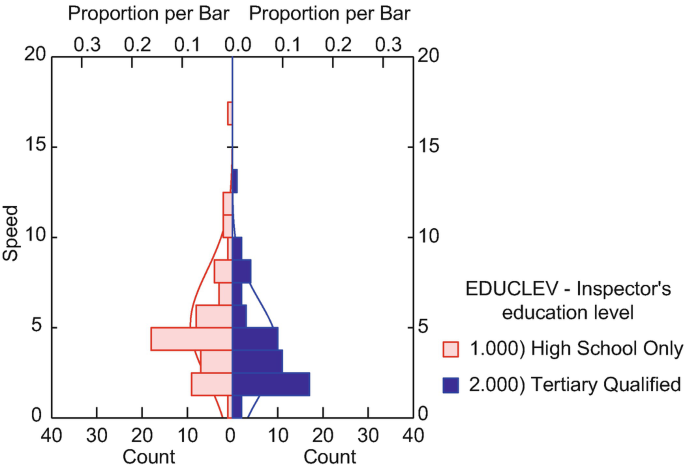

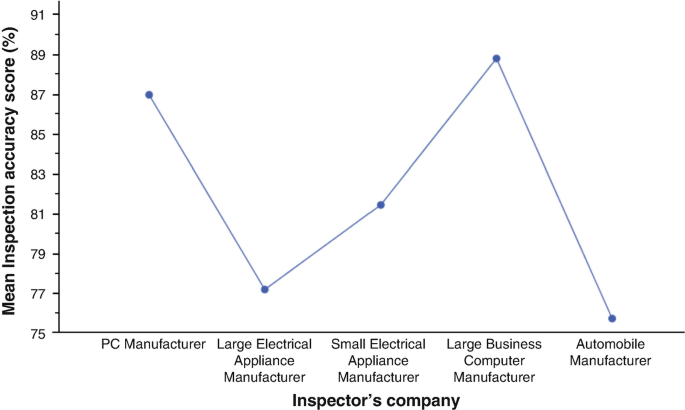

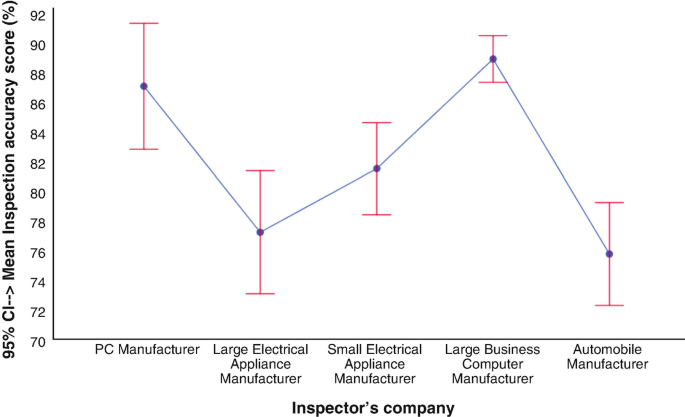

Consider the company variable from the QCI database. This variable depicts the types of manufacturing firms that the quality control inspectors worked for. Figure 5.1 illustrates a bar chart summarising the percentage of female inspectors in the sample coming from each type of firm. Figure 5.2 shows a pie chart representation of the same data, with an ‘exploded slice’ highlighting the percentage of female inspectors in the sample who worked for large business computer manufacturers – the lowest percentage of the five types of companies. Both graphs were produced using SPSS.

Bar chart: Percentage of female inspectors

Pie chart: Percentage of female inspectors

The pie chart was modified with an option to show the actual percentage along with the label for each category. The bar chart shows that computer manufacturing firms have relatively fewer female inspectors compared to the automotive and electrical appliance (large and small) firms. This trend is less clear from the pie chart, which suggests that pie charts may be less visually interpretable when the data categories occur with rather similar frequencies. However, the ‘exploded slice’ option can help interpretation in some circumstances.

Certain software programs, such as SPSS, STATGRAPHICS, NCSS and Microsoft Excel, offer the option of generating 3-dimensional bar charts and pie charts and incorporating other ‘bells and whistles’ that can potentially add visual richness to the graphic representation of the data. However, you should generally be careful with these fancier options as they can produce distortions and create ambiguities in interpretation (e.g. see discussions in Jacoby 1997; Smithson 2000; Wilkinson 2009). Such distortions and ambiguities could ultimately end up providing misinformation to researchers as well as to those who read their research.

Histograms and Frequency Polygons

These two types of graphs are useful for summarising the frequency of occurrence of various values (or ranges of values) where the data are essentially continuous (interval or ratio level of measurement) in nature. Both histograms and frequency polygons use vertical and horizontal axes to summarise the data. The vertical axis is used to represent the frequency (number) of occurrence or the relative frequency (percentage) of occurrences; the horizontal axis is used for the data values or ranges of values of interest. The histogram uses bars of varying heights to depict frequency; the frequency polygon uses lines and points.

There is a visual difference between a histogram and a bar chart: the bar chart uses bars that do not physically touch, signifying the discrete and categorical nature of the data, whereas the bars in a histogram physically touch to signal the potentially continuous nature of the data.
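The numbers that sit behind both displays are the same: a count of scores in each interval. The sketch below, which assumes equal-width intervals, computes those counts for a histogram and then the (midpoint, frequency) pairs that a frequency polygon would join with lines. The speed values are invented for illustration, and the function names `histogram` and `polygon_points` are hypothetical.

```python
# Hypothetical speed scores; not taken from the QCI database.
speed = [1.2, 1.9, 2.4, 2.8, 3.1, 3.3, 3.7, 4.0, 4.4, 4.6, 5.2, 5.9, 6.5, 7.8, 9.4]


def histogram(values, low, width, n_bins):
    """Count values in n_bins equal-width intervals [low + i*width, low + (i+1)*width)."""
    counts = [0] * n_bins
    for v in values:
        i = int((v - low) // width)
        if 0 <= i < n_bins:  # values outside the covered range are ignored
            counts[i] += 1
    return counts


def polygon_points(counts, low, width):
    """Frequency polygon vertices: (interval midpoint, frequency) for each interval."""
    return [(low + (i + 0.5) * width, c) for i, c in enumerate(counts)]


bin_counts = histogram(speed, low=0, width=2, n_bins=5)
points = polygon_points(bin_counts, low=0, width=2)

for (midpoint, count) in points:
    lower, upper = midpoint - 1, midpoint + 1
    print(f"[{lower:.0f}, {upper:.0f})  midpoint {midpoint:.0f}  {'#' * count}")
```

Because adjacent intervals share a boundary, the bars of the corresponding histogram touch, which is the visual cue described above.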

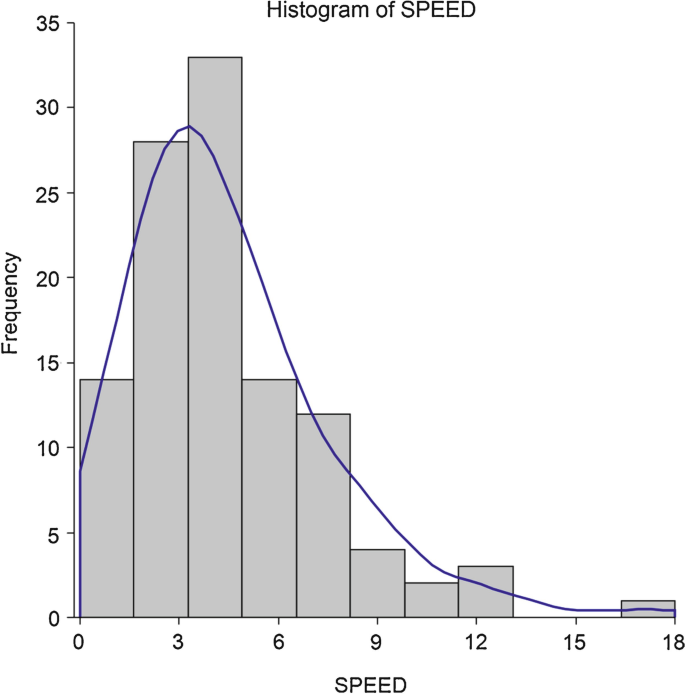

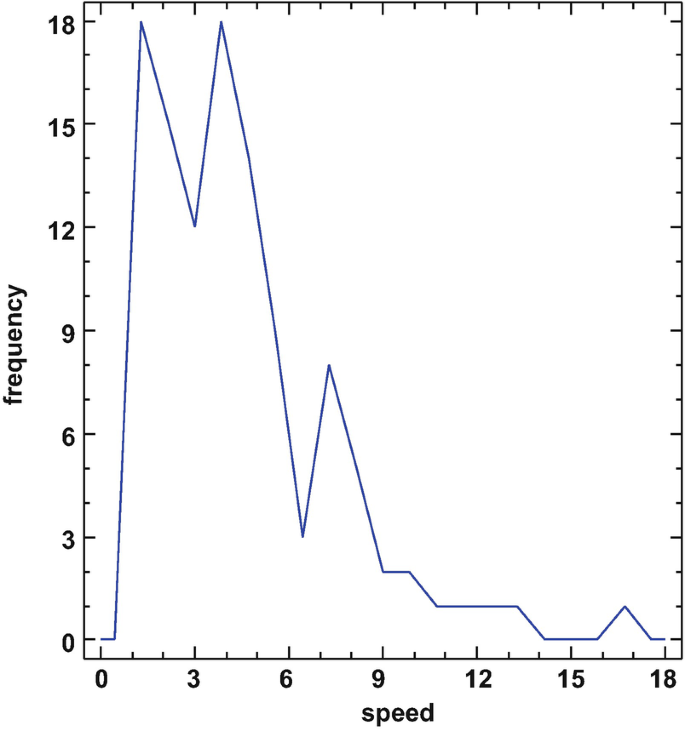

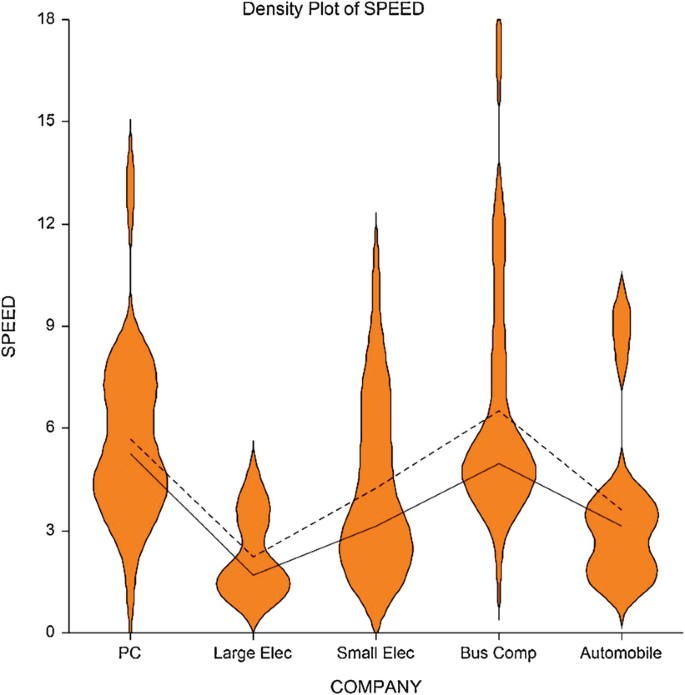

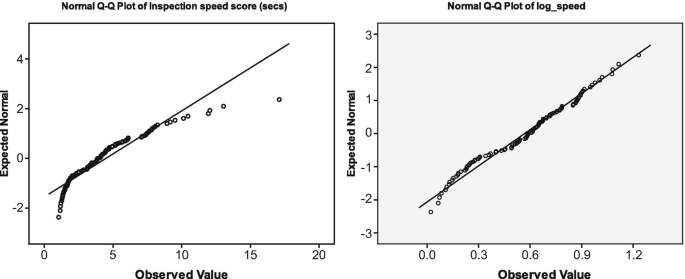

Suppose Maree wanted to graphically summarise the distribution of speed scores for the 112 inspectors in the QCI database. Figure 5.3 (produced using NCSS) illustrates a histogram representation of this variable. Figure 5.3 also illustrates another representational device called the ‘density plot’ (the solid tracing line overlaying the histogram) which gives a smoothed impression of the overall shape of the distribution of speed scores. Figure 5.4 (produced using STATGRAPHICS) illustrates the frequency polygon representation for the same data.

Histogram of the speed variable (with density plot overlaid)

Frequency polygon plot of the speed variable