
Descriptive Research in Psychology

Sometimes you need to dig deeper than the pure statistics

John Loeppky is a freelance journalist based in Regina, Saskatchewan, Canada, who has written about disability and health for outlets of all kinds.



Descriptive research is one of the key tools in any psychology researcher’s toolbox for creating and leading a project that is both equitable and effective. Because psychology, as a field, loves definitions, let’s start with one. The University of Minnesota’s Introduction to Psychology defines this type of research as one that is “...designed to provide a snapshot of the current state of affairs.”

That's pretty broad, so what does it mean in practice? Heather Derry-Vick, PhD, an assistant professor in psychiatry at Hackensack Meridian School of Medicine, helps put it into perspective. “Descriptive research really focuses on defining, understanding, and measuring a phenomenon or an experience,” she says. “Not trying to change a person's experience or outcome, or even really looking at the mechanisms for why that might be happening, but more so describing an experience or a process as it unfolds naturally.”

Types of Descriptive Research and the Methods Used

Within the descriptive research methodology there are multiple types, including the following.

Descriptive Survey Research

This involves going beyond a typical tool like a Likert scale, where you place your response to a prompt on a fixed scale, often from one to five. Scales like this can fall short, particularly when studying an experience such as pain.

When that's the case, using a descriptive methodology can help dig deeper into how a person is thinking, feeling, and acting rather than simply quantifying it in a way that might be unclear or confusing.

Descriptive Observational Research

Think of observational research as an ethically focused version of people-watching. One example would be watching children's patterns of play on a playground, perhaps when looking at a concept like risky play or observing social behaviors between children of different ages.

Descriptive Case Study Research

A descriptive approach to a case study is akin to a biography: it homes in on the experiences of one person or a small group to draw out larger themes. We most commonly see descriptive case studies when those in the psychology field use past clients as examples to illustrate a point.

Correlational Descriptive Research

While descriptive research is often about the here and now, this form of the methodology allows researchers to draw connections between groups of people. As an example from her own research, Derry-Vick says she uses this method to identify how gender might play a role in cancer scan anxiety, also known as “scanxiety.”

Dr. Derry-Vick's research uses surveys and interviews to get a sense of how cancer patients are feeling and what they are experiencing both in the course of their treatment and in the lead-up to their next scan, which can be a significant source of stress.

David Marlon, PsyD, MBA, who works as a clinician and as CEO at Vegas Stronger, and whose research focused on leadership styles at community-based clinics, says that using descriptive research allowed him to get beyond the numbers.

In his case, that meant looking past data points like how many unhoused people found stable housing over a certain period, or how many people became drug-free, to identify the reasons for those changes.

“Those [data points] are some practical, quantitative tools that are helpful,” he says. “But when I question them on how safe they feel, when I question them on the depth of the bond or the therapeutic alliance, when I talk to them about their processing of traumas, wellbeing...these are things that don't really fall onto a yes, no, or even on a Likert scale.”

For the portion of his thesis that was focused on descriptive research, Marlon used semi-structured interviews to look at the how and the why of transformational leadership and its impact on clinics’ clients and staff.

Advantages & Limitations of Descriptive Research

Descriptive research centers the research participants, gives a clear picture of what is happening to a person at a particular moment, and offers nuanced insight into how a situation is perceived by the very person affected. But it does have drawbacks. Derry-Vick says it’s important to keep in mind that just because descriptive research tells us something is happening doesn’t mean it necessarily leads us to the resolution of a given problem.

“I think that, by design, the descriptive research might not tell you why a phenomenon is happening,” she says. “So it might tell you, very well, how often it's happening, or what the levels are, or help you understand it in depth. But that may or may not always tell you information about the causes or mechanisms for why something is happening.”

Another limitation she identifies is that it also can’t tell you, on its own, whether a particular treatment pathway is having the desired effect.

“Descriptive research in and of itself can't really tell you whether a specific approach is going to be helpful until you take in a different approach to actually test it.”

Marlon, who believes in a multidisciplinary approach, says that in his subfield, addictions, descriptive research has its limits but helps readers go beyond preconceived notions of what effective addictions treatment looks and feels like. “If we talked to and interviewed and got descriptive information from the clinicians and the clients, a much more precise picture would be painted, showing the need for a client's specific multidisciplinary approach augmented with a variety of modalities,” he says. “If you tried to look at my discipline in a pure quantitative approach, it wouldn't begin to tell the real story.”

Best Practices for Conducting Descriptive Research

Because you’re controlling far fewer variables than in other forms of research, it’s important to consider whether the people you are describing, your study participants, should be informed that they are part of a study.

For example, if you’re observing and describing who is buying what in a grocery store to identify patterns, then you might not need to identify yourself.

However, if you’re asking people about their fear of a certain treatment, or how their marginalized identities affect their mental health in a particular way, there is far more pressure to think deeply about how you, as the researcher, are connected to the people you are researching.

Many descriptive research projects use interviews to gather data, so they also carry the ethical and practical concerns that come with interviewing. Thankfully, there are plenty of guides from established researchers on how best to conduct interviews and formulate surveys.

While descriptive research has its limits, it is commonly used by researchers to get a clear vantage point on what is happening in a given situation.

Tools like surveys, interviews, and observation are often employed to dive deeper into a given issue and really highlight the human element in psychological research. At its core, descriptive research is rooted in a collaborative style that allows deeper insights when used effectively.

University of Minnesota. Introduction to Psychology.



Descriptive Methods in Psychology: Unveiling Research Techniques and Applications

From the unspoken depths of the human mind to the observable behaviors that shape our world, descriptive methods in psychology unveil the intricate tapestry of the human experience. These methods serve as the bedrock of psychological research, offering a window into the complexities of human behavior, cognition, and emotion. But what exactly are descriptive methods, and why are they so crucial to our understanding of the human psyche?

At its core, descriptive methods in psychology encompass a range of techniques used to systematically observe, document, and analyze human behavior and mental processes. Unlike their experimental counterparts, which manipulate variables to establish cause-and-effect relationships, descriptive methods aim to paint a comprehensive picture of phenomena as they naturally occur.

Imagine, if you will, a curious psychologist perched on a park bench, notebook in hand, observing the ebb and flow of human interactions around them. This scene captures the essence of descriptive research – a meticulous effort to capture the nuances of human behavior in its natural habitat.

The importance of these methods in psychological research cannot be overstated. They provide the foundation upon which theories are built, hypotheses are formed, and interventions are designed. By offering rich, detailed accounts of human experiences, descriptive methods allow researchers to identify patterns, generate new ideas, and formulate questions that drive the field forward.

But how do descriptive methods differ from their experimental cousins? Picture two chefs in a kitchen. The experimental chef carefully measures ingredients, adjusts cooking temperatures, and controls every variable to create a specific dish. The descriptive chef, on the other hand, observes the natural cooking process, taking detailed notes on the ingredients used, the techniques employed, and the resulting flavors – without interfering with the process itself.

Types of Descriptive Methods in Psychology: A Smorgasbord of Techniques

Just as a master chef has a variety of tools at their disposal, psychologists employ a diverse array of descriptive methods to explore the human mind. Let’s take a culinary tour through these techniques, shall we?

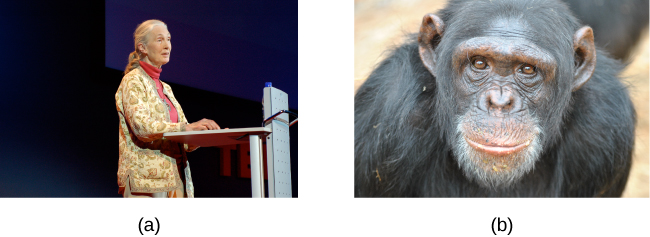

First on our menu is the observational method. Like a food critic savoring every bite, researchers using this approach carefully observe and record behavior in natural settings. This could involve watching children interact on a playground, studying facial expressions during conversations, or even observing online behavior in social media interactions.

Next, we have case studies, the psychological equivalent of a deep dive into a single, exquisite dish. These in-depth investigations focus on individual subjects or small groups, providing rich, detailed accounts of unique psychological phenomena. Think of the famous case of Phineas Gage, whose personality reportedly changed dramatically after an iron tamping rod was driven through his skull in an accident, a case that shaped early thinking about the frontal lobe’s role in personality.

Moving on to surveys and questionnaires, we find ourselves at the buffet of psychological research. These methods allow researchers to gather large amounts of data from diverse populations, offering a broad view of attitudes, beliefs, and behaviors. It’s like asking hundreds of diners about their favorite dishes to understand food preferences on a grand scale.

Archival research, our next course, involves digging into existing records and documents. It’s akin to a food historian poring over ancient cookbooks and menus to understand culinary trends of the past. Psychologists might examine school records, medical files, or historical documents to glean insights into human behavior and societal changes over time.

Last but not least, we have content analysis – the process of systematically analyzing written, spoken, or visual communication. Imagine a culinary critic dissecting food reviews to understand trends in gastronomy. Similarly, psychologists might analyze social media posts, news articles, or therapy transcripts to identify patterns in language use, emotional expression, or cultural attitudes.

Observational Methods: A Closer Look at the Art of Watching

Let’s zoom in on observational methods, shall we? These techniques are the binoculars through which psychologists view the wild safari of human behavior. Observational methods in psychology come in various flavors, each offering a unique perspective on the human experience.

Naturalistic observation is the vanilla ice cream of observational methods – simple, classic, and incredibly versatile. Here, researchers observe behavior in its natural environment without any interference. Picture a psychologist sitting in a busy café, discreetly noting how people interact, their body language, and their coffee-ordering habits. It’s like being a fly on the wall, but with a notepad and a keen eye for detail.

Participant observation, on the other hand, is more like a spicy curry – it requires the researcher to immerse themselves in the environment they’re studying. Imagine an anthropologist living with a remote tribe to understand their customs and beliefs. In psychology, this might involve a researcher joining a support group to study group dynamics or working in a daycare to observe child development up close.

Structured observation is the precise soufflé of observational methods. It involves carefully planned observations with predetermined categories of behavior to record. Think of a researcher in a classroom, ticking boxes on a checklist every time a student raises their hand or speaks out of turn. It’s systematic, it’s organized, and it produces quantifiable data that can be statistically analyzed.
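To make the checklist idea concrete, here is a minimal Python sketch of how coded events from one structured observation session might be tallied. The behavior categories, the student IDs, the event log, and the function name `tally_observations` are all hypothetical, chosen only to show how predetermined categories turn watching into countable data.

```python
# Predetermined coding categories for a hypothetical classroom checklist.
# In structured observation these are fixed *before* observing begins.
CATEGORIES = ("raises_hand", "speaks_out_of_turn", "off_task")


def tally_observations(events, categories=CATEGORIES):
    """Count coded events per category.

    `events` is a list of (student_id, category) tuples recorded by the
    observer. Codes outside the predetermined scheme raise an error instead
    of being silently counted.
    """
    counts = {category: 0 for category in categories}
    for student_id, category in events:
        if category not in counts:
            raise ValueError(f"unknown code {category!r} for student {student_id}")
        counts[category] += 1
    return counts


# Hypothetical log from one observation session.
session = [
    ("s1", "raises_hand"),
    ("s2", "speaks_out_of_turn"),
    ("s1", "raises_hand"),
    ("s3", "off_task"),
    ("s2", "raises_hand"),
]

print(tally_observations(session))
# {'raises_hand': 3, 'speaks_out_of_turn': 1, 'off_task': 1}
```

Because the categories are fixed in advance, counts from different sessions or different observers can be compared directly, which is what makes this kind of observation suitable for statistical analysis.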

Now, like any good recipe, observational methods have their strengths and weaknesses. On the plus side, they offer a window into real-world behavior, capturing the nuances and complexities that might be lost in a controlled laboratory setting. They’re particularly useful for studying behaviors that would be unethical or impractical to manipulate experimentally.

However, observational methods aren’t without their limitations. Observer bias can creep in, like a sneaky pinch of salt that alters the entire flavor of a dish. Researchers might unconsciously focus on behaviors that confirm their hypotheses, overlooking contradictory evidence. Moreover, the mere presence of an observer can alter behavior – much like how you might eat more politely when dining with your in-laws.

Despite these challenges, observational methods remain a crucial ingredient in the recipe of psychological research. They provide the raw, unfiltered data that forms the basis for theories and hypotheses, serving as a springboard for more focused, experimental studies.

Case Studies: Diving Deep into the Human Psyche

Ah, case studies – the psychological equivalent of a gourmet tasting menu. These in-depth investigations offer a rich, multi-course exploration of individual experiences, providing insights that broader studies might miss. Let’s dig in, shall we?

Single-subject designs focus on one individual, much like a chef crafting a bespoke dish for a discerning patron. These studies involve repeated observations of a single person over time, often used to track the progress of therapy or the effects of a specific intervention. It’s like watching a caterpillar transform into a butterfly, documenting every fascinating stage of the metamorphosis.
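Single-subject data are often summarized by comparing a baseline phase (before the intervention) with an intervention phase. The Python sketch below assumes a simple two-phase layout and made-up weekly counts for one hypothetical client; the function name `phase_summary` and every number are illustrative, not drawn from any study.

```python
from statistics import mean

# Hypothetical weekly counts of a target behavior (e.g., panic episodes)
# for one client. Phase A = baseline, phase B = during the intervention.
baseline = [7, 6, 8, 7]
intervention = [5, 4, 3, 3, 2]


def phase_summary(baseline, intervention):
    """Return the mean of each phase and the change from baseline.

    A negative change means the behavior occurred less often during the
    intervention phase than at baseline.
    """
    a_mean = mean(baseline)
    b_mean = mean(intervention)
    return {
        "baseline_mean": a_mean,
        "intervention_mean": b_mean,
        "change": b_mean - a_mean,
    }


print(phase_summary(baseline, intervention))
```

Comparing phase means is only one way to read such data; single-case researchers also rely heavily on visually inspecting the plotted series for changes in level, trend, and variability.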

Multiple-case designs, on the other hand, are more like a carefully curated flight of wines. They involve studying several individuals or groups, allowing researchers to identify patterns and themes across different cases. This approach can be particularly powerful in fields like clinical psychology, where comparing the experiences of multiple patients can shed light on the nuances of a particular disorder or treatment approach.

The strengths of case studies lie in their ability to provide rich, detailed accounts of psychological phenomena. They’re like high-definition cameras, capturing the subtle expressions and gestures that might be missed in a wide-angle shot. Case studies are particularly valuable for studying rare conditions or unique circumstances that don’t lend themselves to large-scale research.

However, like a delicate soufflé, case studies have their vulnerabilities. The small sample size makes it difficult to generalize findings to broader populations. It’s a bit like trying to understand the entire culinary world based on one exquisite meal. Moreover, researcher bias can creep in, potentially skewing the interpretation of the data.

Despite these limitations, case studies have played a pivotal role in the history of psychology. Who could forget the famous case of “Little Hans,” which shaped Freud’s theories of psychosexual development? Or the tragic story of Phineas Gage, whose personality change after a brain injury became a landmark in thinking about the frontal lobe’s role in personality and decision-making?

These landmark cases serve as a reminder of the power of in-depth, qualitative research in advancing our understanding of the human mind. They’re the secret ingredients that add depth and flavor to the rich stew of psychological knowledge.

Surveys and Questionnaires: Casting a Wide Net in the Sea of Human Experience

Now, let’s turn our attention to surveys and questionnaires – the all-you-can-eat buffet of psychological research methods. These tools allow researchers to gather large amounts of data from diverse populations, offering a broad view of attitudes, beliefs, and behaviors. It’s like conducting a massive taste test to understand the flavor preferences of an entire city.

The survey method in psychology comes in various forms, each designed to elicit different types of information. Closed-ended questions are like multiple-choice menus, offering respondents a set of predefined options to choose from. These are great for gathering quantitative data that can be easily analyzed statistically.

Open-ended questions, on the other hand, are more like asking diners to describe their ideal meal. They allow respondents to answer in their own words, providing rich, qualitative data that can uncover unexpected insights and nuances.

Likert scales, those ubiquitous “strongly disagree” to “strongly agree” options, are the spice rack of surveys. They allow researchers to measure the intensity of attitudes or opinions, adding depth and flavor to the data collected.
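In practice, Likert responses are usually converted to numeric codes before analysis. Here is a minimal Python sketch, assuming a 5-point format coded 1 to 5; the label-to-number mapping is a common convention rather than a universal standard, and the item and answers are hypothetical.

```python
from statistics import mean

# Assumed 5-point response format, coded 1 (lowest agreement) to 5 (highest).
LIKERT_5 = {
    "strongly disagree": 1,
    "disagree": 2,
    "neither agree nor disagree": 3,
    "agree": 4,
    "strongly agree": 5,
}


def score_item(responses, scale=LIKERT_5):
    """Convert text responses to numeric codes and return (codes, mean score).

    Responses are normalized (trimmed, lowercased) before lookup; an
    unrecognized label raises KeyError rather than being guessed.
    """
    codes = [scale[r.strip().lower()] for r in responses]
    return codes, mean(codes)


# Hypothetical answers to one item, e.g. "I feel safe at this clinic."
answers = ["Agree", "strongly agree", "Neither agree nor disagree", "agree"]
codes, item_mean = score_item(answers)
print(codes, item_mean)
```

Whether it is appropriate to average Likert codes, which are ordinal, is itself debated; some researchers report medians or the frequency of each response instead.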

The design and administration of surveys is an art in itself. It’s like crafting the perfect menu – you need to consider the order of questions, the wording, and even the visual layout to ensure you’re getting accurate, unbiased responses. Online surveys have revolutionized this process, making it easier than ever to reach large, diverse populations. However, they also bring new challenges, such as ensuring representative samples and dealing with the potential for low response rates.

Analyzing survey data is where the real magic happens. It’s like a master chef tasting and adjusting a complex sauce. Researchers use statistical techniques to identify patterns, correlations, and trends in the data. They might look for relationships between variables, compare responses across different demographic groups, or track changes in attitudes over time.
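Two of the analyses mentioned above, looking for relationships between variables and comparing responses across demographic groups, can be sketched in a few lines of Python. The survey items, age bands, and data are hypothetical, and Pearson's r is used here as one common correlation measure, not the only option.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / (sx * sy)


def group_means(scores, groups):
    """Mean score for each group label (e.g., a demographic category)."""
    by_group = {}
    for score, group in zip(scores, groups):
        by_group.setdefault(group, []).append(score)
    return {group: mean(values) for group, values in by_group.items()}


# Hypothetical survey: hours of sleep, a 1-10 stress rating, and an age band.
sleep = [5, 6, 7, 8, 6, 7]
stress = [8, 7, 5, 3, 6, 4]
age_band = ["18-29", "18-29", "30-49", "30-49", "50+", "50+"]

print(round(pearson_r(sleep, stress), 2))
print(group_means(stress, age_band))
```

With these made-up numbers the correlation is strongly negative (about -0.97): respondents who reported more sleep also reported less stress. As with any correlational finding, that describes an association, not a cause.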

Survey research in psychology has its strengths and limitations. On the plus side, it allows researchers to gather large amounts of data relatively quickly and inexpensively. It’s particularly useful for studying attitudes, beliefs, and self-reported behaviors that might be difficult to observe directly.

However, surveys rely on self-report, which can be as unreliable as asking someone to accurately recall everything they ate last week. People may not always be honest, may misremember, or may be influenced by social desirability bias – the tendency to give answers that make them look good.

Despite these challenges, surveys and questionnaires remain a staple in the psychological research diet. They provide valuable insights into the thoughts, feelings, and experiences of large populations, helping to paint a broad picture of human psychology.

Applications of Descriptive Methods in Psychology: From the Clinic to the Classroom

Now that we’ve sampled the various flavors of descriptive methods, let’s explore how these techniques are applied across different areas of psychology. It’s like watching a master chef adapt their skills to create dishes for different cuisines.

In clinical psychology, descriptive methods are the bread and butter of diagnosis and treatment. Clinicians use structured interviews and behavioral observations to assess symptoms and track treatment progress. Case studies of individual patients often provide valuable insights into rare disorders or unique treatment approaches. It’s like a medical detective story, piecing together clues to understand and treat complex psychological conditions.

Developmental psychologists use observational methods to study how children grow and change over time. They might observe infants’ reactions to new stimuli, track language development in toddlers, or use longitudinal surveys to follow adolescents’ social and emotional development. It’s like watching a time-lapse video of a plant growing from seed to flower, capturing each stage of development in exquisite detail.

Social psychologists employ a smorgasbord of descriptive methods to study human interaction and group behavior. They might use naturalistic observation to study crowd behavior at sporting events, conduct surveys to measure attitudes towards social issues, or analyze social media content to understand online communication patterns. It’s like being a culinary anthropologist, studying the social rituals and customs around food in different cultures.

In organizational psychology, surveys and questionnaires are the main course. Researchers use these tools to measure employee satisfaction, assess organizational culture, and evaluate the effectiveness of training programs. It’s akin to a restaurant critic sampling dishes from every section of the menu to provide a comprehensive review.

Cross-cultural psychology relies heavily on descriptive methods to understand how cultural factors influence behavior and mental processes. Researchers might use participant observation to immerse themselves in different cultures, conduct surveys to compare attitudes across countries, or analyze cultural artifacts to understand societal values. It’s like exploring a global food market, sampling dishes from around the world to understand the rich diversity of human experience.

Conclusion: The Continuing Feast of Psychological Research

As we come to the end of our culinary tour of descriptive methods in psychology, let’s take a moment to savor the rich flavors we’ve experienced. From the subtle notes of naturalistic observation to the complex bouquet of case studies, from the broad palette of surveys to the deep umami of archival research, these methods form the foundation of our understanding of the human mind and behavior.

The key takeaway? There’s no one-size-fits-all approach in psychological research. Just as a skilled chef chooses their techniques based on the ingredients at hand and the dish they want to create, psychologists must select the most appropriate methods for their research questions. Sometimes, a mixed-methods approach – combining different descriptive techniques or blending descriptive and experimental methods – can provide the most comprehensive understanding of a phenomenon.

Looking to the future, we can expect to see new flavors added to the menu of psychological methods. Advances in technology are opening up exciting possibilities for data collection and analysis. Big data and machine learning algorithms might allow researchers to analyze vast amounts of naturally occurring behavioral data. Virtual reality could provide new ways to conduct controlled observations in realistic settings.

Yet, amidst these innovations, the core principles of descriptive research remain as crucial as ever. The careful observation, detailed documentation, and thoughtful analysis that characterize these methods will continue to be essential ingredients in the recipe of psychological understanding.

As we close this exploration, let’s remember that behind every statistic, every case study, every survey response, there’s a human story. Research methods in psychology are not just dry, academic tools – they’re the means by which we unravel the mysteries of the human experience, one observation at a time.

So, the next time you find yourself people-watching in a café, or pondering the results of a public opinion poll, or engrossed in a documentary about an extraordinary individual, remember – you’re engaging in a form of descriptive research. You’re participating in the grand, ongoing feast of human understanding. Bon appétit!

References:

1. Coolican, H. (2014). Research Methods and Statistics in Psychology. Psychology Press.

2. Creswell, J. W., & Poth, C. N. (2016). Qualitative Inquiry and Research Design: Choosing Among Five Approaches. SAGE Publications.

3. Goodwin, C. J., & Goodwin, K. A. (2016). Research in Psychology: Methods and Design. John Wiley & Sons.

4. Kazdin, A. E. (2011). Single-case research designs: Methods for clinical and applied settings. Oxford University Press.

5. Leedy, P. D., & Ormrod, J. E. (2015). Practical Research: Planning and Design. Pearson.

6. Mehl, M. R., & Conner, T. S. (Eds.). (2012). Handbook of Research Methods for Studying Daily Life. Guilford Press.

7. Mertens, D. M. (2014). Research and Evaluation in Education and Psychology: Integrating Diversity With Quantitative, Qualitative, and Mixed Methods. SAGE Publications.

8. Ritchie, J., Lewis, J., Nicholls, C. M., & Ormston, R. (Eds.). (2013). Qualitative Research Practice: A Guide for Social Science Students and Researchers. SAGE.

9. Shaughnessy, J. J., Zechmeister, E. B., & Zechmeister, J. S. (2014). Research Methods in Psychology. McGraw-Hill Education.

10. Yin, R. K. (2017). Case Study Research and Applications: Design and Methods. SAGE Publications.


Chapter 3. Psychological Science

3.2 Psychologists Use Descriptive, Correlational, and Experimental Research Designs to Understand Behaviour

Learning objectives.

- Differentiate the goals of descriptive, correlational, and experimental research designs and explain the advantages and disadvantages of each.

- Explain the goals of descriptive research and the statistical techniques used to interpret it.

- Summarize the uses of correlational research and describe why correlational research cannot be used to infer causality.

- Review the procedures of experimental research and explain how it can be used to draw causal inferences.

Psychologists agree that if their ideas and theories about human behaviour are to be taken seriously, they must be backed up by data. However, the research of different psychologists is designed with different goals in mind, and the different goals require different approaches. These varying approaches, summarized in Table 3.2, are known as research designs. A research design is the specific method a researcher uses to collect, analyze, and interpret data. Psychologists use three major types of research designs in their research, and each provides an essential avenue for scientific investigation. Descriptive research is research designed to provide a snapshot of the current state of affairs. Correlational research is research designed to discover relationships among variables and to allow the prediction of future events from present knowledge. Experimental research is research in which initial equivalence among research participants in more than one group is created, followed by a manipulation of a given experience for these groups and a measurement of the influence of the manipulation. Each of the three research designs has its own strengths and limitations, and it is important to understand how each differs.

Descriptive Research: Assessing the Current State of Affairs

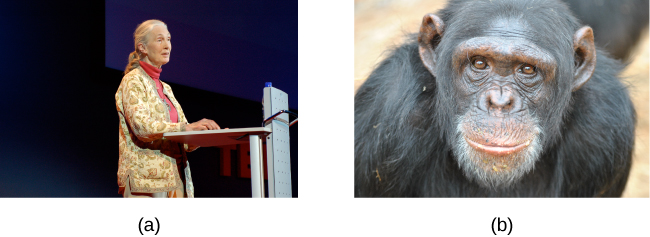

Descriptive research is designed to create a snapshot of the current thoughts, feelings, or behaviour of individuals. This section reviews three types of descriptive research: case studies, surveys, and naturalistic observation (Figure 3.4).

Sometimes the data in a descriptive research project are based on only a small set of individuals, often only one person or a single small group. These research designs are known as case studies: descriptive records of one or more individuals’ experiences and behaviour. Sometimes case studies involve ordinary individuals, as when developmental psychologist Jean Piaget used his observation of his own children to develop his stage theory of cognitive development. More frequently, case studies are conducted on individuals who have unusual or abnormal experiences or characteristics or who find themselves in particularly difficult or stressful situations. The assumption is that by carefully studying individuals who are socially marginal, who are experiencing unusual situations, or who are going through a difficult phase in their lives, we can learn something about human nature.

Sigmund Freud was a master of using the psychological difficulties of individuals to draw conclusions about basic psychological processes. Freud wrote case studies of some of his most interesting patients and used these careful examinations to develop his important theories of personality. One classic example is Freud’s description of “Little Hans,” a child whose fear of horses the psychoanalyst interpreted in terms of repressed sexual impulses and the Oedipus complex (Freud, 1909/1964).

Another well-known case study is Phineas Gage, a man whose thoughts and emotions were extensively studied by cognitive psychologists after an iron tamping rod was blasted through his skull in an accident. Although there are questions about the interpretation of this case study (Kotowicz, 2007), it did provide early evidence that the brain’s frontal lobe is involved in emotion and morality (Damasio et al., 2005). An interesting example of a case study in clinical psychology is described by Rokeach (1964), who investigated in detail the beliefs of and interactions among three patients with schizophrenia, all of whom were convinced they were Jesus Christ.

In other cases the data from descriptive research projects come in the form of a survey: a measure administered through either an interview or a written questionnaire to get a picture of the beliefs or behaviours of a sample of people of interest. The people chosen to participate in the research (known as the sample) are selected to be representative of all the people that the researcher wishes to know about (the population). In election polls, for instance, a sample is taken from the population of all “likely voters” in the upcoming elections.
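The text does not say how a representative sample is chosen. One common approach, assumed here purely for illustration, is simple random sampling, in which every member of the population has the same chance of being selected. The short Python sketch below draws such a sample from a hypothetical population of voter ID numbers.

```python
import random

# Hypothetical population: ID numbers for 10,000 "likely voters"
population = list(range(1, 10_001))

# A fixed seed makes the example reproducible
rng = random.Random(42)

# Simple random sampling without replacement: each voter is equally
# likely to be chosen, and no voter can be chosen twice
sample = rng.sample(population, k=500)

print(len(sample))       # 500
print(len(set(sample)))  # 500 (no duplicates)
```

In a real poll the hard part is building a complete list of the population to sample from; the random draw itself is the easy step.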

The results of surveys may sometimes be rather mundane, such as “Nine out of 10 doctors prefer Tymenocin” or “The median income in the city of Hamilton is $46,712.” Yet other times (particularly in discussions of social behaviour), the results can be shocking: “More than 40,000 people are killed by gunfire in the United States every year” or “More than 60% of women between the ages of 50 and 60 suffer from depression.” Descriptive research is frequently used by psychologists to get an estimate of the prevalence (or incidence) of psychological disorders.

A final type of descriptive research, known as naturalistic observation, is research based on the observation of everyday events. For instance, a developmental psychologist who watches children on a playground and describes what they say to each other while they play is conducting descriptive research, as is a biopsychologist who observes animals in their natural habitats. One example of observational research involves a systematic procedure known as the strange situation, used to get a picture of how adults and young children interact. The data that are collected in the strange situation are systematically coded in a coding sheet such as that shown in Table 3.3.

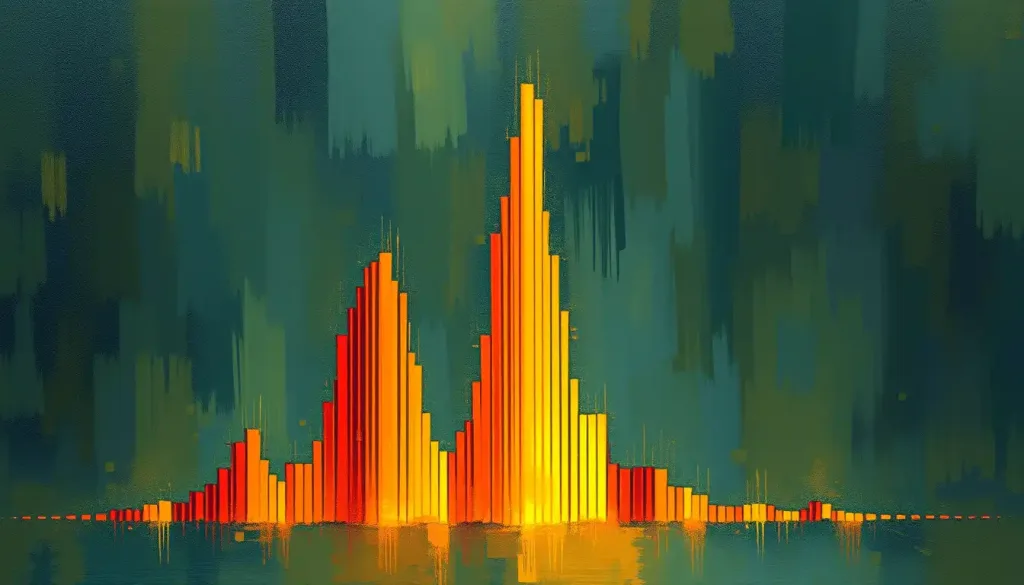

The results of descriptive research projects are analyzed using descriptive statistics: numbers that summarize the distribution of scores on a measured variable. Most variables have distributions similar to that shown in Figure 3.5, where most of the scores are located near the centre of the distribution, and the distribution is symmetrical and bell-shaped. A data distribution that is shaped like a bell is known as a normal distribution.

A distribution can be described in terms of its central tendency (that is, the point in the distribution around which the data are centred) and its dispersion, or spread. The arithmetic average, or arithmetic mean, symbolized by the letter M, is the most commonly used measure of central tendency. It is computed by adding up all the scores of the variable and dividing this sum by the number of participants in the distribution (denoted by the letter N). In the data presented in Figure 3.5, the mean height of the students is 67.12 inches (170.5 cm).
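Written as a formula with the text's symbols (M for the mean, N for the number of scores), plus X_i for the i-th score (a subscript notation introduced here for illustration), the mean is shown below, followed by a worked example using five hypothetical heights in inches.

```latex
M = \frac{\sum_{i=1}^{N} X_i}{N}
\qquad\text{e.g.}\qquad
M = \frac{62 + 65 + 67 + 70 + 72}{5} = \frac{336}{5} = 67.2
```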

In some cases, however, the data distribution is not symmetrical. This occurs when there are one or more extreme scores (known as outliers) at one end of the distribution. Consider, for instance, the variable of family income (see Figure 3.6), which includes an outlier (a value of $3,800,000). In this case the mean is not a good measure of central tendency. Although it appears from Figure 3.6 that the central tendency of the family income variable should be around $70,000, the mean family income is actually $223,960. The single very extreme income has a disproportionate impact on the mean, resulting in a value that does not well represent the central tendency.

The median is used as an alternative measure of central tendency when distributions are not symmetrical. The median is the score in the centre of the distribution, meaning that 50% of the scores are greater than the median and 50% of the scores are less than the median. In our case, the median family income ($73,000) is a much better indication of central tendency than is the mean family income ($223,960).
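To see the outlier effect directly, the Python sketch below compares the mean and median of a small set of hypothetical family incomes (invented for illustration; these are not the 25 values behind Figure 3.6). It uses the standard library's `statistics` module.

```python
import statistics

# Hypothetical annual family incomes; the last value is an outlier
incomes = [44_000, 52_000, 61_000, 68_000, 73_000,
           80_000, 93_000, 111_000, 3_800_000]

mean_income = statistics.mean(incomes)      # pulled far upward by the outlier
median_income = statistics.median(incomes)  # middle score once sorted

print(f"Mean:   ${mean_income:,.0f}")    # Mean:   $486,889
print(f"Median: ${median_income:,.0f}")  # Median: $73,000

# Dropping the outlier moves the mean much more than the median
without_outlier = incomes[:-1]
print(f"Mean without outlier:   ${statistics.mean(without_outlier):,.0f}")    # $72,750
print(f"Median without outlier: ${statistics.median(without_outlier):,.0f}")  # $70,500
```

Removing one extreme family shifts the mean by more than $400,000 but the median by only $2,500, which is why the median is preferred for skewed variables such as income.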

A final measure of central tendency, known as the mode, represents the value that occurs most frequently in the distribution. You can see from Figure 3.6 that the mode for the family income variable is $93,000 (it occurs four times).
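A minimal Python sketch of finding the mode, again with hypothetical income values. `statistics.mode` returns the single most frequent value, and `statistics.multimode` (Python 3.8 and later) returns every value tied for most frequent, since a distribution can have more than one mode.

```python
import statistics

# Hypothetical incomes in which $93,000 appears most often
incomes = [44_000, 61_000, 73_000, 93_000, 93_000, 93_000, 111_000]
print(statistics.mode(incomes))  # 93000

# A distribution can have more than one mode; multimode() returns all of
# them, in the order they first appear in the data
bimodal = [1, 2, 2, 3, 3, 4]
print(statistics.multimode(bimodal))  # [2, 3]
```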

In addition to summarizing the central tendency of a distribution, descriptive statistics convey information about how the scores of the variable are spread around the central tendency. Dispersion refers to the extent to which the scores are all tightly clustered around the central tendency, as seen in Figure 3.7, or more spread out away from it, as seen in Figure 3.8.

One simple measure of dispersion is to find the largest (the maximum) and the smallest (the minimum) observed values of the variable and to compute the range of the variable as the maximum observed score minus the minimum observed score. You can check that the range of the height variable in Figure 3.5 is 72 – 62 = 10. The standard deviation, symbolized as s, is the most commonly used measure of dispersion. Distributions with a larger standard deviation have more spread. The standard deviation of the height variable is s = 2.74, and the standard deviation of the family income variable is s = $745,337.
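The Python sketch below computes the range and standard deviation for a set of hypothetical heights (not the Figure 3.5 data, so the numbers differ from the text's). It assumes the sample formula for s, which divides by N − 1, as `statistics.stdev` does; the text does not state which convention it uses, and `statistics.pstdev` would give the population version instead. The last lines contrast a tightly clustered and a widely spread distribution with the same mean.

```python
import statistics

# Hypothetical heights in inches (not the data behind Figure 3.5)
heights = [62, 64, 65, 66, 67, 67, 68, 69, 70, 72]

# Range: maximum observed score minus minimum observed score
value_range = max(heights) - min(heights)
print(value_range)  # 10

# Sample standard deviation (divides by N - 1)
s = statistics.stdev(heights)
print(round(s, 2))  # 2.94

# Two hypothetical distributions with the same mean (67) but different spread
tight = [66, 67, 67, 67, 68]
spread = [58, 62, 67, 72, 76]
print(round(statistics.stdev(tight), 2), round(statistics.stdev(spread), 2))  # 0.71 7.28
```

The range depends on only two scores, whereas the standard deviation uses every score's distance from the mean, which is one reason it is the more commonly reported measure.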

An advantage of descriptive research is that it attempts to capture the complexity of everyday behaviour. Case studies provide detailed information about a single person or a small group of people, surveys capture the thoughts or reported behaviours of a large population of people, and naturalistic observation objectively records the behaviour of people or animals as it occurs naturally. Thus descriptive research is used to provide a relatively complete understanding of what is currently happening.

Despite these advantages, descriptive research has a distinct disadvantage in that, although it allows us to get an idea of what is currently happening, it is usually limited to static pictures. Although descriptions of particular experiences may be interesting, they are not always transferable to other individuals in other situations, nor do they tell us exactly why specific behaviours or events occurred. For instance, descriptions of individuals who have suffered a stressful event, such as a war or an earthquake, can be used to understand the individuals’ reactions to the event but cannot tell us anything about the long-term effects of the stress. And because there is no comparison group that did not experience the stressful situation, we cannot know what these individuals would be like if they hadn’t had the stressful experience.

Correlational Research: Seeking Relationships among Variables

In contrast to descriptive research, which is designed primarily to provide static pictures, correlational research involves the measurement of two or more relevant variables and an assessment of the relationship between or among those variables. For instance, the variables of height and weight are systematically related (correlated) because taller people generally weigh more than shorter people. In the same way, study time and memory errors are also related, because the more time a person is given to study a list of words, the fewer errors he or she will make. When there are two variables in the research design, one of them is called the predictor variable and the other the outcome variable. The research design can be visualized as shown in Figure 3.9, where the curved arrow represents the expected correlation between these two variables.

One way of organizing the data from a correlational study with two variables is to graph the values of each of the measured variables using a scatter plot. As you can see in Figure 3.10, a scatter plot is a visual image of the relationship between two variables. A point is plotted for each individual at the intersection of his or her scores for the two variables. When the association between the variables on the scatter plot can be easily approximated with a straight line, as in parts (a) and (b) of Figure 3.10, the variables are said to have a linear relationship.

When the straight line indicates that individuals who have above-average values for one variable also tend to have above-average values for the other variable, as in part (a), the relationship is said to be positive linear. Examples of positive linear relationships include those between height and weight, between education and income, and between age and mathematical abilities in children. In each case, people who score higher on one of the variables also tend to score higher on the other variable. Negative linear relationships, in contrast, as shown in part (b), occur when above-average values for one variable tend to be associated with below-average values for the other variable. Examples of negative linear relationships include those between the age of a child and the number of diapers the child uses, and between the amount of practice on a learning task and the number of errors made on it. In these cases, people who score higher on one of the variables tend to score lower on the other variable.

Relationships between variables that cannot be described with a straight line are known as nonlinear relationships. Part (c) of Figure 3.10 shows a common pattern in which the distribution of the points is essentially random. In this case there is no relationship at all between the two variables, and they are said to be independent. Parts (d) and (e) of Figure 3.10 show patterns of association in which, although there is an association, the points are not well described by a single straight line. For instance, part (d) shows the type of relationship that frequently occurs between anxiety and performance. Increases in anxiety from low to moderate levels are associated with performance increases, whereas increases in anxiety from moderate to high levels are associated with decreases in performance. Relationships that change in direction and thus are not described by a single straight line are called curvilinear relationships.

The most common statistical measure of the strength of linear relationships among variables is the Pearson correlation coefficient, which is symbolized by the letter r. The value of the correlation coefficient ranges from r = –1.00 to r = +1.00. The direction of the linear relationship is indicated by the sign of the correlation coefficient. Positive values of r (such as r = .54 or r = .67) indicate that the relationship is positive linear (i.e., the pattern of the dots on the scatter plot runs from the lower left to the upper right), whereas negative values of r (such as r = –.30 or r = –.72) indicate negative linear relationships (i.e., the dots run from the upper left to the lower right). The strength of the linear relationship is indexed by the distance of the correlation coefficient from zero (its absolute value). For instance, r = –.54 is a stronger relationship than r = .30, and r = .72 is a stronger relationship than r = –.57. Because the Pearson correlation coefficient only measures linear relationships, variables that have curvilinear relationships are not well described by r, and the observed correlation may be close to zero even when the two variables are strongly related.
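As a minimal sketch, Pearson's r can be computed as the sum of the products of each pair of deviations from the means, divided by the square root of the product of the two sums of squared deviations. The Python function below (`pearson_r` is a name chosen here for illustration) applies that formula to two hypothetical data sets: a negative linear relationship between study time and memory errors, and an inverted-U relationship like the anxiety and performance pattern in part (d), where r comes out at exactly zero despite a clear association.

```python
import math

def pearson_r(x, y):
    """Pearson correlation: sum of products of deviations from the means,
    divided by the square root of the product of the summed squared deviations."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    dx = [xi - mean_x for xi in x]
    dy = [yi - mean_y for yi in y]
    numerator = sum(a * b for a, b in zip(dx, dy))
    denominator = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return numerator / denominator

# Hypothetical study time (minutes) and memory errors: negative linear
study = [5, 10, 15, 20, 25, 30]
errors = [12, 10, 9, 6, 5, 2]
print(round(pearson_r(study, errors), 2))  # -0.99

# Hypothetical inverted-U (anxiety vs. performance): a strong curvilinear
# relationship, yet r is zero because r only captures linear trends
anxiety = [1, 2, 3, 4, 5]
performance = [2, 5, 6, 5, 2]
print(pearson_r(anxiety, performance))  # 0.0
```

On Python 3.10 or later, the standard library's `statistics.correlation` computes the same Pearson coefficient.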

It is also possible to study relationships among more than two measures at the same time. A research design in which more than one predictor variable is used to predict a single outcome variable is analyzed through multiple regression (Aiken & West, 1991). Multiple regression is a statistical technique, based on correlation coefficients among variables, that allows predicting a single outcome variable from more than one predictor variable. For instance, Figure 3.11 shows a multiple regression analysis in which three predictor variables (salary, job satisfaction, and years employed) are used to predict a single outcome (job performance). The use of multiple regression analysis shows an important advantage of correlational research designs: they can be used to make predictions about a person’s likely score on an outcome variable (e.g., job performance) based on knowledge of other variables.
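The sketch below illustrates the Figure 3.11 design with simulated, entirely hypothetical data: three predictors (salary, job satisfaction, years employed) and one outcome (job performance). It assumes NumPy is installed and fits the regression by ordinary least squares with `numpy.linalg.lstsq`; the "true" weights used to simulate performance are invented so the reader can check that the fitted weights recover them.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200  # hypothetical number of employees

# Simulated predictor variables (not real data)
salary = rng.normal(60, 10, n)        # thousands of dollars
satisfaction = rng.normal(5, 1.5, n)  # rating on an assumed 1-10 scale
years = rng.uniform(0, 20, n)         # years employed

# Simulated outcome: assumed true weights plus random noise
performance = (20 + 0.3 * salary + 4.0 * satisfaction + 0.5 * years
               + rng.normal(0, 2, n))

# Design matrix: a column of 1s for the intercept, then the three predictors
X = np.column_stack([np.ones(n), salary, satisfaction, years])
coefs, *_ = np.linalg.lstsq(X, performance, rcond=None)
intercept, b_salary, b_satisfaction, b_years = coefs
print(np.round(coefs, 2))  # estimates should land near 20, 0.3, 4.0, 0.5

# Predict job performance for a new hypothetical employee:
# salary 65, satisfaction 7, 10 years employed
new_employee = np.array([1, 65, 7, 10])
print(round(float(new_employee @ coefs), 1))
```

The final line is the practical payoff the text describes: once the weights are estimated, a person's likely outcome score can be predicted from the predictor scores alone. The weights are still correlational and say nothing about causation.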

An important limitation of correlational research designs is that they cannot be used to draw conclusions about the causal relationships among the measured variables. Consider, for instance, a researcher who has hypothesized that viewing violent behaviour will cause increased aggressive play in children. He has collected, from a sample of Grade 4 children, a measure of how many violent television shows each child views during the week, as well as a measure of how aggressively each child plays on the school playground. From his collected data, the researcher discovers a positive correlation between the two measured variables.

Although this positive correlation appears to support the researcher’s hypothesis, it cannot be taken to indicate that viewing violent television causes aggressive behaviour. The researcher may be tempted to draw that conclusion, but there are other possibilities. One alternative is that the causal direction is exactly opposite from what has been hypothesized. Perhaps children who have behaved aggressively at school develop residual excitement that leads them to want to watch violent television shows at home (Figure 3.13).

Although this possibility may seem less likely, there is no way to rule out the possibility of such reverse causation on the basis of this observed correlation. It is also possible that both causal directions are operating and that the two variables cause each other (Figure 3.14).

Still another possible explanation for the observed correlation is that it has been produced by the presence of a common-causal variable (also known as a third variable). A common-causal variable is a variable that is not part of the research hypothesis but that causes both the predictor and the outcome variable and thus produces the observed correlation between them. In our example, a potential common-causal variable is the discipline style of the children’s parents. Parents who use a harsh and punitive discipline style may produce children who like to watch violent television and who also behave aggressively in comparison to children whose parents use less harsh discipline (Figure 3.15).

In this case, television viewing and aggressive play would be positively correlated (as indicated by the curved arrow between them), even though neither one caused the other; instead, both were caused by the discipline style of the parents (the straight arrows). When the predictor and outcome variables are both caused by a common-causal variable, the observed relationship between them is said to be spurious. A spurious relationship is a relationship between two variables in which a common-causal variable produces and “explains away” the relationship. If effects of the common-causal variable were taken away, or controlled for, the relationship between the predictor and outcome variables would disappear. In the example, the relationship between aggression and television viewing might be spurious: if we controlled for the effect of the parents’ discipline style, the relationship between television viewing and aggressive behaviour might go away.
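A small simulation can make "controlling for" concrete. In the hypothetical Python sketch below (assuming NumPy is installed), parental discipline harshness causes both violent-TV viewing and aggression, while the two outcomes have no effect on each other. Their raw correlation is nonetheless positive (about .33 in the population under these invented settings). Removing the linear effect of discipline from each variable and correlating what remains, which is one standard way to compute a partial correlation, brings the relationship down to roughly zero.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 10_000  # large simulated sample so the pattern is stable

# Hypothetical common-causal variable: harshness of parents' discipline style
discipline = rng.normal(0, 1, n)

# Both variables depend on discipline, but NOT on each other
tv_violence = 0.7 * discipline + rng.normal(0, 1, n)
aggression = 0.7 * discipline + rng.normal(0, 1, n)

raw_r = np.corrcoef(tv_violence, aggression)[0, 1]

def residuals(y, x):
    """Remove the linear effect of x from y (simple regression residuals)."""
    slope, intercept = np.polyfit(x, y, 1)
    return y - (slope * x + intercept)

# Controlling for discipline: correlate what is left over in each variable
partial_r = np.corrcoef(residuals(tv_violence, discipline),
                        residuals(aggression, discipline))[0, 1]

print(round(raw_r, 2))      # clearly positive
print(round(partial_r, 2))  # close to zero
```

The catch, as the next paragraph explains, is that this only works for a common-causal variable the researcher thought to measure.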

Common-causal variables in correlational research designs can be thought of as mystery variables because, as they have not been measured, their presence and identity are usually unknown to the researcher. Since it is not possible to measure every variable that could cause both the predictor and outcome variables, the existence of an unknown common-causal variable is always a possibility. For this reason, we are left with the basic limitation of correlational research: correlation does not demonstrate causation. It is important that when you read about correlational research projects, you keep in mind the possibility of spurious relationships, and be sure to interpret the findings appropriately. Although correlational research is sometimes reported as demonstrating causality without any mention being made of the possibility of reverse causation or common-causal variables, informed consumers of research, like you, are aware of these interpretational problems.

In sum, correlational research designs have both strengths and limitations. One strength is that they can be used when experimental research is not possible because the predictor variables cannot be manipulated. Correlational designs also have the advantage of allowing the researcher to study behaviour as it occurs in everyday life. And we can use correlational designs to make predictions, for instance, to predict the success of job trainees during a training session from their scores on a battery of tests. But we cannot use such correlational information to determine whether the training caused better job performance. For that, researchers rely on experiments.

Experimental Research: Understanding the Causes of Behaviour

The goal of experimental research design is to provide more definitive conclusions about the causal relationships among the variables in the research hypothesis than is available from correlational designs. In an experimental research design, the variables of interest are called the independent variable (or variables) and the dependent variable. The independent variable in an experiment is the causing variable that is created (manipulated) by the experimenter. The dependent variable in an experiment is a measured variable that is expected to be influenced by the experimental manipulation. The research hypothesis suggests that the manipulated independent variable or variables will cause changes in the measured dependent variables. We can diagram the research hypothesis by using an arrow that points in one direction. This demonstrates the expected direction of causality (Figure 3.16).

Research Focus: Video Games and Aggression

Consider an experiment conducted by Anderson and Dill (2000). The study was designed to test the hypothesis that playing violent video games would increase aggressive behaviour. In this research, male and female undergraduates from Iowa State University were given a chance to play with either a violent video game (Wolfenstein 3D) or a nonviolent video game (Myst). During the experimental session, the participants played their assigned video games for 15 minutes. Then, after the play, each participant played a competitive game with an opponent in which the participant could deliver blasts of white noise through the earphones of the opponent. The operational definition of the dependent variable (aggressive behaviour) was the level and duration of noise delivered to the opponent. The design of the experiment is shown in Figure 3.17.

Two advantages of the experimental research design are (a) the assurance that the independent variable (also known as the experimental manipulation) occurs prior to the measured dependent variable, and (b) the creation of initial equivalence between the conditions of the experiment (in this case by using random assignment to conditions).

Experimental designs have two very nice features. For one, they guarantee that the independent variable occurs prior to the measurement of the dependent variable. This eliminates the possibility of reverse causation. Second, the influence of common-causal variables is controlled, and thus eliminated, by creating initial equivalence among the participants in each of the experimental conditions before the manipulation occurs.

The most common method of creating equivalence among the experimental conditions is through random assignment to conditions, a procedure in which the condition that each participant is assigned to is determined through a random process, such as drawing numbers out of an envelope or using a random number table. Anderson and Dill first randomly assigned about 100 participants to each of their two groups (Group A and Group B). Because they used random assignment to conditions, they could be confident that, before the experimental manipulation occurred, the students in Group A were, on average, equivalent to the students in Group B on every possible variable, including variables that are likely to be related to aggression, such as parental discipline style, peer relationships, hormone levels, diet, and in fact everything else.

Then, after they had created initial equivalence, Anderson and Dill created the experimental manipulation: they had the participants in Group A play the violent game and the participants in Group B play the nonviolent game. Then they compared the dependent variable (the white noise blasts) between the two groups, finding that the students who had played the violent video game gave significantly longer noise blasts than did the students who had played the nonviolent game.

Anderson and Dill had from the outset created initial equivalence between the groups. This initial equivalence allowed them to observe differences in the white noise levels between the two groups after the experimental manipulation, leading to the conclusion that it was the independent variable (and not some other variable) that caused these differences. The idea is that the only thing that was different between the students in the two groups was the video game they had played.
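Random assignment to conditions is easy to sketch in code. The Python function below (`randomly_assign` is a hypothetical helper, and the participant IDs are invented; the text does not describe Anderson and Dill's exact procedure) shuffles the participant list and splits it in half, so each person is equally likely to end up in either group. It plays the same role as drawing numbers out of an envelope.

```python
import random

def randomly_assign(participants, seed=None):
    """Randomly split participants into two groups (A and B).
    If the count is odd, group B receives the extra person."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy, so the caller's list is untouched
    rng.shuffle(shuffled)          # random order = random group membership
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical participant IDs: 200 people, about 100 per group as in the text
participants = [f"P{i:03d}" for i in range(1, 201)]
group_a, group_b = randomly_assign(participants, seed=2000)
print(len(group_a), len(group_b))  # 100 100
```

Because chance alone decides who goes where, any pre-existing differences (discipline style, diet, and so on) are expected to balance out across the groups on average, which is what licenses the causal conclusion.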

Despite the advantage of determining causation, experiments do have limitations. One is that they are often conducted in laboratory situations rather than in the everyday lives of people. Therefore, we do not know whether results that we find in a laboratory setting will necessarily hold up in everyday life. Second, and more important, some of the most interesting and key social variables cannot be experimentally manipulated. If we want to study the influence of the size of a mob on the destructiveness of its behaviour, or to compare the personality characteristics of people who join suicide cults with those of people who do not join such cults, these relationships must be assessed using correlational designs, because it is simply not possible to experimentally manipulate these variables.

Key Takeaways

- Descriptive, correlational, and experimental research designs are used to collect and analyze data.

- Descriptive designs include case studies, surveys, and naturalistic observation. The goal of these designs is to get a picture of the current thoughts, feelings, or behaviours in a given group of people. Descriptive research is summarized using descriptive statistics.

- Correlational research designs measure two or more relevant variables and assess a relationship between or among them. The variables may be presented on a scatter plot to visually show the relationships. The Pearson correlation coefficient (r) is a measure of the strength of the linear relationship between two variables.

- Common-causal variables may cause both the predictor and outcome variable in a correlational design, producing a spurious relationship. The possibility of common-causal variables makes it impossible to draw causal conclusions from correlational research designs.

- Experimental research involves the manipulation of an independent variable and the measurement of a dependent variable. Random assignment to conditions is normally used to create initial equivalence between the groups, allowing researchers to draw causal conclusions.

Exercises and Critical Thinking

- There is a negative correlation between the row a student sits in within a large class (when the rows are numbered from front to back) and his or her final grade in the class. Do you think this represents a causal relationship or a spurious relationship, and why?

- Think of two variables (other than those mentioned in this book) that are likely to be correlated, but in which the correlation is probably spurious. What is the likely common-causal variable that is producing the relationship?

- Imagine a researcher wants to test the hypothesis that participating in psychotherapy will cause a decrease in reported anxiety. Describe the type of research design the investigator might use to draw this conclusion. What would be the independent and dependent variables in the research?

Image Attributions

Figure 3.4: “Reading newspaper” by Alaskan Dude (http://commons.wikimedia.org/wiki/File:Reading_newspaper.jpg) is licensed under CC BY 2.0.

References

Aiken, L., & West, S. (1991). Multiple regression: Testing and interpreting interactions. Newbury Park, CA: Sage.

Ainsworth, M. S., Blehar, M. C., Waters, E., & Wall, S. (1978). Patterns of attachment: A psychological study of the strange situation. Hillsdale, NJ: Lawrence Erlbaum Associates.

Anderson, C. A., & Dill, K. E. (2000). Video games and aggressive thoughts, feelings, and behavior in the laboratory and in life. Journal of Personality and Social Psychology, 78(4), 772–790.

Damasio, H., Grabowski, T., Frank, R., Galaburda, A. M., & Damasio, A. R. (2005). The return of Phineas Gage: Clues about the brain from the skull of a famous patient. In J. T. Cacioppo & G. G. Berntson (Eds.), Social neuroscience: Key readings (pp. 21–28). New York, NY: Psychology Press.

Freud, S. (1909/1964). Analysis of phobia in a five-year-old boy. In E. A. Southwell & M. Merbaum (Eds.), Personality: Readings in theory and research (pp. 3–32). Belmont, CA: Wadsworth. (Original work published 1909).

Kotowicz, Z. (2007). The strange case of Phineas Gage. History of the Human Sciences, 20(1), 115–131.

Rokeach, M. (1964). The three Christs of Ypsilanti: A psychological study. New York, NY: Knopf.

Stangor, C. (2011). Research methods for the behavioural sciences (4th ed.). Mountain View, CA: Cengage.

Long Descriptions

Figure 3.6 long description: There are 25 families. Twenty-four families have an income between $44,000 and $111,000, and one family has an income of $3,800,000. The mean income is $223,960, while the median income is $73,000.

Figure 3.10 long description: Types of scatter plots.

- Positive linear, r = +.82. The points on the graph form a rough line that runs from lower left to upper right.

- Negative linear, r = –.70. The points on the graph form a rough line that runs from upper left to lower right.

- Independent, r = 0.00. The points on the graph are spread out around the centre.

- Curvilinear, r = 0.00. The points on the graph form a rough line that goes up and then down like a hill.

- Curvilinear, r = 0.00. The points on the graph form a rough line that goes down and then up like a ditch.


Introduction to Psychology - 1st Canadian Edition Copyright © 2014 by Jennifer Walinga and Charles Stangor is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Descriptive Research and Case Studies

Learning Objectives

- Explain the importance and uses of descriptive research, especially case studies, in studying abnormal behavior

Types of Research Methods

There are many research methods available to psychologists in their efforts to understand, describe, and explain behavior and the cognitive and biological processes that underlie it. Some methods rely on observational techniques. Other approaches involve interactions between the researcher and the individuals who are being studied, ranging from a series of simple questions to extensive in-depth interviews to well-controlled experiments.

The three main categories of psychological research are descriptive, correlational, and experimental research. Research studies that do not test specific relationships between variables are called descriptive, or qualitative, studies. These studies are used to describe general or specific behaviors and attributes that are observed and measured. In the early stages of research, it might be difficult to form a hypothesis, especially when there is no existing literature in the area. In these situations designing an experiment would be premature, as the question of interest is not yet clearly defined as a hypothesis. Often a researcher will begin with a non-experimental approach, such as a descriptive study, to gather more information about the topic before designing an experiment or correlational study to address a specific hypothesis. Descriptive research is distinct from correlational research, in which psychologists formally test whether a relationship exists between two or more variables. Experimental research goes a step beyond descriptive and correlational research: it randomly assigns people to different conditions and uses hypothesis testing to make inferences about how these conditions affect behavior, with the aim of determining whether one variable directly causes changes in another. Correlational and experimental research both typically use hypothesis testing, whereas descriptive research does not.

Each of these research methods has unique strengths and weaknesses, and each method may only be appropriate for certain types of research questions. For example, studies that rely primarily on observation produce large amounts of detailed information, but the ability to apply this information to the larger population is somewhat limited because of small sample sizes. Survey research, on the other hand, allows researchers to easily collect data from relatively large samples. While surveys allow results to be generalized to the larger population more easily, the information that can be collected on any given survey is somewhat limited and subject to problems associated with any type of self-reported data. Some researchers conduct archival research by using existing records. While existing records can be a fairly inexpensive way to collect data that can provide insight into a number of research questions, researchers using this approach have no control over how the data were collected or what kind of data were collected.

Correlational research can find a relationship between two variables, but the only way a researcher can claim that the relationship between the variables is cause and effect is to perform an experiment. In experimental research, which will be discussed later, there is a tremendous amount of control over variables of interest. While performing an experiment is a powerful approach, experiments are often conducted in very artificial settings, which calls into question the validity of experimental findings with regard to how they would apply in real-world settings. In addition, many of the questions that psychologists would like to answer cannot be pursued through experimental research because of ethical concerns.

The three main types of descriptive studies are case studies, naturalistic observation, and surveys.

Clinical or Case Studies

Psychologists can use a detailed description of one person or a small group based on careful observation. Case studies are intensive studies of individuals and have commonly been seen as a fruitful way to come up with hypotheses and generate theories. They add descriptive richness and are useful for formulating concepts, which are an important aspect of theory construction. Through fine-grained knowledge and description, case studies can specify possible causal mechanisms in a level of detail that may be harder to achieve in a large study.

Sigmund Freud developed many theories from case studies (Anna O., Little Hans, Wolf Man, Dora, etc.). For example, he conducted a case study of a man, nicknamed “Rat Man,” in which he claimed that this patient had been cured by psychoanalysis. The nickname derives from the fact that among the patient’s many compulsions, he had an obsession with nightmarish fantasies about rats.

Today, more commonly, case studies reflect an up-close, in-depth, and detailed examination of an individual’s course of treatment. Case studies typically include a complete history of the subject’s background and response to treatment. From the particular client’s experience in therapy, the therapist’s goal is to provide information that may help other therapists who treat similar clients.

Case studies generally use a single-case design but can also use a multiple-case design, in which replication rather than sampling is the criterion for including cases. Like other research methodologies in psychology, the case study must produce valid and reliable results in order to be useful for the development of future research. The case study in psychology has distinct advantages and disadvantages.

A commonly described limitation of case studies is that they do not lend themselves to generalizability. A second issue is researcher bias: the researcher shapes how the case is written, and cases may be chosen because they are consistent with the researcher’s preconceived notions, resulting in biased research. Another common problem in case study research is reconciling conflicting interpretations of the same case history.

Despite these limitations, case studies have advantages. First, they can lead to novel hypotheses about the causes of abnormal behavior that can be tested later. Second, they can provide detailed descriptions of specific and rare cases, helping us study unusual conditions that occur too infrequently to study with large samples. The major disadvantage, however, is that case studies cannot be used to determine causation, unlike experimental research, in which the factors or variables hypothesized to play a causal role are manipulated or controlled by the researcher.

Link to Learning: Famous Case Studies

Some well-known case studies that relate to abnormal psychology include the following:

- Harlow: Phineas Gage

- Breuer & Freud (1895): Anna O.

- Cleckley’s case studies on psychopathy (The Mask of Sanity, 1941) and multiple personality disorder (The Three Faces of Eve, 1957)

- Freud and Little Hans

- Freud and the Rat Man

- John Money and the John/Joan case

- Genie (feral child)

- Piaget’s studies

- Rosenthal’s book on the murder of Kitty Genovese

- Washoe (sign language)

- Patient H.M.

Naturalistic Observation

If you want to understand how behavior occurs, one of the best ways to gain information is to simply observe the behavior in its natural context. However, people might change their behavior in unexpected ways if they know they are being observed. How do researchers obtain accurate information when people tend to hide their natural behavior? As an example, imagine that your professor asks everyone in your class to raise their hand if they always wash their hands after using the restroom. Chances are that almost everyone in the classroom will raise their hand, but do you think hand washing after every trip to the restroom is really that universal?

This is very similar to the phenomenon mentioned earlier in this module: many individuals do not feel comfortable answering a question honestly. But if we are committed to finding out the facts about handwashing, we have other options available to us.

Suppose we send a researcher to a school playground to observe how aggressive or socially anxious children interact with peers. Will our observer blend into the playground environment by wearing a white lab coat, sitting with a clipboard, and staring at the swings? We want our researcher to be inconspicuous and unobtrusively positioned, perhaps pretending to be a school monitor while secretly recording the relevant information. This type of observational study is called naturalistic observation: observing behavior in its natural setting. To better understand peer exclusion, Suzanne Fanger collaborated with colleagues at the University of Texas to observe the behavior of preschool children on a playground. How did the observers remain inconspicuous over the duration of the study? They equipped a few of the children with wireless microphones (which the children quickly forgot about) and observed while taking notes from a distance. Also, the children in that particular preschool (a “laboratory preschool”) were accustomed to having observers on the playground (Fanger, Frankel, & Hazen, 2012).

It is critical that the observer be as unobtrusive and as inconspicuous as possible: when people know they are being watched, they are less likely to behave naturally. For example, psychologists have spent weeks observing the behavior of homeless people on the streets, in train stations, and in bus terminals. They try to make their naturalistic observations unobtrusive so as to minimize interference with the behavior they observe. Nevertheless, the presence of the observer may distort the behavior that is observed, and this must be taken into consideration (Figure 1).

The greatest benefit of naturalistic observation is the validity, or accuracy, of information collected unobtrusively in a natural setting. Having individuals behave as they normally would in a given situation means that we have a higher degree of ecological validity, or realism, than we might achieve with other research approaches. Therefore, our ability to generalize the findings of the research to real-world situations is enhanced. If the observation is done correctly, we need not worry about people modifying their behavior simply because they are being observed. People sometimes assume that reality programs give us a glimpse into authentic human behavior. However, these programs violate the principle of inconspicuous observation: reality stars are followed by camera crews and are interviewed on camera for personal confessionals. Given that environment, we must doubt how natural and realistic their behaviors are.

The major downside of naturalistic observation is that such studies are often difficult to set up and control. A related method is participant observation. Although observation of some kind may seem to be part of all research methods, participant observation is a distinct methodology in which researchers embed themselves in a group in order to study its dynamics. For example, Festinger, Riecken, and Schachter (1956) were very interested in the psychology of a particular cult. However, the cult was very secretive and would not grant interviews to outsiders. To study its members, Festinger and his colleagues pretended to be cult members themselves, which gave them access to the group’s behavior and psychology. Despite this example, it should be noted that the people being observed in a participant observation study usually know that the researcher is there to study them. [1]

Another potential problem in observational research is observer bias. Generally, people who act as observers are closely involved in the research project and may unconsciously skew their observations to fit their research goals or expectations. To protect against this type of bias, researchers should establish clear criteria for which behaviors are recorded and how those behaviors should be classified. In addition, researchers often compare observations of the same event by multiple observers in order to test inter-rater reliability: a measure of reliability that assesses the consistency of observations by different observers.
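To make inter-rater reliability concrete, the sketch below compares two observers who each coded the same ten playground episodes. The codes are hypothetical, invented purely for illustration, and the choice of statistics is an assumption rather than something the studies above report: simple percent agreement, plus Cohen's kappa, a common index that corrects agreement for the matches two observers would produce by chance alone. Kappa values near 1 indicate strong agreement; values near 0 indicate agreement no better than chance.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of observations that two observers placed in the same category."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: for each category, the probability that both observers
    # would pick it independently, given how often each observer used it.
    p_expected = sum(
        (counts_a[c] / n) * (counts_b[c] / n) for c in set(counts_a) | set(counts_b)
    )
    if p_expected == 1:
        raise ValueError("kappa is undefined when both observers use one identical category")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical codes from two observers watching the same ten playground episodes.
observer_1 = ["aggressive", "prosocial", "neutral", "neutral", "aggressive",
              "prosocial", "neutral", "aggressive", "prosocial", "neutral"]
observer_2 = ["aggressive", "prosocial", "neutral", "aggressive", "aggressive",
              "prosocial", "neutral", "neutral", "prosocial", "neutral"]

print(f"percent agreement: {percent_agreement(observer_1, observer_2):.2f}")  # 0.80
print(f"Cohen's kappa:     {cohens_kappa(observer_1, observer_2):.2f}")      # 0.70
```

In this made-up example the observers agree on 8 of 10 episodes, but because some of those matches would be expected by chance, kappa comes out lower, at about 0.70.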

Surveys

Often, psychologists develop surveys as a means of gathering data. Surveys are lists of questions to be answered by research participants, and they can be delivered as paper-and-pencil questionnaires, administered electronically, or conducted verbally (Figure 3). A survey can generally be completed in a short time, and because surveys are easy to administer, researchers can collect data from a large number of people.

Surveys allow researchers to gather data from larger samples than may be afforded by other research methods. A sample is a subset of individuals selected from a population, which is the overall group of individuals that the researchers are interested in. Researchers study the sample and seek to generalize their findings to the population.

Compared with case studies, surveys have both a strength and a weakness. The strength is that surveys let us collect information from a larger sample of people. A larger sample is better able to reflect the actual diversity of the population, which allows better generalizability. Therefore, if our sample is sufficiently large and diverse, we can generalize the data we collect from the survey to the larger population with more certainty than we could generalize information collected through a case study. The weakness is that, given the greater number of people involved, we cannot collect the same depth of information on each person that a case study would provide.
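The claim that larger samples generalize better can be illustrated with a small simulation. The sketch below assumes a hypothetical population of 10,000 people in which roughly 30% share some trait, repeatedly draws simple random samples of different sizes, and reports how far each sample's proportion lands from the population's on average. It shows only random sampling error: a large sample drawn in a biased way would not gain this benefit, which is why the text stresses samples that are both large and diverse.

```python
import random
import statistics


def build_population(size, trait_rate, seed=0):
    """Hypothetical population: True marks a person who has the trait of interest."""
    rng = random.Random(seed)
    return [rng.random() < trait_rate for _ in range(size)]


def mean_sampling_error(population, sample_size, repeats=500, seed=1):
    """Average gap between a random sample's trait proportion and the population's."""
    rng = random.Random(seed)
    true_rate = sum(population) / len(population)
    errors = []
    for _ in range(repeats):
        # Simple random sample, drawn without replacement.
        sample = rng.sample(population, sample_size)
        errors.append(abs(sum(sample) / sample_size - true_rate))
    return statistics.mean(errors)


population = build_population(10_000, trait_rate=0.30)
for n in (10, 100, 1000):
    print(f"n={n:>4}: mean error {mean_sampling_error(population, n):.3f}")
```

The average error shrinks as the sample grows, but with diminishing returns: sampling error falls roughly with the square root of the sample size, so a tenfold increase in n cuts the typical error by only about a factor of three.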

Another potential weakness of surveys is something we touched on earlier in this module: people do not always give accurate responses. They may lie, misremember, or answer questions in a way that they think makes them look good. For example, people may report drinking less alcohol than is actually the case.

Any number of research questions can be answered through the use of surveys. One real-world example is the research conducted by Jenkins, Ruppel, Kizer, Yehl, and Griffin (2012) on the backlash against the U.S. Arab-American community following the terrorist attacks of September 11, 2001. Jenkins and colleagues wanted to determine to what extent these negative attitudes toward Arab-Americans still existed nearly a decade after the attacks. In one study, 140 research participants filled out a 10-question survey that included questions asking directly about the participant’s overt prejudicial attitudes toward people of various ethnicities. The survey also asked indirect questions about how likely the participant would be to interact with a person of a given ethnicity in a variety of settings (such as, “How likely do you think it is that you would introduce yourself to a person of Arab-American descent?”). The results suggested that participants were unwilling to report prejudicial attitudes toward any ethnic group. However, their answers to the social-interaction questions differed significantly by ethnicity: participants indicated less willingness to interact socially with Arab-Americans than with members of the other ethnic groups. This suggested that the participants harbored subtle forms of prejudice against Arab-Americans, despite their assertions that this was not the case (Jenkins et al., 2012).

Think it Over

Research has shown that parental depressive symptoms are linked to a number of negative child outcomes. A classmate of yours is interested in the associations between parental depressive symptoms and actual child behaviors in everyday life [2] because this association remains largely unknown. After reading this section, what do you think is the best way to better understand such associations? Which method might result in the most valid data?

clinical or case study: observational research study focusing on one or a few people

correlational research: tests whether a relationship exists between two or more variables

descriptive research: research studies that do not test specific relationships between variables; they are used to describe general or specific behaviors and attributes that are observed and measured

experimental research: tests a hypothesis to determine cause-and-effect relationships

generalizability: inferring that the results for a sample apply to the larger population

inter-rater reliability: measure of agreement among observers on how they record and classify a particular event

naturalistic observation: observation of behavior in its natural setting

observer bias: when observations may be skewed to align with observer expectations

population: overall group of individuals that the researchers are interested in

sample: subset of individuals selected from the larger population

survey: list of questions to be answered by research participants—given as paper-and-pencil questionnaires, administered electronically, or conducted verbally—allowing researchers to collect data from a large number of people

CC Licensed Content, Shared Previously

- Descriptive Research and Case Studies. Authored by: Sonja Ann Miller for Lumen Learning. Provided by: Lumen Learning. License: CC BY-SA: Attribution-ShareAlike

- Approaches to Research. Authored by: OpenStax College. Located at: http://cnx.org/contents/[email protected]:iMyFZJzg@5/Approaches-to-Research. License: CC BY: Attribution. License Terms: Download for free at http://cnx.org/contents/[email protected]

- Descriptive Research. Provided by: Boundless. Located at: https://www.boundless.com/psychology/textbooks/boundless-psychology-textbook/researching-psychology-2/types-of-research-studies-27/descriptive-research-124-12659/. License: CC BY-SA: Attribution-ShareAlike

- Case Study. Provided by: Wikipedia. Located at: https://en.wikipedia.org/wiki/Case_study. License: CC BY-SA: Attribution-ShareAlike

- Rat man. Provided by: Wikipedia. Located at: https://en.wikipedia.org/wiki/Rat_Man#Legacy. License: CC BY-SA: Attribution-ShareAlike

- Case study in psychology. Provided by: Wikipedia. Located at: https://en.wikipedia.org/wiki/Case_study_in_psychology. License: CC BY-SA: Attribution-ShareAlike

- Research Designs. Authored by: Christie Napa Scollon. Provided by: Singapore Management University. Located at: https://nobaproject.com/modules/research-designs#reference-6. Project: The Noba Project. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

- Single subject design. Provided by: Wikipedia. Located at: https://en.wikipedia.org/wiki/Single-subject_design. License: CC BY-SA: Attribution-ShareAlike

- Single subject research. Provided by: Wikipedia. Located at: https://en.wikipedia.org/wiki/Single-subject_research#A-B-A-B. License: Public Domain: No Known Copyright

- Pills. Authored by: qimono. Provided by: Pixabay. Located at: https://pixabay.com/illustrations/pill-capsule-medicine-medical-1884775/. License: CC0: No Rights Reserved

- ABAB Design. Authored by: Doc. Yu. Provided by: Wikimedia. Located at: https://commons.wikimedia.org/wiki/File:A-B-A-B_Design.png. License: CC BY-SA: Attribution-ShareAlike