An introduction to different types of study design

Posted on 6th April 2021 by Hadi Abbas

Study designs are the set of methods and procedures used to collect and analyze data in a study.

Broadly speaking, there are 2 types of study designs: descriptive studies and analytical studies.

Descriptive studies

- Describe specific characteristics of a population of interest

- The most common forms are case reports and case series

- In a case report, we describe our experience with a single patient’s symptoms, signs, diagnosis, and treatment

- In a case series, several patients with similar experiences are grouped and described together.

Analytical Studies

Analytical studies are of 2 types: observational and experimental.

Observational studies are studies that we conduct without any intervention or experiment. In those studies, we purely observe the outcomes. On the other hand, in experimental studies, we conduct experiments and interventions.

Observational studies

Observational studies include many subtypes. Below, I will discuss the most common designs.

Cross-sectional study:

- This design is transverse: we take a specific sample at a specific point in time, without any follow-up

- It allows us to calculate the frequency of a disease (prevalence) or the frequency of a risk factor

- This design is easy to conduct

- For example – if we want to know the prevalence of migraine in a population, we can conduct a cross-sectional study whereby we take a sample from the population and calculate the proportion of people in it who have migraine headaches.
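As a minimal sketch of the migraine example, prevalence is simply the number of people with the condition divided by the number of people sampled. The function name and the counts below are hypothetical and chosen only for illustration.

```python
def prevalence(cases: int, sample_size: int) -> float:
    """Proportion of the sample that has the condition at the time of the survey."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 <= cases <= sample_size:
        raise ValueError("cases must be between 0 and sample_size")
    return cases / sample_size


# Hypothetical survey: 150 of 1,000 sampled people have migraine headaches.
p = prevalence(cases=150, sample_size=1000)
print(f"Prevalence of migraine: {p:.1%}")  # prints "Prevalence of migraine: 15.0%"
```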

Cohort study:

- We conduct this study by comparing two samples from the population: one sample with a risk factor and one without it

- It shows us the risk of developing the disease in individuals with the risk factor compared to those without it (RR = relative risk)

- Prospective: we follow the individuals into the future to see who develops the disease

- Retrospective: we look to the past to see who developed the disease (e.g. using medical records)

- This design is generally considered the strongest among the observational studies

- For example – to find out the relative risk of developing chronic obstructive pulmonary disease (COPD) among smokers, we take a sample including smokers and non-smokers. Then, we calculate the proportion of individuals who develop COPD in each group and compare the two.
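The relative risk in the COPD example can be sketched as the risk in the exposed group divided by the risk in the unexposed group. The function name and all counts below are hypothetical; they are not taken from any real study.

```python
def relative_risk(exposed_cases: int, exposed_total: int,
                  unexposed_cases: int, unexposed_total: int) -> float:
    """Risk of disease in the exposed group divided by risk in the unexposed group."""
    risk_exposed = exposed_cases / exposed_total
    risk_unexposed = unexposed_cases / unexposed_total
    if risk_unexposed == 0:
        raise ValueError("relative risk is undefined when the unexposed risk is zero")
    return risk_exposed / risk_unexposed


# Hypothetical cohort: 60 of 400 smokers and 30 of 600 non-smokers develop COPD.
rr = relative_risk(exposed_cases=60, exposed_total=400,
                   unexposed_cases=30, unexposed_total=600)
print(f"Relative risk: {rr:.2f}")  # prints "Relative risk: 3.00"
```

With these made-up numbers, smokers would have three times the risk of non-smokers. A relative risk of 1 would mean no difference between the groups.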

Case-Control Study:

- We conduct this study by comparing 2 groups: one group with the disease (cases) and another group without the disease (controls)

- This design is retrospective: we start from the outcome and look back at past exposures

- We aim to find out the odds of having been exposed to a risk factor among individuals with a specific disease, compared to individuals without it (odds ratio)

- Relatively easy to conduct

- For example – we want to study the odds of being a smoker among hypertensive patients compared to normotensive ones. To do so, we choose a group of patients diagnosed with hypertension and another group that serves as the control (normal blood pressure). Then we study their smoking history to find out if there is an association.
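For the hypertension example, the odds ratio can be sketched from a 2×2 table: the odds of smoking among cases divided by the odds of smoking among controls. The function name and the counts are hypothetical and serve only to show the arithmetic.

```python
def odds_ratio(exposed_cases: int, unexposed_cases: int,
               exposed_controls: int, unexposed_controls: int) -> float:
    """Odds of exposure among cases divided by odds of exposure among controls."""
    denominator = unexposed_cases * exposed_controls
    if denominator == 0:
        raise ValueError("odds ratio is undefined when a denominator cell is zero")
    return (exposed_cases * unexposed_controls) / denominator


# Hypothetical study: 100 hypertensive patients (40 smokers, 60 non-smokers)
# and 100 normotensive controls (25 smokers, 75 non-smokers).
or_ = odds_ratio(exposed_cases=40, unexposed_cases=60,
                 exposed_controls=25, unexposed_controls=75)
print(f"Odds ratio: {or_:.2f}")  # prints "Odds ratio: 2.00"
```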

Experimental Studies

- Also known as interventional studies

- Can involve animals and humans

- Pre-clinical trials involve animals

- Clinical trials are experimental studies involving humans

- In clinical trials, we study the effect of an intervention compared to another intervention or placebo. As an example, I have listed the four phases of a drug trial:

I: We aim to assess the safety of the drug (is it safe?)

II: We aim to assess the efficacy of the drug (does it work?)

III: We want to know if this drug is better than the old treatment (is it better?)

IV: We follow up to detect long-term side effects (can it stay on the market?)

- In randomized controlled trials, participants are randomly assigned to groups: one group receives the control, while the other receives the tested drug/intervention. These studies are the best way to evaluate the efficacy of a treatment.

Finally, the figure below will help you with your understanding of different types of study designs.


You may also be interested in the following blogs for further reading:

An introduction to randomized controlled trials

Case-control and cohort studies: a brief overview

Cohort studies: prospective and retrospective designs

Prevalence vs Incidence: what is the difference?




Guide to Experimental Design | Overview, 5 steps & Examples

Published on December 3, 2019 by Rebecca Bevans. Revised on June 21, 2023.

Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables.

Experimental design means creating a set of procedures to systematically test a hypothesis. A good experimental design requires a strong understanding of the system you are studying.

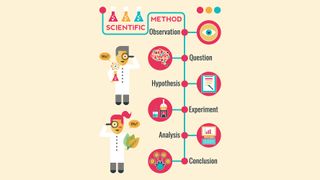

There are five key steps in designing an experiment:

- Consider your variables and how they are related

- Write a specific, testable hypothesis

- Design experimental treatments to manipulate your independent variable

- Assign subjects to groups, either between-subjects or within-subjects

- Plan how you will measure your dependent variable

For valid conclusions, you also need to select a representative sample and control any extraneous variables that might influence your results. This minimizes several types of research bias, particularly sampling bias, survivorship bias, and attrition bias as time passes. If random assignment of participants to control and treatment groups is impossible, unethical, or highly difficult, consider an observational study instead.

Table of contents

- Step 1: Define your variables

- Step 2: Write your hypothesis

- Step 3: Design your experimental treatments

- Step 4: Assign your subjects to treatment groups

- Step 5: Measure your dependent variable

- Other interesting articles

- Frequently asked questions about experiments

You should begin with a specific research question. We will work with two research question examples, one from health sciences and one from ecology:

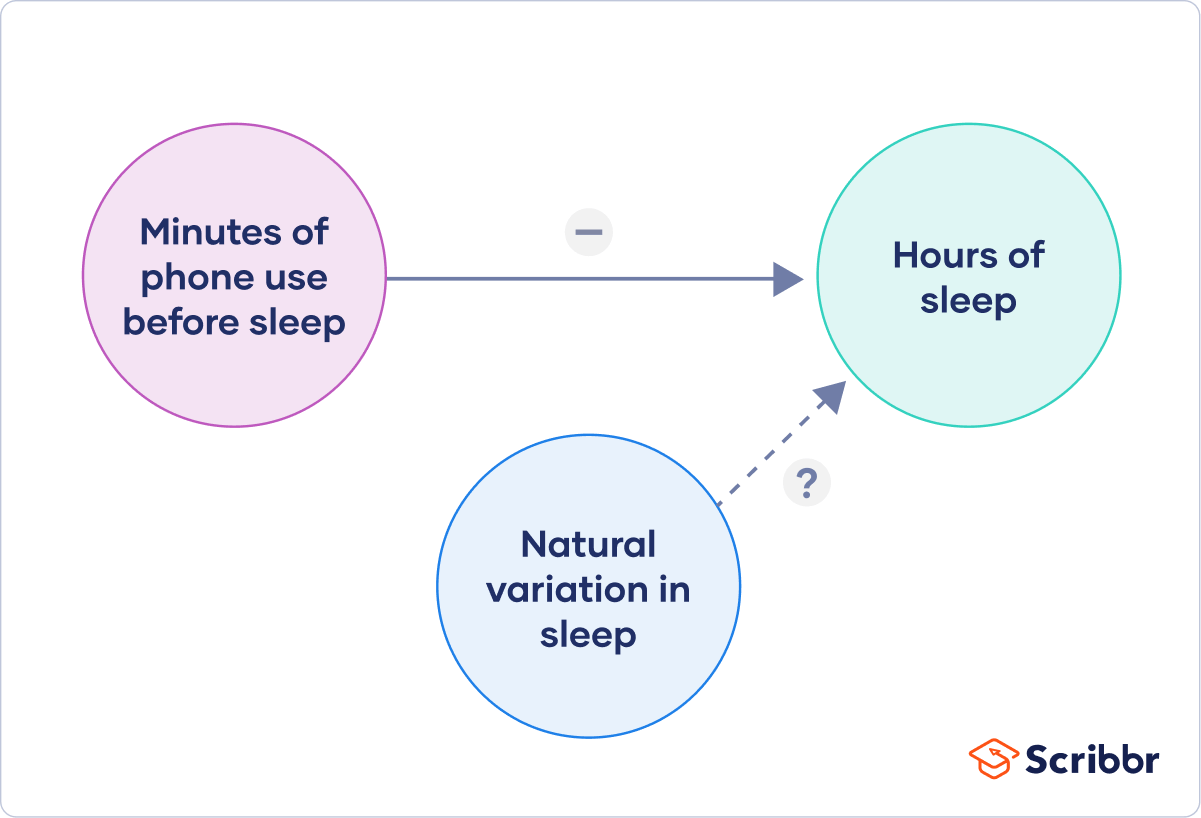

To translate your research question into an experimental hypothesis, you need to define the main variables and make predictions about how they are related.

Start by simply listing the independent and dependent variables.

| Research question | Independent variable | Dependent variable |

|---|---|---|

| Phone use and sleep | Minutes of phone use before sleep | Hours of sleep per night |

| Temperature and soil respiration | Air temperature just above the soil surface | CO2 respired from soil |

Then you need to think about possible extraneous and confounding variables and consider how you might control them in your experiment.

| Research question | Extraneous variable | How to control |

|---|---|---|

| Phone use and sleep | Variation in sleep patterns among individuals. | Measure the average difference between sleep with phone use and sleep without phone use rather than the average amount of sleep per treatment group. |

| Temperature and soil respiration | Soil moisture also affects respiration, and moisture can decrease with increasing temperature. | Monitor soil moisture and add water to make sure that soil moisture is consistent across all treatment plots. |

Finally, you can put these variables together into a diagram. Use arrows to show the possible relationships between variables and include signs to show the expected direction of the relationships.

Here we predict that increasing temperature will increase soil respiration and decrease soil moisture, while decreasing soil moisture will lead to decreased soil respiration.

Receive feedback on language, structure, and formatting

Professional editors proofread and edit your paper by focusing on:

- Academic style

- Vague sentences

- Style consistency

See an example

Now that you have a strong conceptual understanding of the system you are studying, you should be able to write a specific, testable hypothesis that addresses your research question.

| Research question | Null hypothesis (H0) | Alternate hypothesis (Ha) |

|---|---|---|

| Phone use and sleep | Phone use before sleep does not correlate with the amount of sleep a person gets. | Increasing phone use before sleep leads to a decrease in sleep. |

| Temperature and soil respiration | Air temperature does not correlate with soil respiration. | Increased air temperature leads to increased soil respiration. |

The next steps will describe how to design a controlled experiment . In a controlled experiment, you must be able to:

- Systematically and precisely manipulate the independent variable(s).

- Precisely measure the dependent variable(s).

- Control any potential confounding variables.

If your study system doesn’t match these criteria, there are other types of research you can use to answer your research question.

How you manipulate the independent variable can affect the experiment’s external validity – that is, the extent to which the results can be generalized and applied to the broader world.

First, you may need to decide how widely to vary your independent variable. In the soil-warming experiment, for example, you could increase air temperature:

- just slightly above the natural range for your study region.

- over a wider range of temperatures to mimic future warming.

- over an extreme range that is beyond any possible natural variation.

Second, you may need to choose how finely to vary your independent variable. Sometimes this choice is made for you by your experimental system, but often you will need to decide, and this will affect how much you can infer from your results. In the phone use experiment, for example, you could treat phone use as:

- a categorical variable: either as binary (yes/no) or as levels of a factor (no phone use, low phone use, high phone use).

- a continuous variable (minutes of phone use measured every night).

How you apply your experimental treatments to your test subjects is crucial for obtaining valid and reliable results.

First, you need to consider the study size: how many individuals will be included in the experiment? In general, the more subjects you include, the greater your experiment’s statistical power, which determines how much confidence you can have in your results.
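To make the link between study size and statistical power concrete, here is a rough sketch using the standard normal-approximation formula for comparing the means of two equally sized groups. It assumes a two-sided test, a common standard deviation, and hypothetical values for the effect size and spread of nightly sleep; the function name is also made up for this illustration. A real study would usually rely on dedicated power-analysis software.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect: float, sd: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate subjects per group needed to detect a mean difference of `effect`."""
    z = NormalDist()                       # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)     # critical value for a two-sided test
    z_power = z.inv_cdf(power)             # quantile matching the desired power
    n = 2 * ((z_alpha + z_power) * sd / effect) ** 2
    return math.ceil(n)


# Hypothetical: detect a 0.5-hour difference in sleep when the SD is 1 hour.
print(sample_size_per_group(effect=0.5, sd=1.0))   # prints 63
# Halving the detectable difference roughly quadruples the required sample.
print(sample_size_per_group(effect=0.25, sd=1.0))  # prints 252
```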

Then you need to randomly assign your subjects to treatment groups. Each group receives a different level of the treatment (e.g. no phone use, low phone use, high phone use).

You should also include a control group, which receives no treatment. The control group tells us what would have happened to your test subjects without any experimental intervention.

When assigning your subjects to groups, there are two main choices you need to make:

- A completely randomized design vs a randomized block design.

- A between-subjects design vs a within-subjects design.

Randomization

An experiment can be completely randomized or randomized within blocks (aka strata):

- In a completely randomized design, every subject is assigned to a treatment group at random.

- In a randomized block design (aka stratified random design), subjects are first grouped according to a characteristic they share, and then randomly assigned to treatments within those groups.

| Research question | Completely randomized design | Randomized block design |

|---|---|---|

| Phone use and sleep | Subjects are all randomly assigned a level of phone use using a random number generator. | Subjects are first grouped by age, and then phone use treatments are randomly assigned within these groups. |

| Temperature and soil respiration | Warming treatments are assigned to soil plots at random by using a number generator to generate map coordinates within the study area. | Soils are first grouped by average rainfall, and then treatment plots are randomly assigned within these groups. |
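The two randomization schemes for the phone use example could be sketched as follows. This is only an illustration under stated assumptions: the subject IDs, the two age groups, the helper names, and the fixed seed are all hypothetical, and dealing shuffled subjects out in turn is just one simple way to get equal group sizes.

```python
import random
from collections import Counter, defaultdict

TREATMENTS = ["none", "low", "high"]  # levels of phone use from the example


def completely_randomized(subjects, treatments, rng):
    """Shuffle all subjects, then deal them out to the treatments in turn."""
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {s: treatments[i % len(treatments)] for i, s in enumerate(shuffled)}


def randomized_block(subjects, block_of, treatments, rng):
    """Group subjects by a shared characteristic, then randomize within each group."""
    blocks = defaultdict(list)
    for s in subjects:
        blocks[block_of[s]].append(s)
    assignment = {}
    for members in blocks.values():
        assignment.update(completely_randomized(members, treatments, rng))
    return assignment


# Hypothetical subjects: six younger ("18-39") and six older ("40+") participants.
subjects = [f"S{i:02d}" for i in range(1, 13)]
age_group = {s: ("18-39" if i < 6 else "40+") for i, s in enumerate(subjects)}

rng = random.Random(42)  # fixed seed only so the sketch is repeatable
crd = completely_randomized(subjects, TREATMENTS, rng)
rbd = randomized_block(subjects, age_group, TREATMENTS, rng)

# In the block design, every age group has the same number of subjects per level.
for group in ("18-39", "40+"):
    counts = Counter(rbd[s] for s in subjects if age_group[s] == group)
    print(group, dict(counts))
```

In the completely randomized version, age groups may end up unevenly spread across treatment levels by chance; blocking by age rules that out.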

Sometimes randomization isn’t practical or ethical, so researchers create partially-random or even non-random designs. An experimental design where treatments aren’t randomly assigned is called a quasi-experimental design.

Between-subjects vs. within-subjects

In a between-subjects design (also known as an independent measures design or classic ANOVA design), individuals receive only one of the possible levels of an experimental treatment.

In medical or social research, you might also use matched pairs within your between-subjects design to make sure that each treatment group contains the same variety of test subjects in the same proportions.

In a within-subjects design (also known as a repeated measures design), every individual receives each of the experimental treatments consecutively, and their responses to each treatment are measured.

Within-subjects or repeated measures can also refer to an experimental design where an effect emerges over time, and individual responses are measured over time in order to measure this effect as it emerges.

Counterbalancing (randomizing or reversing the order of treatments among subjects) is often used in within-subjects designs to ensure that the order of treatment application doesn’t influence the results of the experiment.

| Research question | Between-subjects (independent measures) design | Within-subjects (repeated measures) design |

|---|---|---|

| Phone use and sleep | Subjects are randomly assigned a level of phone use (none, low, or high) and follow that level of phone use throughout the experiment. | Subjects are assigned consecutively to zero, low, and high levels of phone use throughout the experiment, and the order in which they follow these treatments is randomized. |

| Temperature and soil respiration | Warming treatments are assigned to soil plots at random and the soils are kept at this temperature throughout the experiment. | Every plot receives each warming treatment (1, 3, 5, 8, and 10 °C above ambient temperatures) consecutively over the course of the experiment, and the order in which they receive these treatments is randomized. |
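Counterbalancing in a within-subjects design can be sketched as assigning each subject one ordering of all treatments while using the possible orderings equally often. The subject IDs, the function name, and the seed below are hypothetical; with three treatments there are six possible orderings, so six subjects cover each ordering exactly once.

```python
import itertools
import random


def counterbalanced_orders(subjects, treatments, rng):
    """Give each subject one ordering of all treatments, spreading orderings evenly."""
    orders = list(itertools.permutations(treatments))
    rng.shuffle(orders)      # which ordering is handed out first is random
    shuffled = list(subjects)
    rng.shuffle(shuffled)    # which subject receives which ordering is random
    return {s: orders[i % len(orders)] for i, s in enumerate(shuffled)}


subjects = [f"S{i}" for i in range(1, 7)]
schedule = counterbalanced_orders(subjects, ["none", "low", "high"], random.Random(7))

# Each subject experiences every level of phone use, in a different order.
for subject in subjects:
    print(subject, " -> ".join(schedule[subject]))
```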


Finally, you need to decide how you’ll collect data on your dependent variable outcomes. You should aim for reliable and valid measurements that minimize research bias or error.

Some variables, like temperature, can be objectively measured with scientific instruments. Others may need to be operationalized to turn them into measurable observations. For example, to measure hours of sleep, you could:

- Ask participants to record what time they go to sleep and get up each day.

- Ask participants to wear a sleep tracker.

How precisely you measure your dependent variable also affects the kinds of statistical analysis you can use on your data.

Experiments are always context-dependent, and a good experimental design will take into account all of the unique considerations of your study system to produce information that is both valid and relevant to your research question.

If you want to know more about statistics, methodology, or research bias, make sure to check out some of our other articles with explanations and examples.

- Student’s t-distribution

- Normal distribution

- Null and Alternative Hypotheses

- Chi square tests

- Confidence interval

- Cluster sampling

- Stratified sampling

- Data cleansing

- Reproducibility vs Replicability

- Peer review

- Likert scale

Research bias

- Implicit bias

- Framing effect

- Cognitive bias

- Placebo effect

- Hawthorne effect

- Hindsight bias

- Affect heuristic

Experimental design means planning a set of procedures to investigate a relationship between variables. To design a controlled experiment, you need:

- A testable hypothesis

- At least one independent variable that can be precisely manipulated

- At least one dependent variable that can be precisely measured

When designing the experiment, you decide:

- How you will manipulate the variable(s)

- How you will control for any potential confounding variables

- How many subjects or samples will be included in the study

- How subjects will be assigned to treatment levels

Experimental design is essential to the internal and external validity of your experiment.

The key difference between observational studies and experimental designs is that a well-done observational study does not influence the responses of participants, while experiments do have some sort of treatment condition applied to at least some participants by random assignment.

A confounding variable, also called a confounder or confounding factor, is a third variable in a study examining a potential cause-and-effect relationship.

A confounding variable is related to both the supposed cause and the supposed effect of the study. It can be difficult to separate the true effect of the independent variable from the effect of the confounding variable.

In your research design, it’s important to identify potential confounding variables and plan how you will reduce their impact.

In a between-subjects design, every participant experiences only one condition, and researchers assess group differences between participants in various conditions.

In a within-subjects design, each participant experiences all conditions, and researchers test the same participants repeatedly for differences between conditions.

The word “between” means that you’re comparing different conditions between groups, while the word “within” means you’re comparing different conditions within the same group.

An experimental group, also known as a treatment group, receives the treatment whose effect researchers wish to study, whereas a control group does not. They should be identical in all other ways.


Bevans, R. (2023, June 21). Guide to Experimental Design | Overview, 5 steps & Examples. Scribbr. Retrieved June 18, 2024, from https://www.scribbr.com/methodology/experimental-design/


Design of Experimental Studies in Biomedical Sciences

- First Online: 06 February 2020


- Bagher Larijani ORCID: orcid.org/0000-0001-5386-7597 4 ,

- Akram Tayanloo-Beik ORCID: orcid.org/0000-0001-8370-9557 5 ,

- Moloud Payab ORCID: orcid.org/0000-0002-9311-8395 6 ,

- Mahdi Gholami 7 ,

- Motahareh Sheikh-Hosseini 8 &

- Mehran Nematizadeh 8

Part of the book series: Learning Materials in Biosciences ((LMB))


Proposing, investigating, and testing new theories leads to considerable progress in science, and appropriate experimental design is fundamental to this process. Well-designed experimental studies that take key points into account and are appropriately analyzed and reported can maximize scientific gains. However, some problems in this process still need to be addressed. Accordingly, using adequate methods based on the aim of the experiment, qualifying laboratory tests, addressing the reproducibility of results, applying appropriate statistics for data analysis, reporting data transparently, and conducting pilot studies are fundamental considerations that will be discussed in this chapter. The chapter begins with a brief definition of experimental studies and the different types of research in biosciences. Next, general principles of experimental study design and conduct are reviewed. Thereafter, some limitations and challenges in the field of experimental studies, as well as validation and standardization, are described.



Author information

Authors and affiliations.

Endocrinology and Metabolism Research Center, Endocrinology and Metabolism Clinical Sciences Institute, Tehran University of Medical Sciences, Tehran, Iran

Bagher Larijani

Cell Therapy and Regenerative Medicine Research Center, Endocrinology and Metabolism Molecular-Cellular Sciences Institute, Tehran University of Medical Sciences, Tehran, Iran

Akram Tayanloo-Beik

Obesity and Eating Habits Research Center, Endocrinology and Metabolism Molecular-Cellular Sciences Institute, Tehran University of Medical Sciences, Tehran, Iran

Moloud Payab

Department of Toxicology & Pharmacology, Faculty of Pharmacy, Toxicology and Poisoning Research Center, Tehran University of Medical Sciences, Tehran, Iran

Mahdi Gholami

Metabolomics and Genomics Research Center, Endocrinology and Metabolism Molecular-Cellular Sciences Institute, Tehran University of Medical Sciences, Tehran, Iran

Motahareh Sheikh-Hosseini & Mehran Nematizadeh


Editor information

Editors and affiliations.

Babak Arjmand

Brain and Spinal Cord Injury Research Center, Neuroscience Institute, Tehran University of Medical Sciences, Tehran, Iran

Parisa Goodarzi


Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Larijani, B., Tayanloo-Beik, A., Payab, M., Gholami, M., Sheikh-Hosseini, M., Nematizadeh, M. (2020). Design of Experimental Studies in Biomedical Sciences. In: Arjmand, B., Payab, M., Goodarzi, P. (eds) Biomedical Product Development: Bench to Bedside. Learning Materials in Biosciences. Springer, Cham. https://doi.org/10.1007/978-3-030-35626-2_4


DOI: https://doi.org/10.1007/978-3-030-35626-2_4

Published: 06 February 2020

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-35625-5

Online ISBN: 978-3-030-35626-2

eBook Packages: Biomedical and Life Sciences, Biomedical and Life Sciences (R0)


Chapter 4. Experimental Study Designs


Experimental study designs are the primary method for testing the effectiveness of new therapies and other interventions, including innovative drugs. By the 1930s, the pharmaceutical industry had adopted experimental methods and other research designs to develop and screen new compounds, improve production outputs, and test drugs for therapeutic benefits. The full potential of experimental methods in drug research was realized in the 1940s and 1950s with the growth in scientific knowledge and industrial technology. 1

In the 1960s, the controlled clinical trial, in which a group of patients receiving an experimental drug is compared with another group receiving a control drug or no treatment, became the standard for doing pharmaceutical research and measuring the therapeutic benefits of new drugs. 1 By the same time, the double-blind strategy of drug testing, in which both the patients and the researcher are unaware of which treatment is being taken by whom, had been adopted to limit the effect of external influences on the true pharmacological action of the drug. The drug regulations of the 1960s also reinforced the importance of controlled clinical trials by requiring that proof of effectiveness for new drugs be made through use of these research methods. 2,3

In pharmacoepidemiology, the primary use of experimental design is in performing clinical trials, most notably randomized, controlled clinical trials. 4 These studies involve people as the units of analysis. A variation on this experimental design is the community intervention study, in which groups of people, such as whole communities, are the unit of analysis. Key aspects of the clinical and community intervention trial designs are randomization, blinding, intention-to-treat analysis, and sample size determination.

An experiment is a study designed to compare the benefits of an intervention, such as a new drug therapy or prevention program, with standard treatments or no treatment, or to show cause and effect (see Figure 3-2 ). This type of study is performed prospectively. Subjects are selected from a study population, assigned to the various study groups, and monitored over time to determine which outcomes occur and which of them are produced by the new drug therapy, treatment, or intervention.

Experimental designs have numerous advantages compared with other epidemiological methods. Randomization, when used, tends to balance confounding variables across the various study groups, especially variables that might be associated with changes in the disease state or the outcome of the intervention under study. Detailed information and data are collected at the beginning of an experimental study to develop a baseline; this same type of information also is collected at specified follow-up periods throughout the study. The investigators have control over variables such as the dose or degree of intervention. The blinding process reduces distortion in assessment. And, of great value, and not possible with other methods, is the testing of hypotheses. Most important, this design is the only real test of cause–effect relationships.
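The claim that randomization tends to balance confounding variables can be checked with a small simulation. The following is a minimal sketch under stated assumptions: the covariate (age, drawn uniformly between 20 and 80), the sample size, and the seed are all hypothetical and are not taken from the chapter.

```python
import random
from statistics import mean

rng = random.Random(2024)  # fixed seed so the sketch is repeatable

# Hypothetical baseline covariate: ages drawn uniformly between 20 and 80.
ages = [rng.uniform(20, 80) for _ in range(10_000)]

# Randomize: shuffle the subject indices and split them into two equal groups.
indices = list(range(len(ages)))
rng.shuffle(indices)
half = len(indices) // 2
treatment_ages = [ages[i] for i in indices[:half]]
control_ages = [ages[i] for i in indices[half:]]

# With large groups, the mean ages should be close to each other.
age_gap = mean(treatment_ages) - mean(control_ages)
print(f"mean age, treatment: {mean(treatment_ages):.2f}")
print(f"mean age, control:   {mean(control_ages):.2f}")
print(f"difference:          {age_gap:.2f}")
```

The balance is only approximate and improves with sample size; in small trials a chance imbalance on an important covariate remains possible.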


Experimental Design – Types, Methods, Guide


Experimental Design

Experimental design is a process of planning and conducting scientific experiments to investigate a hypothesis or research question. It involves carefully designing an experiment that can test the hypothesis, and controlling for other variables that may influence the results.

Experimental design typically includes identifying the variables that will be manipulated or measured, defining the sample or population to be studied, selecting an appropriate method of sampling, choosing a method for data collection and analysis, and determining the appropriate statistical tests to use.

Types of Experimental Design

Here are the different types of experimental design:

Completely Randomized Design

In this design, participants are randomly assigned to one of two or more groups, and each group is exposed to a different treatment or condition.
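As an illustration, a completely randomized assignment might be sketched as follows. The function name `completely_randomized`, the participant IDs, and the treatment labels are hypothetical; the sketch simply shuffles the participants and deals them out to the groups in turn so that group sizes stay as equal as possible.

```python
import random

def completely_randomized(participants, treatments, seed=None):
    """Shuffle participants, then deal them out to treatments in rotation."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {t: [] for t in treatments}
    for i, person in enumerate(shuffled):
        groups[treatments[i % len(treatments)]].append(person)
    return groups

# Hypothetical participant IDs P01..P12 and two hypothetical arms.
participants = [f"P{i:02d}" for i in range(1, 13)]
assignment = completely_randomized(participants, ["drug", "placebo"], seed=42)
for treatment, members in assignment.items():
    print(treatment, sorted(members))
```

Passing a seed makes the allocation reproducible for auditing; in a real trial the allocation list would normally be concealed from the people enrolling participants.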

Randomized Block Design

This design involves dividing participants into blocks based on a specific characteristic, such as age or gender, and then randomly assigning participants within each block to one of two or more treatment groups.
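A randomized block assignment could look roughly like the sketch below. The function `randomized_block`, the age-group labels, and the participant records are assumptions made for illustration: participants are first grouped by the blocking characteristic and then randomized separately within each block.

```python
import random
from collections import defaultdict

def randomized_block(participants, block_of, treatments, seed=None):
    """Randomize to treatments separately within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for p in participants:
        blocks[block_of(p)].append(p)
    assignment = {}
    for _, members in sorted(blocks.items()):
        rng.shuffle(members)  # random order within the block
        for i, p in enumerate(members):
            assignment[p] = treatments[i % len(treatments)]
    return assignment

# Hypothetical records: (participant ID, age group used as the block).
people = [
    ("P1", "under_50"), ("P2", "under_50"), ("P3", "under_50"), ("P4", "under_50"),
    ("P5", "50_plus"), ("P6", "50_plus"), ("P7", "50_plus"), ("P8", "50_plus"),
]
block_assignment = randomized_block(
    people, block_of=lambda p: p[1], treatments=["treatment", "control"], seed=7
)
for person, arm in sorted(block_assignment.items()):
    print(person, arm)
```

Because randomization happens inside each block, every age group contributes equally to both arms, which removes the blocking variable as a source of imbalance.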

Factorial Design

In a factorial design, participants are randomly assigned to one of several groups, each of which receives a different combination of two or more independent variables.
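To make the idea of "combinations of independent variables" concrete, here is a minimal sketch of a 2 x 2 factorial layout. The factor names (`dose`, `schedule`), their levels, and the participant IDs are hypothetical; `itertools.product` enumerates every combination of levels, and participants are then randomized across the resulting cells.

```python
import random
from itertools import product

# Two hypothetical factors with two levels each give 2 x 2 = 4 cells.
factors = {"dose": ["low", "high"], "schedule": ["daily", "weekly"]}
cells = list(product(*factors.values()))

rng = random.Random(0)
participants = [f"P{i}" for i in range(1, 9)]
rng.shuffle(participants)

# Deal the shuffled participants out to the cells in rotation.
assignment = {p: cells[i % len(cells)] for i, p in enumerate(participants)}
for p, (dose, schedule) in sorted(assignment.items()):
    print(p, dose, schedule)
```

Crossing the factors in this way is what allows both main effects and their interaction to be estimated from a single experiment.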

Repeated Measures Design

In this design, each participant is exposed to all of the different treatments or conditions, either in a random order or in a predetermined order.

Crossover Design

This design involves randomly assigning participants to one of two or more treatment groups, with each group receiving one treatment during the first phase of the study and then switching to a different treatment during the second phase.
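For a two-treatment, two-period crossover, the assignment step can be sketched as below. The function `crossover_sequences` and the labels "A" and "B" are hypothetical; the sketch randomizes participants to the two possible treatment orders and does not model a washout period.

```python
import random

def crossover_sequences(participants, treatments=("A", "B"), seed=None):
    """Randomize participants to the two orders of a 2x2 crossover (AB or BA)."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    sequences = [tuple(treatments), tuple(reversed(treatments))]
    return {p: sequences[i % 2] for i, p in enumerate(shuffled)}

schedule = crossover_sequences([f"P{i}" for i in range(1, 11)], seed=1)
for person, (first, second) in sorted(schedule.items()):
    print(f"{person}: phase 1 = {first}, phase 2 = {second}")
```

Each participant receives both treatments, so every person acts as their own control; balancing the two orders helps separate treatment effects from period effects.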

Split-plot Design

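In this design, one factor is applied to larger experimental units (whole plots) and a second factor is applied to smaller units (subplots) within each whole plot, so the two factors are randomized at different levels. It is typically used when one factor is harder or more costly to change than the other.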

Nested Design

This design involves grouping participants within larger units, such as schools or households, and then randomly assigning these units to different treatment groups.

Laboratory Experiment

Laboratory experiments are conducted under controlled conditions, which allows for greater precision and accuracy. However, because laboratory conditions are not always representative of real-world conditions, the results of these experiments may not be generalizable to the population at large.

Field Experiment

Field experiments are conducted in naturalistic settings and allow for more realistic observations. However, because field experiments are not as controlled as laboratory experiments, they may be subject to more sources of error.

Experimental Design Methods

Experimental design methods refer to the techniques and procedures used to design and conduct experiments in scientific research. Here are some common experimental design methods:

Randomization

This involves randomly assigning participants to different groups or treatments to ensure that any observed differences between groups are due to the treatment and not to other factors.

Control Group

The use of a control group is an important experimental design method that involves having a group of participants that do not receive the treatment or intervention being studied. The control group is used as a baseline to compare the effects of the treatment group.

Blinding

Blinding involves keeping participants, researchers, or both unaware of which treatment group participants are in, in order to reduce the risk of bias in the results.

Counterbalancing

This involves systematically varying the order in which participants receive treatments or interventions in order to control for order effects.
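One common way to vary order systematically is a Latin square, in which each condition appears once in every position. The sketch below builds a simple cyclic Latin square; the function name `latin_square_orders` and the condition labels are hypothetical.

```python
def latin_square_orders(conditions):
    """Return n orders in which each condition occupies each position once."""
    n = len(conditions)
    return [[conditions[(row + col) % n] for col in range(n)] for row in range(n)]

orders = latin_square_orders(["A", "B", "C"])
for i, order in enumerate(orders, start=1):
    print(f"order {i}: {' -> '.join(order)}")
# order 1: A -> B -> C
# order 2: B -> C -> A
# order 3: C -> A -> B
```

Participants would then be spread evenly across the orders. Note that a cyclic square balances position only; it does not balance which condition immediately precedes which, so carryover effects may need a different scheme.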

Replication

Replication involves conducting the same experiment with different samples or under different conditions to increase the reliability and validity of the results.

Factorial Design

This experimental design method involves manipulating multiple independent variables simultaneously to investigate their combined effects on the dependent variable.

Blocking

This involves dividing participants into subgroups or blocks based on specific characteristics, such as age or gender, in order to reduce the risk of confounding variables.

Data Collection Method

Experimental design data collection methods are techniques and procedures used to collect data in experimental research. Here are some common experimental design data collection methods:

Direct Observation

This method involves observing and recording the behavior or phenomenon of interest in real time. It may involve the use of structured or unstructured observation, and may be conducted in a laboratory or naturalistic setting.

Self-report Measures

Self-report measures involve asking participants to report their thoughts, feelings, or behaviors using questionnaires, surveys, or interviews. These measures may be administered in person or online.

Behavioral Measures

Behavioral measures involve measuring participants’ behavior directly, such as through reaction time tasks or performance tests. These measures may be administered using specialized equipment or software.

Physiological Measures

Physiological measures involve measuring participants’ physiological responses, such as heart rate, blood pressure, or brain activity, using specialized equipment. These measures may be invasive or non-invasive, and may be administered in a laboratory or clinical setting.

Archival Data

Archival data involves using existing records or data, such as medical records, administrative records, or historical documents, as a source of information. These data may be collected from public or private sources.

Computerized Measures

Computerized measures involve using software or computer programs to collect data on participants’ behavior or responses. These measures may include reaction time tasks, cognitive tests, or other types of computer-based assessments.

Video Recording

Video recording involves recording participants’ behavior or interactions using cameras or other recording equipment. This method can be used to capture detailed information about participants’ behavior or to analyze social interactions.

Data Analysis Method

Experimental design data analysis methods refer to the statistical techniques and procedures used to analyze data collected in experimental research. Here are some common experimental design data analysis methods:

Descriptive Statistics

Descriptive statistics are used to summarize and describe the data collected in the study. This includes measures such as mean, median, mode, range, and standard deviation.
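These summary measures can be computed with Python's standard `statistics` module. The sketch below uses a small, made-up set of resting heart rates; the data are hypothetical and serve only to show the calls.

```python
import statistics

# Hypothetical resting heart rates (beats per minute) for six participants.
heart_rates = [72, 75, 75, 80, 84, 90]

summary = {
    "mean": statistics.mean(heart_rates),
    "median": statistics.median(heart_rates),
    "mode": statistics.mode(heart_rates),
    "range": max(heart_rates) - min(heart_rates),
    "stdev": statistics.stdev(heart_rates),  # sample standard deviation
}
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

`statistics.stdev` uses the sample (n - 1) formula; `statistics.pstdev` would be the choice if the data were the whole population.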

Inferential Statistics

Inferential statistics are used to make inferences or generalizations about a larger population based on the data collected in the study. This includes hypothesis testing and estimation.

Analysis of Variance (ANOVA)

ANOVA is a statistical technique used to compare means across two or more groups in order to determine whether there are significant differences between the groups. There are several types of ANOVA, including one-way ANOVA, two-way ANOVA, and repeated measures ANOVA.
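The logic of a one-way ANOVA can be shown by computing the F statistic by hand: variability between group means is compared with variability within groups. This is a minimal sketch with a hypothetical function name and toy data; a real analysis would normally use a statistics package and also report a p-value.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return the F statistic for a one-way ANOVA across the given groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k = len(groups)       # number of groups
    n = len(all_values)   # total number of observations
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within

# Toy data: three hypothetical treatment groups of three observations each.
f_stat = one_way_anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
print(f"F = {f_stat:.2f}")  # F = 3.00
```

For these toy data the between-group and within-group sums of squares are both 6, giving mean squares of 3 and 1 and therefore F = 3.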

Regression Analysis

Regression analysis is used to model the relationship between two or more variables in order to determine the strength and direction of the relationship. There are several types of regression analysis, including linear regression, logistic regression, and multiple regression.
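For the simplest case, a straight-line fit with one predictor, the least-squares slope and intercept can be computed directly. The function `fit_line` and the dose-response numbers below are hypothetical and chosen so the relationship is exactly linear.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data in which the response rises by 2 units per unit of dose.
dose = [1, 2, 3, 4]
response = [3, 5, 7, 9]
slope, intercept = fit_line(dose, response)
print(f"response = {intercept:.1f} + {slope:.1f} * dose")  # response = 1.0 + 2.0 * dose
```

The sign of the slope gives the direction of the relationship and its size gives the expected change in the outcome per unit change in the predictor.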

Factor Analysis

Factor analysis is used to identify underlying factors or dimensions in a set of variables. This can be used to reduce the complexity of the data and identify patterns in the data.

Structural Equation Modeling (SEM)

SEM is a statistical technique used to model complex relationships between variables. It can be used to test complex theories and models of causality.

Cluster Analysis

Cluster analysis is used to group similar cases or observations together based on similarities or differences in their characteristics.

Time Series Analysis

Time series analysis is used to analyze data collected over time in order to identify trends, patterns, or changes in the data.

Multilevel Modeling

Multilevel modeling is used to analyze data that is nested within multiple levels, such as students nested within schools or employees nested within companies.

Applications of Experimental Design

Experimental design is a versatile research methodology that can be applied in many fields. Here are some applications of experimental design:

- Medical Research: Experimental design is commonly used to test new treatments or medications for various medical conditions. This includes clinical trials to evaluate the safety and effectiveness of new drugs or medical devices.

- Agriculture: Experimental design is used to test new crop varieties, fertilizers, and other agricultural practices. This includes randomized field trials to evaluate the effects of different treatments on crop yield, quality, and pest resistance.

- Environmental science: Experimental design is used to study the effects of environmental factors, such as pollution or climate change, on ecosystems and wildlife. This includes controlled experiments to study the effects of pollutants on plant growth or animal behavior.

- Psychology: Experimental design is used to study human behavior and cognitive processes. This includes experiments to test the effects of different interventions, such as therapy or medication, on mental health outcomes.

- Engineering: Experimental design is used to test new materials, designs, and manufacturing processes in engineering applications. This includes laboratory experiments to test the strength and durability of new materials, or field experiments to test the performance of new technologies.

- Education: Experimental design is used to evaluate the effectiveness of teaching methods, educational interventions, and programs. This includes randomized controlled trials to compare different teaching methods or evaluate the impact of educational programs on student outcomes.

- Marketing: Experimental design is used to test the effectiveness of marketing campaigns, pricing strategies, and product designs. This includes experiments to test the impact of different marketing messages or pricing schemes on consumer behavior.

Examples of Experimental Design

Here are some examples of experimental design in different fields:

- Example in Medical research: A study that investigates the effectiveness of a new drug treatment for a particular condition. Patients are randomly assigned to either a treatment group or a control group, with the treatment group receiving the new drug and the control group receiving a placebo. The outcomes, such as improvement in symptoms or side effects, are measured and compared between the two groups.

- Example in Education research: A study that examines the impact of a new teaching method on student learning outcomes. Students are randomly assigned to either a group that receives the new teaching method or a group that receives the traditional teaching method. Student achievement is measured before and after the intervention, and the results are compared between the two groups.

- Example in Environmental science: A study that tests the effectiveness of a new method for reducing pollution in a river. Two sections of the river are selected, with one section treated with the new method and the other section left untreated. The water quality is measured before and after the intervention, and the results are compared between the two sections.

- Example in Marketing research: A study that investigates the impact of a new advertising campaign on consumer behavior. Participants are randomly assigned to either a group that is exposed to the new campaign or a group that is not. Their behavior, such as purchasing or product awareness, is measured and compared between the two groups.

- Example in Social psychology: A study that examines the effect of a new social intervention on reducing prejudice towards a marginalized group. Participants are randomly assigned to either a group that receives the intervention or a control group that does not. Their attitudes and behavior towards the marginalized group are measured before and after the intervention, and the results are compared between the two groups.

When to use Experimental Research Design

Experimental research design should be used when a researcher wants to establish a cause-and-effect relationship between variables. It is particularly useful when studying the impact of an intervention or treatment on a particular outcome.

Here are some situations where experimental research design may be appropriate:

- When studying the effects of a new drug or medical treatment: Experimental research design is commonly used in medical research to test the effectiveness and safety of new drugs or medical treatments. By randomly assigning patients to treatment and control groups, researchers can determine whether the treatment is effective in improving health outcomes.

- When evaluating the effectiveness of an educational intervention: An experimental research design can be used to evaluate the impact of a new teaching method or educational program on student learning outcomes. By randomly assigning students to treatment and control groups, researchers can determine whether the intervention is effective in improving academic performance.

- When testing the effectiveness of a marketing campaign: An experimental research design can be used to test the effectiveness of different marketing messages or strategies. By randomly assigning participants to treatment and control groups, researchers can determine whether the marketing campaign is effective in changing consumer behavior.

- When studying the effects of an environmental intervention: Experimental research design can be used to study the impact of environmental interventions, such as pollution reduction programs or conservation efforts. By randomly assigning locations or areas to treatment and control groups, researchers can determine whether the intervention is effective in improving environmental outcomes.

- When testing the effects of a new technology: An experimental research design can be used to test the effectiveness and safety of new technologies or engineering designs. By randomly assigning participants or locations to treatment and control groups, researchers can determine whether the new technology is effective in achieving its intended purpose.

How to Conduct Experimental Research

Here are the steps to conduct Experimental Research:

- Identify a Research Question: Start by identifying a research question that you want to answer through the experiment. The question should be clear, specific, and testable.

- Develop a Hypothesis: Based on your research question, develop a hypothesis that predicts the relationship between the independent and dependent variables. The hypothesis should be clear and testable.

- Design the Experiment: Determine the type of experimental design you will use, such as a between-subjects design or a within-subjects design. Also, decide on the experimental conditions, such as the number of independent variables, the levels of the independent variable, and the dependent variable to be measured.

- Select Participants: Select the participants who will take part in the experiment. They should be representative of the population you are interested in studying.

- Randomly Assign Participants to Groups: If you are using a between-subjects design, randomly assign participants to groups to control for individual differences.

- Conduct the Experiment: Conduct the experiment by manipulating the independent variable(s) and measuring the dependent variable(s) across the different conditions.

- Analyze the Data: Analyze the data using appropriate statistical methods to determine if there is a significant effect of the independent variable(s) on the dependent variable(s).

- Draw Conclusions: Based on the data analysis, draw conclusions about the relationship between the independent and dependent variables. If the results support the hypothesis, it is retained as consistent with the evidence. If the results do not support the hypothesis, it is rejected or revised.

- Communicate the Results: Finally, communicate the results of the experiment through a research report or presentation. Include the purpose of the study, the methods used, the results obtained, and the conclusions drawn.
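The "Analyze the Data" step above can be illustrated with a permutation test, one of several possible methods for comparing two randomized groups. This is a sketch under stated assumptions: the function `permutation_test`, the outcome scores, and the number of permutations are hypothetical. The idea is that if the treatment had no effect, the group labels would be arbitrary, so reshuffling them shows how often a difference at least as large as the observed one arises by chance.

```python
import random
from statistics import mean

def permutation_test(treatment, control, n_permutations=5000, seed=0):
    """Two-sided Monte Carlo permutation test for a difference in means."""
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        diff = mean(pooled[:n_t]) - mean(pooled[n_t:])
        if abs(diff) >= abs(observed):
            extreme += 1
    return observed, extreme / n_permutations

# Hypothetical outcome scores for two randomly assigned groups.
treatment_scores = [12, 14, 15, 16, 18]
control_scores = [8, 9, 10, 11, 12]
observed_diff, p_value = permutation_test(treatment_scores, control_scores)
print(f"observed difference in means: {observed_diff}")
print(f"approximate two-sided p-value: {p_value:.3f}")
```

The p-value is a Monte Carlo estimate and will vary slightly with the seed and the number of permutations. A permutation test is a natural fit for randomized experiments because it mirrors the random assignment itself, but a t-test or ANOVA would be equally reasonable choices here.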

Purpose of Experimental Design

The purpose of experimental design is to control and manipulate one or more independent variables to determine their effect on a dependent variable. Experimental design allows researchers to systematically investigate causal relationships between variables, and to establish cause-and-effect relationships between the independent and dependent variables. Through experimental design, researchers can test hypotheses and make inferences about the population from which the sample was drawn.

Experimental design provides a structured approach to designing and conducting experiments, ensuring that the results are reliable and valid. By carefully controlling for extraneous variables that may affect the outcome of the study, experimental design allows researchers to isolate the effect of the independent variable(s) on the dependent variable(s), and to minimize the influence of other factors that may confound the results.

Experimental design also allows researchers to generalize their findings to the larger population from which the sample was drawn. By randomly selecting participants and using statistical techniques to analyze the data, researchers can make inferences about the larger population with a high degree of confidence.

Overall, the purpose of experimental design is to provide a rigorous, systematic, and scientific method for testing hypotheses and establishing cause-and-effect relationships between variables. Experimental design is a powerful tool for advancing scientific knowledge and informing evidence-based practice in various fields, including psychology, biology, medicine, engineering, and social sciences.

Advantages of Experimental Design

Experimental design offers several advantages in research. Here are some of the main advantages:

- Control over extraneous variables: Experimental design allows researchers to control for extraneous variables that may affect the outcome of the study. By manipulating the independent variable and holding all other variables constant, researchers can isolate the effect of the independent variable on the dependent variable.

- Establishing causality: Experimental design allows researchers to establish causality by manipulating the independent variable and observing its effect on the dependent variable. This allows researchers to determine whether changes in the independent variable cause changes in the dependent variable.

- Replication: Experimental design allows researchers to replicate their experiments to ensure that the findings are consistent and reliable. Replication is important for establishing the validity and generalizability of the findings.

- Random assignment: Experimental design often involves randomly assigning participants to conditions. This helps to ensure that individual differences between participants are evenly distributed across conditions, which increases the internal validity of the study.

- Precision: Experimental design allows researchers to measure variables with precision, which can increase the accuracy and reliability of the data.

- Generalizability: If the study is well-designed, experimental design can increase the generalizability of the findings. By controlling for extraneous variables and using random assignment, researchers can increase the likelihood that the findings will apply to other populations and contexts.

Limitations of Experimental Design

Experimental design has some limitations that researchers should be aware of. Here are some of the main limitations:

- Artificiality: Experimental design often involves creating artificial situations that may not reflect real-world situations. This can limit the external validity of the findings, or the extent to which the findings can be generalized to real-world settings.

- Ethical concerns: Some experimental designs may raise ethical concerns, particularly if they involve manipulating variables that could cause harm to participants or if they involve deception.

- Participant bias: Participants in experimental studies may modify their behavior in response to the experiment, which can lead to participant bias.

- Limited generalizability: The conditions of the experiment may not reflect the complexities of real-world situations. As a result, the findings may not be applicable to all populations and contexts.

- Cost and time: Experimental design can be expensive and time-consuming, particularly if the experiment requires specialized equipment or if the sample size is large.

- Researcher bias: Researchers may unintentionally bias the results of the experiment if they have expectations or preferences for certain outcomes.

- Lack of feasibility: Experimental design may not be feasible in some cases, particularly if the research question involves variables that cannot be manipulated or controlled.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Research Study Design

First things first: determining your research design.

Medical research studies have a number of possible designs. A strong research project closely ties the research questions/hypotheses to the methodology to be used, the variables to be measured or manipulated, and the planned analysis of collected data. CRCL personnel can help you determine the proper research design and data analyses to adequately address your research question. CRCL can also assist you in performing the proper statistical analysis on your collected data. Our staff are experts in research methodology and statistical analysis and are proficient with multiple statistical methods and statistical software packages. The type of research study you conduct determines what the proper data analyses are and what conclusions you can draw from your data.

Identify the type of research study you are planning on conducting:

Descriptive:

A descriptive analysis provides basic information about a sample drawn from a population of interest. In a descriptive analysis, information is reported about the frequency and/or percentages of the qualities of interest in the sample (for example, the number of men or women with a disorder, or the percentage of people for whom a type of cancer progresses to stage 4 after a given amount of time). For variables that measure a quantity, measures of central tendency (mean, median, mode) and measures of dispersion (standard deviation, variance, range) may be calculated (for example, the average age at which cardiac arrhythmia symptoms first appeared, the median amount of time until a cancer metastasizes, or the modal rating of chronic pain). Descriptive studies simply describe; they do not inform regarding the relationship between variables, nor do they provide any information about how changes in one variable may cause changes in another.

Correlational:

A correlational study looks to examine a relationship between two or more variables and asks the question: are changes in one variable associated with changes in a second variable? The first variable is often referred to as the independent variable, the predictor variable, or the exogenous variable while the second variable is referred to as the dependent variable, the criterion variable, or the endogenous variable. Examples of correlational studies would be studies that examine the relationship between age and cholesterol level or between dose of Lisinopril and blood pressure. Common statistical methods used in this type of study are Pearson correlation, chi-square, and regression. It is important to remember that correlation does not imply causation, only the existence of a relationship. Why that relationship exists may be due to a causal mechanism but may also be due to other factors which influence both the variables.
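The Pearson correlation mentioned above can be computed directly from its definition. In this sketch the function name `pearson_r` and the age and cholesterol values are hypothetical illustration data, not results from any study.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical ages (years) and total cholesterol levels (mg/dL).
age = [35, 42, 50, 58, 63, 70]
cholesterol = [180, 195, 190, 210, 225, 230]
r = pearson_r(age, cholesterol)
print(f"r = {r:.2f}")
```

A value of r near +1 or -1 indicates a strong linear association, but as the text notes, it says nothing about whether one variable causes the other.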

Quasi-experimental:

Quasi-experimental studies examine the question of whether groups differ, but the groups must be naturally occurring groups, not groups created by the researcher. The design is quasi-experimental because of the lack of random assignment of participants (patients, rats, bacterial cultures) into the groups of interest; the groups themselves are predetermined. For example, an examination of the effect of the amount of vitamin D in the diet on chemotherapy outcomes for patients with prostate cancer versus colorectal cancer would be a quasi-experimental study because the experimenter has not controlled who has what type of cancer or their intake of vitamin D. The comparison group may also be a naturally occurring control group; for example, an experimenter may study the frequency of cardiac arrhythmias in an elderly population consisting of one group who regularly consume alcohol compared to one group who normally abstain from alcohol. Common statistical analyses in non-experimental studies like these are t-tests, Analysis of Variance (ANOVA), regression, multiple regression, and moderated multiple regression. While non-experimental studies cannot fully prove causation, they can point to the need for more controlled experimental tests.

Experimental:

The most stringent test of a scientific hypothesis is an experimental study. The hallmark of an experimental design is that rather than simply measuring an independent variable or selecting a preexisting group that differs on that variable, the experimenter manipulates that variable to create experimental and control groups. Random assignment is used to create the groups and, if the sample is large enough, helps to equate the experimental and control groups on all variables except for the variables of interest. An example of an experimental design would be randomly assigning patients with congestive heart failure into one of three groups (two doses of a new beta-blocker or a placebo condition) and examining ejection fraction after three months to determine if heart function differs between the three groups. Common statistical analyses in experimental studies like these are also t-tests, Analysis of Variance (ANOVA), regression, multiple regression, and moderated multiple regression. The stronger the controls in an experimental design the more justified one is in concluding that the manipulation of the independent variable caused the changes in the dependent variable.

Experimental Research Design — 6 mistakes you should never make!

Since their school days, students have performed scientific experiments whose results demonstrate and confirm the laws and theorems of science. These experiments rest on a strong foundation of experimental research design.

An experimental research design helps researchers execute their research objectives with more clarity and transparency.

In this article, we will not only discuss the key aspects of experimental research designs but also the issues to avoid and problems to resolve while designing your research study.


What Is Experimental Research Design?

Experimental research design is a framework of protocols and procedures created to conduct experimental research with a scientific approach using two sets of variables. The first set of variables acts as a constant and is used to measure the differences in the second set. Experimental research is a prime example of quantitative research.

Experimental research helps a researcher gather the necessary data for making better research decisions and determining the facts of a research study.

When Can a Researcher Conduct Experimental Research?

A researcher can conduct experimental research in the following situations —

- When time is an important factor in establishing a relationship between the cause and effect.

- When there is an invariable or never-changing behavior between the cause and effect.

- Finally, when the researcher wishes to understand the importance of the cause and effect.

Importance of Experimental Research Design

Choosing a quality research design is the foundation for building a research study that yields significant, publishable results. Moreover, an effective research design helps establish quality decision-making procedures, structures the research to lead to easier data analysis, and addresses the main research question. Therefore, it is essential to devote undivided attention and time to creating an experimental research design before beginning the practical experiment.

By creating a research design, a researcher is also giving oneself time to organize the research, set up relevant boundaries for the study, and increase the reliability of the results. Through all these efforts, one could also avoid inconclusive results. If any part of the research design is flawed, it will reflect on the quality of the results derived.

Types of Experimental Research Designs

Based on the methods used to collect data in experimental studies, the experimental research designs are of three primary types:

1. Pre-experimental Research Design

A pre-experimental research design is used when one or more groups are observed after the factors presumed to cause an effect have been applied. The pre-experimental design helps researchers understand whether further investigation is necessary for the groups under observation.

Pre-experimental research is of three types —

- One-shot Case Study Research Design

- One-group Pretest-posttest Research Design

- Static-group Comparison

2. True Experimental Research Design

A true experimental research design relies on statistical analysis to support or refute a researcher’s hypothesis. It is one of the most accurate forms of research because it provides specific scientific evidence. Furthermore, out of all the types of experimental designs, only a true experimental design can establish a cause-effect relationship within a group. However, a true experiment must satisfy these three conditions:

- There is a control group that is not subjected to changes and an experimental group that will experience the changed variables

- A variable that can be manipulated by the researcher

- Random assignment of subjects to the control and experimental groups

This type of experimental research is commonly observed in the physical sciences.
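The randomization requirement above can be illustrated with a minimal Python sketch. The function name `randomly_assign`, the participant labels, and the fixed seed are assumptions made for illustration only; they do not come from any particular study or library.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two equally sized groups.

    Hypothetical helper: every participant has the same chance of landing
    in either group, which is the defining feature of random assignment.
    """
    rng = random.Random(seed)      # a seeded generator makes the split reproducible
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "experimental": shuffled[half:]}


# Twenty made-up participant IDs: P01 ... P20
participants = [f"P{i:02d}" for i in range(1, 21)]
groups = randomly_assign(participants, seed=42)

print("Control:     ", groups["control"])
print("Experimental:", groups["experimental"])
```

Fixing the seed is a convenience for documenting and auditing the allocation. In a real study the allocation procedure would normally be specified in the protocol before recruitment begins.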

3. Quasi-experimental Research Design

The word “quasi” means “resembling”. A quasi-experimental design resembles a true experimental design; the difference between the two lies in how participants are assigned to groups. In this research design, an independent variable is manipulated, but the participants are not randomly assigned to groups. This type of research design is used in field settings where random assignment is either not feasible or not required.

The classification of the research subjects, conditions, or groups determines the type of research design to be used.

Advantages of Experimental Research

Experimental research allows you to test your idea in a controlled environment before taking the research to clinical trials. Moreover, it provides the best method to test your theory because of the following advantages:

- Researchers have firm control over variables to obtain results.

- The subject area does not limit the effectiveness of experimental research; it can be applied in any field.

- The results are specific.

- After the results are analyzed, findings from the same dataset can be repurposed for similar research ideas.

- Researchers can identify the cause and effect of the hypothesis and further analyze this relationship to determine in-depth ideas.

- Experimental research makes an ideal starting point. The collected data could be used as a foundation to build new research ideas for further studies.

6 Mistakes to Avoid While Designing Your Research

There is no order to this list, and any one of these issues can seriously compromise the quality of your research. You could refer to the list as a checklist of what to avoid while designing your research.

1. Invalid Theoretical Framework

Researchers often neglect to check whether their hypothesis can logically be tested. If your research design lacks basic assumptions or postulates, it is fundamentally flawed and you need to rework your research framework.

2. Inadequate Literature Study

Without a comprehensive research literature review, it is difficult to identify and fill the knowledge and information gaps. Furthermore, you need to clearly state how your research will contribute to the research field, either by adding value to the pertinent literature or challenging previous findings and assumptions.

3. Insufficient or Incorrect Statistical Analysis

Statistical results are among the most trusted forms of scientific evidence. The ultimate goal of a research experiment is to obtain valid and reliable evidence. Therefore, incorrect statistical analysis could compromise the quality of any quantitative research.

4. Undefined Research Problem

This is one of the most basic aspects of research design. The research problem statement must be clear; to achieve that, set a framework for developing research questions that address the core problems.

5. Research Limitations

Every study has limitations of some kind. You should anticipate those limitations and incorporate them into your conclusion, as well as into the basic research design. Include a statement in your manuscript about any perceived limitations and how you considered them while designing your experiment and drawing your conclusions.

6. Ethical Implications

The most important, yet least discussed, topic is ethics. Your research design must include ways to minimize any risk to your participants while still addressing the research problem or question at hand. If you cannot meet ethical norms in your study, your research objectives and validity could be questioned.

Experimental Research Design Example

In an experimental design, a researcher gathers plant samples and then randomly assigns half the samples to photosynthesize in sunlight and the other half to be kept in a dark box without sunlight, while controlling all the other variables (nutrients, water, soil, etc.).

By comparing their outcomes in biochemical tests, the researcher can confirm that the changes in the plants were due to the sunlight and not the other variables.
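One way to make such a comparison concrete is a permutation test on the difference between the two group means. The sketch below is only an illustration: the measurement values are invented, the units are arbitrary, and the function name `permutation_p_value` is hypothetical. The example does not specify which biochemical test or statistical method the researcher would use.

```python
import random
from statistics import fmean

# Hypothetical biochemical measurements (arbitrary units), invented for illustration.
sunlight = [12.1, 13.4, 11.8, 14.0, 12.9, 13.7]
dark = [8.2, 7.9, 9.1, 8.6, 7.4, 8.8]


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in group means.

    Repeatedly reshuffles the pooled values into two groups of the original
    sizes and counts how often the shuffled difference is at least as large
    as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(fmean(group_a) - fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:n_a]) - fmean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for floating-point rounding
            extreme += 1
    return extreme / n_permutations


observed_difference = fmean(sunlight) - fmean(dark)
p_value = permutation_p_value(sunlight, dark)

print(f"Difference in means: {observed_difference:.2f}")
print(f"Permutation p-value: {p_value:.4f}")
```

A small p-value indicates that a difference this large would rarely arise from the random group assignment alone. Combined with the control of the other variables, this supports attributing the difference to sunlight, although a single test does not by itself prove it.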

Experimental research is often the final stage of the research process and is considered to provide conclusive and specific results. However, it does not suit every study. It demands considerable resources, time, and money, and is difficult to conduct unless a foundation of prior research exists. Even so, it is widely used in research institutes and commercial industries because it yields the most conclusive results within the scientific approach.

Have you worked on research designs? How was your experience creating an experimental design? What difficulties did you face? Do write to us or comment below and share your insights on experimental research designs!

Frequently Asked Questions

Randomization is important in experimental research because it reduces bias in the results of the experiment. It also helps attribute a cause-effect relationship to the intervention in a particular group of interest.

Experimental research design lays the foundation of a study and structures the research to establish a quality decision-making process.

There are 3 types of experimental research designs. These are pre-experimental research design, true experimental research design, and quasi-experimental research design.

There are two main differences between a true experimental and a quasi-experimental design: 1. In quasi-experimental research, assignment to groups is non-random, whereas in a true experimental design it is random. 2. A true experimental design always has a control group, whereas a quasi-experimental design may not.

Experimental research establishes a cause-effect relationship by testing a theory or hypothesis using experimental groups or control variables. In contrast, descriptive research describes a study or a topic by defining the variables under it and answering the questions related to the same.

Reflections on experimental research in medical education

Affiliation.

- 1 Mayo Clinic College of Medicine, Rochester, Minnesota 55905, USA.

- PMID: 18427941

- DOI: 10.1007/s10459-008-9117-3

As medical education research advances, it is important that education researchers employ rigorous methods for conducting and reporting their investigations. In this article we discuss several important yet oft neglected issues in designing experimental research in education. First, randomization controls for only a subset of possible confounders. Second, the posttest-only design is inherently stronger than the pretest-posttest design, provided the study is randomized and the sample is sufficiently large. Third, demonstrating the superiority of an educational intervention in comparison to no intervention does little to advance the art and science of education. Fourth, comparisons involving multifactorial interventions are hopelessly confounded, have limited application to new settings, and do little to advance our understanding of education. Fifth, single-group pretest-posttest studies are susceptible to numerous validity threats. Finally, educational interventions (including the comparison group) must be described in detail sufficient to allow replication.
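The proviso that the sample be "sufficiently large" can be explored with a small simulation. One reading of it, assumed here rather than stated in the abstract, is that random assignment balances baseline characteristics well on average only when groups are large. In this minimal sketch the baseline scores (mean 70, standard deviation 10), the sample sizes, and the function name `mean_baseline_gap` are all hypothetical.

```python
import random
from statistics import fmean


def mean_baseline_gap(n_participants, n_trials=200, seed=0):
    """Average absolute baseline difference between two randomized groups.

    For each simulated trial, draws baseline scores, randomly splits the
    participants in half, and records how far apart the group means are.
    """
    rng = random.Random(seed)
    half = n_participants // 2
    gaps = []
    for _ in range(n_trials):
        # Assumed baseline distribution: normal with mean 70 and SD 10.
        baseline = [rng.gauss(70, 10) for _ in range(n_participants)]
        rng.shuffle(baseline)  # random assignment to the two groups
        gaps.append(abs(fmean(baseline[:half]) - fmean(baseline[half:])))
    return fmean(gaps)


small_gap = mean_baseline_gap(20)    # 10 participants per group
large_gap = mean_baseline_gap(2000)  # 1000 participants per group

print(f"Mean baseline gap, n=20:   {small_gap:.2f}")
print(f"Mean baseline gap, n=2000: {large_gap:.2f}")
```

In this simulation the average baseline gap between groups shrinks as the sample grows, which is consistent with relying on randomization alone, without a pretest, only when the sample is large.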
